SSCP Dump Free – 50 Practice Questions to Sharpen Your Exam Readiness.

Looking for a reliable way to prepare for your SSCP certification? Our SSCP Dump Free includes 50 exam-style practice questions designed to reflect real test scenarios, helping you study smarter and pass with confidence.

Working through a free set of SSCP practice questions can give you an edge in your exam prep by helping you:

- Understand the format and types of questions you’ll face

- Pinpoint weak areas and focus your study efforts

- Boost your confidence with realistic question practice

Below, you will find 50 free questions from our SSCP Dump Free collection. These cover key topics and are structured to simulate the difficulty level of the real exam, making them a valuable tool for review or final prep.

One purpose of a security awareness program is to modify:

A. employees’ attitudes and behaviors towards the enterprise’s security posture

B. management’s approach towards the enterprise’s security posture

C. attitudes of employees with sensitive data

D. corporate attitudes about safeguarding data

Which of the following is implemented through scripts or smart agents that replay a user's multiple log-ins against authentication servers to verify the user's identity and permit access to system services?

A. Single Sign-On

B. Dynamic Sign-On

C. Smart cards

D. Kerberos

Which of the following was developed by the National Computer Security Center (NCSC) for the US Department of Defense?

A. TCSEC

B. ITSEC

C. DIACAP

D. NIACAP

Which of the following remote access authentication systems is the most robust?

A. TACACS+

B. RADIUS

C. PAP

D. TACACS

Which of the following is addressed by Kerberos?

A. Confidentiality and Integrity

B. Authentication and Availability

C. Validation and Integrity

D. Auditability and Integrity

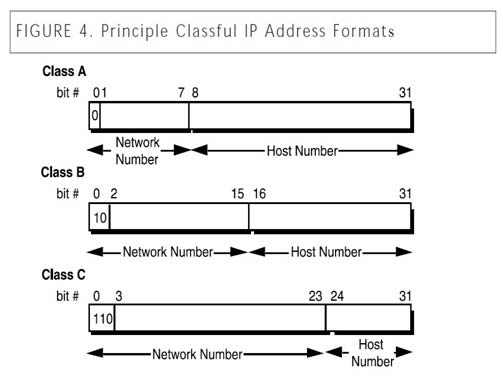

In the days before CIDR (Classless Inter-Domain Routing), networks were commonly organized by classes. Which of the following would have been true of a Class B network?

A. The first bit of the IP address would be set to zero.

B. The first bit of the IP address would be set to one and the second bit set to zero.

C. The first two bits of the IP address would be set to one, and the third bit set to zero.

D. The first three bits of the IP address would be set to one.
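Classful addressing identified a network's class from the leading bits of the first octet: 0 for Class A, 10 for Class B, 110 for Class C, and 1110 for Class D (multicast). The minimal Python sketch below illustrates that rule; the function name `address_class` is hypothetical and it inspects only the first octet.

```python
import ipaddress

def address_class(addr: str) -> str:
    """Return the pre-CIDR class of an IPv4 address from its leading bits."""
    first_octet = int(ipaddress.IPv4Address(addr)) >> 24
    if first_octet >> 7 == 0b0:      # leading bit 0
        return "A"
    if first_octet >> 6 == 0b10:     # leading bits 10
        return "B"
    if first_octet >> 5 == 0b110:    # leading bits 110
        return "C"
    if first_octet >> 4 == 0b1110:   # leading bits 1110 (multicast)
        return "D"
    return "E"                       # leading bits 1111 (reserved)

for ip in ("10.1.2.3", "172.16.0.1", "192.168.1.1"):
    # Show the first octet in binary next to the class it implies.
    print(ip, format(int(ip.split(".")[0]), "08b"), address_class(ip))
```

For example, 172 is `10101100` in binary, so 172.16.0.1 falls in Class B.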

When using IPsec, the ESP and AH protocols both provide integrity services. However, when using AH, special attention must be paid if one of the peers uses NAT for address translation. Which of the items below would affect the use of AH and its Integrity Check Value (ICV) the most?

A. Key session exchange

B. Packet Header Source or Destination address

C. VPN cryptographic key size

D. Cryptographic algorithm used

Answer: B
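AH's ICV also covers the immutable fields of the outer IP header, including the source and destination addresses, so a NAT device that rewrites an address makes the receiver's recomputed ICV disagree with the one that was sent. The Python sketch below is a deliberately simplified model, not the real AH layout from RFC 4302: it computes HMAC-SHA-256 over just the two addresses plus the payload, and the key and addresses are hypothetical.

```python
import hashlib
import hmac
import ipaddress

KEY = b"hypothetical-shared-sa-key"  # stand-in for the SA's authentication key

def icv(src: str, dst: str, payload: bytes) -> bytes:
    # Simplified: authenticate the addresses plus payload (real AH covers more fields).
    data = (ipaddress.IPv4Address(src).packed
            + ipaddress.IPv4Address(dst).packed
            + payload)
    return hmac.new(KEY, data, hashlib.sha256).digest()

payload = b"hello"
sent_icv = icv("10.0.0.5", "203.0.113.7", payload)

# In transit, a NAT device rewrites the private source address to a public one,
# so the receiver recomputes the ICV over a different source address.
received_icv = icv("198.51.100.20", "203.0.113.7", payload)

print(hmac.compare_digest(sent_icv, received_icv))  # False: integrity check fails
```

The key exchange, key size, and algorithm are unchanged in this scenario; only the address in the header differs, and that alone breaks the check.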

Unshielded Twisted Pair cabling is a:

A. four-pair wire medium that is used in a variety of networks.

B. three-pair wire medium that is used in a variety of networks.

C. two-pair wire medium that is used in a variety of networks.

D. one-pair wire medium that is used in a variety of networks.

Which of the following is NOT a technique used to perform a penetration test?

A. traffic padding

B. scanning and probing

C. war dialing

D. sniffing

Which of the following is NOT true concerning Application Control?

A. It limits end users’ use of applications in such a way that only particular screens are visible.

B. Only specific records can be requested through the application controls

C. Particular usage of the application can be recorded for audit purposes

D. It is non-transparent to the endpoint applications, so changes are needed to the applications and databases involved.

Which of the following is an example of an active attack?

A. Traffic analysis

B. Scanning

C. Eavesdropping

D. Wiretapping

A timely review of system access audit records would be an example of which of the basic security functions?

A. avoidance.

B. deterrence.

C. prevention.

D. detection.

Which access control model is also called Non-Discretionary Access Control (NDAC)?

A. Lattice based access control

B. Mandatory access control

C. Role-based access control

D. Label-based access control

An access control model in which a central authority determines what subjects can have access to certain objects, based on the organizational security policy, is called:

A. Mandatory Access Control

B. Discretionary Access Control

C. Non-Discretionary Access Control

D. Rule-based Access control

At which layer of the ISO/OSI model does fiber optics work?

A. Network layer

B. Transport layer

C. Data link layer

D. Physical layer

Which of the following statements pertaining to software testing approaches is correct?

A. A bottom-up approach allows interface errors to be detected earlier.

B. A top-down approach allows errors in critical modules to be detected earlier.

C. The test plan and results should be retained as part of the system’s permanent documentation.

D. Black box testing is predicated on a close examination of procedural detail.

What are the components of an object's sensitivity label?

A. A Classification Set and a single Compartment.

B. A single classification and a single compartment.

C. A Classification Set and user credentials.

D. A single classification and a Compartment Set.
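A sensitivity label pairs exactly one hierarchical classification with a set of non-hierarchical compartments (categories). One label dominates another when its classification is at least as high and its compartment set is a superset of the other's. The minimal Python sketch below models that; the level ordering and compartment names are illustrative assumptions.

```python
from dataclasses import dataclass

# Illustrative ordering of hierarchical classifications (assumption).
LEVELS = {"Unclassified": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}

@dataclass(frozen=True)
class SensitivityLabel:
    classification: str      # exactly one classification
    compartments: frozenset  # a set of zero or more compartments

    def dominates(self, other: "SensitivityLabel") -> bool:
        # Higher-or-equal level AND every compartment of `other` is included.
        return (LEVELS[self.classification] >= LEVELS[other.classification]
                and self.compartments >= other.compartments)

user = SensitivityLabel("Secret", frozenset({"NUCLEAR", "CRYPTO"}))
doc = SensitivityLabel("Confidential", frozenset({"CRYPTO"}))
print(user.dominates(doc))  # True
print(doc.dominates(user))  # False
```

Note that a higher classification alone is not enough: a Top Secret label with no compartments does not dominate a Confidential label that carries the CRYPTO compartment.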

Which of the following usually provides reliable, real-time information without consuming network or host resources?

A. network-based IDS

B. host-based IDS

C. application-based IDS

D. firewall-based IDS

Where parties do not have a shared secret and large quantities of sensitive information must be passed, the most efficient means of transferring information is to use Hybrid Encryption Methods. What does this mean?

A. Use of public key encryption to secure a secret key, and message encryption using the secret key.

B. Use of the recipient’s public key for encryption and decryption based on the recipient’s private key.

C. Use of software encryption assisted by a hardware encryption accelerator.

D. Use of elliptic curve encryption.
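Hybrid encryption uses slow public-key cryptography only to protect a small, randomly generated secret (session) key, then encrypts the bulk message with a fast symmetric cipher under that key. The Python sketch below shows only that structure and is NOT secure: it assumes textbook RSA built from two fixed Mersenne primes and a toy SHA-256 counter-mode keystream as the "symmetric cipher". All function names are hypothetical; real systems would use a vetted library with padded RSA (for example OAEP) and an authenticated cipher such as AES-GCM.

```python
import hashlib
import secrets

# Toy RSA key pair from two known Mersenne primes (INSECURE, illustration only).
P, Q = 2**89 - 1, 2**107 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)

def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR data with a SHA-256 counter-mode keystream."""
    out = bytearray()
    for start in range(0, len(data), 32):
        counter = (start // 32).to_bytes(8, "big")
        block = hashlib.sha256(key + counter).digest()
        out.extend(b ^ k for b, k in zip(data[start:start + 32], block))
    return bytes(out)

def hybrid_encrypt(message: bytes):
    session_key = secrets.token_bytes(16)  # fresh secret (symmetric) key
    # Public-key step: wrap only the 16-byte session key with the recipient's public key.
    wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)
    # Symmetric step: encrypt the bulk data under the session key.
    return wrapped_key, keystream_xor(session_key, message)

def hybrid_decrypt(wrapped_key: int, ciphertext: bytes) -> bytes:
    # Unwrap the session key with the private key, then decrypt symmetrically.
    session_key = pow(wrapped_key, D, N).to_bytes(16, "big")
    return keystream_xor(session_key, ciphertext)

message = b"large quantities of sensitive information " * 50
wrapped, ciphertext = hybrid_encrypt(message)
print(hybrid_decrypt(wrapped, ciphertext) == message)  # True
```

The expensive public-key operation runs once on a 16-byte key regardless of message size, which is why the hybrid approach is the efficient choice for large transfers.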

What is one disadvantage of content-dependent protection of information?

A. It increases processing overhead.

B. It requires additional password entry.

C. It exposes the system to data locking.

D. It limits the user’s individual address space.

Which of the following is NOT a transaction redundancy implementation?

A. on-site mirroring

B. Electronic Vaulting

C. Remote Journaling

D. Database Shadowing

What is the main focus of the Bell-LaPadula security model?

A. Accountability

B. Integrity

C. Confidentiality

D. Availability

What is the term for the percentage of valid subjects that are falsely rejected by a biometric authentication system?

A. False Rejection Rate (FRR) or Type I Error

B. False Acceptance Rate (FAR) or Type II Error

C. Crossover Error Rate (CER)

D. True Rejection Rate (TRR) or Type III Error

Answer: A
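FRR (Type I error) is false rejections divided by genuine attempts; FAR (Type II error) is false acceptances divided by impostor attempts. Raising the match threshold lowers FAR but raises FRR, and the Crossover Error Rate (CER) is the point where the two are equal. The Python sketch below computes both rates; the match scores are invented purely for illustration, and the "accept when score >= threshold" rule is an assumption.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (FRR, FAR) as percentages for a match-score threshold.

    A sample is accepted when its score is >= threshold.
    """
    false_rejects = sum(s < threshold for s in genuine_scores)    # Type I errors
    false_accepts = sum(s >= threshold for s in impostor_scores)  # Type II errors
    frr = 100 * false_rejects / len(genuine_scores)
    far = 100 * false_accepts / len(impostor_scores)
    return frr, far

# Hypothetical match scores, invented for illustration only.
genuine = [0.91, 0.85, 0.78, 0.66, 0.95, 0.88, 0.72, 0.81]
impostor = [0.12, 0.35, 0.41, 0.70, 0.22, 0.58, 0.30, 0.09]

for t in (0.5, 0.7, 0.9):
    print(t, error_rates(genuine, impostor, t))
# With this toy data, FRR == FAR (12.5%) at threshold 0.7: the crossover point.
```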

What does the simple security (ss) property mean in the Bell-LaPadula model?

A. No read up

B. No write down

C. No read down

D. No write up
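In Bell-LaPadula, the simple security property forbids reading up and the *-property (star property) forbids writing down; together they keep information flowing only upward, which protects confidentiality. The minimal Python sketch below checks both rules; it assumes a single linear ordering of levels and ignores compartments and the strong star property.

```python
# Illustrative linear ordering of security levels (assumption).
LEVELS = {"Unclassified": 0, "Confidential": 1, "Secret": 2, "Top Secret": 3}

def can_read(subject_level: str, object_level: str) -> bool:
    # Simple security property: no read up.
    return LEVELS[subject_level] >= LEVELS[object_level]

def can_write(subject_level: str, object_level: str) -> bool:
    # *-property (star property): no write down.
    return LEVELS[subject_level] <= LEVELS[object_level]

print(can_read("Secret", "Top Secret"))     # False: reading up is denied
print(can_read("Secret", "Confidential"))   # True
print(can_write("Secret", "Confidential"))  # False: writing down is denied
```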

Which of the following biometric devices offers the LOWEST CER?

A. Keystroke dynamics

B. Voice verification

C. Iris scan

D. Fingerprint

Whose role is it to assign a classification level to information?

A. Security Administrator

B. User

C. Owner

D. Auditor

What can be defined as a list of subjects along with their access rights that are authorized to access a specific object?

A. A capability table

B. An access control list

C. An access control matrix

D. A role-based matrix
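An access control matrix has subjects as rows and objects as columns. An access control list is one column (every subject and its rights on a single object), while a capability table is one row (every object a single subject may access). The Python sketch below derives both from a small matrix; the subjects, objects, rights, and helper names are hypothetical.

```python
# Hypothetical access control matrix: subject -> object -> set of rights.
matrix = {
    "alice": {"payroll.db": {"read", "write"}, "report.txt": {"read"}},
    "bob":   {"report.txt": {"read", "write"}},
    "carol": {"payroll.db": {"read"}},
}

def access_control_list(obj):
    """Column of the matrix: every subject with its rights on one object."""
    return {subj: rights[obj] for subj, rights in matrix.items() if obj in rights}

def capability_table(subj):
    """Row of the matrix: every object one subject can access, with rights."""
    return matrix.get(subj, {})

print(access_control_list("payroll.db"))
print(capability_table("bob"))
```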

Which of the following elements of telecommunications is not used in assuring confidentiality?

A. Network security protocols

B. Network authentication services

C. Data encryption services

D. Passwords

Answer: D

Which of the following is true of two-factor authentication?

A. It uses the RSA public-key signature based on integers with large prime factors.

B. It requires two measurements of hand geometry.

C. It does not use single sign-on technology.

D. It relies on two independent proofs of identity.

Which of the following is the act of performing tests and evaluations of a system's security level to determine whether it complies with the design specifications and security requirements?

A. Validation

B. Verification

C. Assessment

D. Accuracy

Which of the following security modes of operation involves the highest risk?

A. Compartmented Security Mode

B. Multilevel Security Mode

C. System-High Security Mode

D. Dedicated Security Mode

A potential problem related to the physical installation of an iris scanner, when the iris pattern is used in a biometric system, is:

A. concern that the laser beam may cause eye damage.

B. the iris pattern changes as a person grows older.

C. there is a relatively high rate of false accepts.

D. the optical unit must be positioned so that the sun does not shine into the aperture.

Which of the following is not one of the three goals of integrity addressed by the Clark-Wilson model?

A. Prevention of the modification of information by unauthorized users.

B. Prevention of the unauthorized or unintentional modification of information by authorized users.

C. Preservation of the internal and external consistency.

D. Prevention of the modification of information by authorized users.

What is the term for verifying that a user's claimed identity is valid, which is usually implemented through a user password at log-on time?

A. Authentication

B. Identification

C. Integrity

D. Confidentiality

How are memory cards and smart cards different?

A. Memory cards normally hold more memory than smart cards

B. Smart cards provide two-factor authentication whereas memory cards don’t

C. Memory cards have no processing power

D. Only smart cards can be used for ATM cards

Which of the following is most appropriate to notify an internal user that session monitoring is being conducted?

A. Logon Banners

B. Wall poster

C. Employee Handbook

D. Written agreement

Answer: D

In the context of network enumeration by an outside attacker and possible Distributed Denial of Service (DDoS) attacks, which of the following firewall rules is not appropriate to protect an organization's internal network?

A. Allow echo reply outbound

B. Allow echo request outbound

C. Drop echo request inbound

D. Allow echo reply inbound

Which of the following statements about the differences between PPTP and L2TP is NOT true?

A. PPTP can run only on top of IP networks.

B. PPTP is an encryption protocol and L2TP is not.

C. L2TP works well with all firewalls and network devices that perform NAT.

D. L2TP supports AAA servers

Answer: C

External consistency ensures that the data stored in the database is:

A. inconsistent with the real world.

B. consistent when sent from one system to another.

C. consistent with the logical world.

D. consistent with the real world.

Which of the following control pairings includes organizational policies and procedures, pre-employment background checks, strict hiring practices, employment agreements, employee termination procedures, vacation scheduling, labeling of sensitive materials, increased supervision, security awareness training, behavior awareness, and sign-up procedures to obtain access to information systems and networks?

A. Preventive/Administrative Pairing

B. Preventive/Technical Pairing

C. Preventive/Physical Pairing

D. Detective/Administrative Pairing

Smart cards are an example of which type of control?

A. Detective control

B. Administrative control

C. Technical control

D. Physical control

In Rule-Based Access Control (RuBAC), access is determined by rules. Within which category of access control would such rules fit?

A. Discretionary Access Control (DAC)

B. Mandatory Access control (MAC)

C. Non-Discretionary Access Control (NDAC)

D. Lattice-based Access control

Answer: C

Secure Shell (SSH-2) supports authentication, compression, confidentiality, and integrity. SSH is commonly used as a secure alternative to all of the following protocols except:

A. telnet

B. rlogin

C. RSH

D. HTTPS

Which TCSEC level is labeled Controlled Access Protection?

A. C1

B. C2

C. C3

D. B1

How would an IP spoofing attack be best classified?

A. Session hijacking attack

B. Passive attack

C. Fragmentation attack

D. Sniffing attack

Answer: A

Which of the following is less likely to be included in the change control sub-phase of the maintenance phase of a software product?

A. Estimating the cost of the changes requested

B. Recreating and analyzing the problem

C. Determining the interface that is presented to the user

D. Establishing the priorities of requests

Which of the following binds a subject name to a public key value?

A. A public-key certificate

B. A public key infrastructure

C. A secret key infrastructure

D. A private key certificate

Answer: A

Which of the following is NOT a countermeasure to traffic analysis?

A. Padding messages.

B. Eavesdropping.

C. Sending noise.

D. Faraday cage

Answer: B
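Traffic analysis infers information from message sizes, timing, and frequency rather than content, so padding messages to a uniform size is one countermeasure (sending noise is another). The Python sketch below pads every message into a single fixed-size frame. `FRAME_SIZE` and the 2-byte length prefix are hypothetical choices, and the sketch assumes each frame is encrypted before transmission; otherwise the length prefix itself would reveal the real size.

```python
import secrets

FRAME_SIZE = 256  # hypothetical fixed frame size in bytes

def pad_to_frame(message: bytes) -> bytes:
    """Pad a message into one fixed-size frame so its true length is hidden.

    The first 2 bytes record the real length so the receiver can strip padding.
    """
    if len(message) > FRAME_SIZE - 2:
        raise ValueError("message too long for a single frame")
    header = len(message).to_bytes(2, "big")
    padding = secrets.token_bytes(FRAME_SIZE - 2 - len(message))  # random filler
    return header + message + padding

def unpad(frame: bytes) -> bytes:
    """Recover the original message using the length prefix."""
    length = int.from_bytes(frame[:2], "big")
    return frame[2:2 + length]

short, long_ = pad_to_frame(b"ok"), pad_to_frame(b"transfer complete, 42 records")
print(len(short), len(long_))  # 256 256
```

An observer of the (encrypted) frames sees identical sizes for both messages.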

Which of the following statements pertaining to access control is false?

A. Users should only access data on a need-to-know basis.

B. If access is not explicitly denied, it should be implicitly allowed.

C. Access rights should be granted based on the level of trust a company has in a subject.

D. Roles can be an efficient way to assign rights to a type of user who performs certain tasks.

Which one of the following statements about the advantages and disadvantages of network-based intrusion detection systems is true?

A. Network-based IDSs are not vulnerable to attacks.

B. Network-based IDSs are well suited for modern switch-based networks.

C. Most network-based IDSs can automatically indicate whether or not an attack was successful.

D. The deployment of network-based IDSs has little impact upon an existing network.

Access Full SSCP Dump Free

Looking for even more practice questions? The complete SSCP Dump Free collection offers hundreds of questions across all exam objectives.

We regularly update our content to ensure accuracy and relevance, so be sure to check back for new material.

Begin your certification journey today with our SSCP dump free questions and get one step closer to exam success!