Google Professional Cloud Developer Mock Test Free – 50 Realistic Questions to Prepare with Confidence.

Getting ready for your Google Professional Cloud Developer certification exam? Start your preparation the smart way with our Google Professional Cloud Developer Mock Test Free – a carefully crafted set of 50 realistic, exam-style questions to help you practice effectively and boost your confidence.

Using a free mock test for the Google Professional Cloud Developer exam is one of the best ways to:

- Familiarize yourself with the actual exam format and question style

- Identify areas where you need more review

- Strengthen your time management and test-taking strategy

Below, you will find 50 free questions from our Google Professional Cloud Developer Mock Test Free resource. These questions are structured to reflect the real exam’s difficulty and content areas, helping you assess your readiness accurately.

You have an application running in a production Google Kubernetes Engine (GKE) cluster. You use Cloud Deploy to automatically deploy your application to your production GKE cluster. As part of your development process, you are planning to make frequent changes to the application’s source code and need to select the tools to test the changes before pushing them to your remote source code repository. Your toolset must meet the following requirements:

- Test frequent local changes automatically.

- Local deployment emulates production deployment.

Which tools should you use to test building and running a container on your laptop using minimal resources?

A. Docker Compose and dockerd

B. Terraform and kubeadm

C. Minikube and Skaffold

D. kaniko and Tekton

You are deploying your applications on Compute Engine. One of your Compute Engine instances failed to launch. What should you do? (Choose two.)

A. Determine whether your file system is corrupted.

B. Access Compute Engine as a different SSH user.

C. Troubleshoot firewall rules or routes on an instance.

D. Check whether your instance boot disk is completely full.

E. Check whether network traffic to or from your instance is being dropped.

You are building a new API. You want to minimize the cost of storing and reduce the latency of serving images. Which architecture should you use?

A. App Engine backed by Cloud Storage

B. Compute Engine backed by Persistent Disk

C. Transfer Appliance backed by Cloud Filestore

D. Cloud Content Delivery Network (CDN) backed by Cloud Storage

You are using Cloud Build to promote a Docker image to Development, Test, and Production environments. You need to ensure that the same Docker image is deployed to each of these environments. How should you identify the Docker image in your build?

A. Use the latest Docker image tag.

B. Use a unique Docker image name.

C. Use the digest of the Docker image.

D. Use a semantic version Docker image tag.
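
As background for the tag and digest options above: a tag is a name that can be moved to another image, while a digest is derived from the image content itself. The following is a minimal sketch of that idea, not the implementation used by Docker or Cloud Build. The manifest bytes, the helper names, and the repository path are hypothetical.

```python
import hashlib


def manifest_digest(manifest_bytes: bytes) -> str:
    """Return a content digest in the `sha256:<hex>` form used by container registries."""
    return "sha256:" + hashlib.sha256(manifest_bytes).hexdigest()


def image_ref_by_digest(repository: str, digest: str) -> str:
    """Build an image reference of the form `repository@digest`."""
    return f"{repository}@{digest}"


# Hypothetical manifest contents, used only for illustration.
manifest_v1 = b'{"layers": ["a", "b"]}'
manifest_v2 = b'{"layers": ["a", "c"]}'

# Hypothetical Artifact Registry path.
REPOSITORY = "us-docker.pkg.dev/example-project/example-repo/app"

ref_v1 = image_ref_by_digest(REPOSITORY, manifest_digest(manifest_v1))
ref_v2 = image_ref_by_digest(REPOSITORY, manifest_digest(manifest_v2))

# Different content always yields a different reference, whereas a tag such as
# "latest" could point at either image over time.
print(ref_v1 != ref_v2)
```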

Your team is building an application for a financial institution. The application's frontend runs on Compute Engine, and the data resides in Cloud SQL and one Cloud Storage bucket. The application will collect data containing PII, which will be stored in the Cloud SQL database and the Cloud Storage bucket. You need to secure the PII data. What should you do?

A. 1. Create the relevant firewall rules to allow only the frontend to communicate with the Cloud SQL database. 2. Using IAM, allow only the frontend service account to access the Cloud Storage bucket.

B. 1. Create the relevant firewall rules to allow only the frontend to communicate with the Cloud SQL database. 2. Enable private access to allow the frontend to access the Cloud Storage bucket privately.

C. 1. Configure a private IP address for Cloud SQL. 2. Use VPC-SC to create a service perimeter. 3. Add the Cloud SQL database and the Cloud Storage bucket to the same service perimeter.

D. 1. Configure a private IP address for Cloud SQL. 2. Use VPC-SC to create a service perimeter. 3. Add the Cloud SQL database and the Cloud Storage bucket to different service perimeters.

Your team develops services that run on Google Cloud. You need to build a data processing service and will use Cloud Functions. The data to be processed by the function is sensitive. You need to ensure that invocations can only happen from authorized services and follow Google-recommended best practices for securing functions. What should you do?

A. Enable Identity-Aware Proxy in your project. Secure function access using its permissions.

B. Create a service account with the Cloud Functions Viewer role. Use that service account to invoke the function.

C. Create a service account with the Cloud Functions Invoker role. Use that service account to invoke the function.

D. Create an OAuth 2.0 client ID for your calling service in the same project as the function you want to secure. Use those credentials to invoke the function.

You are a lead developer working on a new retail system that runs on Cloud Run and Firestore in Datastore mode. A web UI requirement is for the system to display a list of available products when users access the system and for the user to be able to browse through all products. You have implemented this requirement in the minimum viable product (MVP) phase by returning a list of all available products stored in Firestore. A few months after go-live, you notice that Cloud Run instances are terminated with HTTP 500: Container instances are exceeding memory limits errors during busy times. This error coincides with spikes in the number of Datastore entity reads. You need to prevent Cloud Run from crashing and decrease the number of Datastore entity reads. You want to use a solution that optimizes system performance. What should you do?

A. Modify the query that returns the product list using integer offsets.

B. Modify the query that returns the product list using limits.

C. Modify the Cloud Run configuration to increase the memory limits.

D. Modify the query that returns the product list using cursors.
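
To make the paging options above concrete, here is a minimal sketch of cursor-style pagination over an in-memory list. It is an illustration only: `PRODUCTS` and `fetch_page` are hypothetical stand-ins, not the Datastore client API, and a real cursor is an opaque token rather than an integer index.

```python
from typing import List, Optional, Tuple

# Hypothetical stand-in for the stored product entities.
PRODUCTS = [f"product-{i:03d}" for i in range(10)]


def fetch_page(cursor: Optional[int], limit: int) -> Tuple[List[str], Optional[int]]:
    """Return one page of results plus a cursor for the next page (None when done)."""
    start = cursor or 0
    page = PRODUCTS[start:start + limit]
    more = start + limit < len(PRODUCTS)
    return page, (start + limit if more else None)


def browse_all(limit: int) -> List[List[str]]:
    """Walk through every page, holding only one page of results per request."""
    pages = []
    cursor = None
    while True:
        page, cursor = fetch_page(cursor, limit)
        pages.append(page)
        if cursor is None:
            break
    return pages


for page in browse_all(limit=4):
    print(page)
```

Each call resumes from where the previous one stopped, so a single request never needs to load the full product list into memory.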

You are in the final stage of migrating an on-premises data center to Google Cloud. You are quickly approaching your deadline, and discover that a web API is running on a server slated for decommissioning. You need to recommend a solution to modernize this API while migrating to Google Cloud. The modernized web API must meet the following requirements:

- Autoscales during high traffic periods at the end of each month

- Written in Python 3.x

- Developers must be able to rapidly deploy new versions in response to frequent code changes

You want to minimize cost, effort, and operational overhead of this migration. What should you do?

A. Modernize and deploy the code on App Engine flexible environment.

B. Modernize and deploy the code on App Engine standard environment.

C. Deploy the modernized application to an n1-standard-1 Compute Engine instance.

D. Ask the development team to re-write the application to run as a Docker container on Google Kubernetes Engine.

Case study - This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study. At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study - To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Company Overview - HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement - We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10000 miles away from each other.

Solution Concept - HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data.

Existing Technical Environment - HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global scale applications. Their existing technical environment is as follows:

- Existing APIs run on Compute Engine virtual machine instances hosted in GCP.

- State is stored in a single instance MySQL database in GCP.

- Data is exported to an on-premises Teradata/Vertica data warehouse.

- Data analytics is performed in an on-premises Hadoop environment.

- The application has no logging.

- There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements - HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:

- Expand availability of the application to new regions.

- Increase the number of concurrent users that can be supported.

- Ensure a consistent experience for users when they travel to different regions.

- Obtain user activity metrics to better understand how to monetize their product.

- Ensure compliance with regulations in the new regions (for example, GDPR).

- Reduce infrastructure management time and cost.

- Adopt the Google-recommended practices for cloud computing.

Technical Requirements -

- The application and backend must provide usage metrics and monitoring.

- APIs require strong authentication and authorization.

- Logging must be increased, and data should be stored in a cloud analytics platform.

- Move to serverless architecture to facilitate elastic scaling.

- Provide authorized access to internal apps in a secure manner.

HipLocal wants to improve the resilience of their MySQL deployment, while also meeting their business and technical requirements. Which configuration should they choose?

A. Use the current single instance MySQL on Compute Engine and several read-only MySQL servers on Compute Engine.

B. Use the current single instance MySQL on Compute Engine, and replicate the data to Cloud SQL in an external master configuration.

C. Replace the current single instance MySQL instance with Cloud SQL, and configure high availability.

D. Replace the current single instance MySQL instance with Cloud SQL, and Google provides redundancy without further configuration.

You are developing an application that will store and access sensitive unstructured data objects in a Cloud Storage bucket. To comply with regulatory requirements, you need to ensure that all data objects are available for at least 7 years after their initial creation. Objects created more than 3 years ago are accessed very infrequently (less than once a year). You need to configure object storage while ensuring that storage cost is optimized. What should you do? (Choose two.)

A. Set a retention policy on the bucket with a period of 7 years.

B. Use IAM Conditions to provide access to objects 7 years after the object creation date.

C. Enable Object Versioning to prevent objects from being accidentally deleted for 7 years after object creation.

D. Create an object lifecycle policy on the bucket that moves objects from Standard Storage to Archive Storage after 3 years.

E. Implement a Cloud Function that checks the age of each object in the bucket and moves the objects older than 3 years to a second bucket with the Archive Storage class. Use Cloud Scheduler to trigger the Cloud Function on a daily schedule.
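
For reference, an object lifecycle rule like the one described in the options above can be expressed as a small JSON document. The sketch below builds one in Python. It assumes the commonly documented lifecycle configuration shape (`rule`, `action`, `condition`, with `age` in days) and approximates 3 years as 1095 days, ignoring leap days.

```python
import json

DAYS_PER_YEAR = 365  # approximation; leap days are ignored

# Lifecycle configuration: move Standard objects to Archive once they are
# roughly 3 years old.
lifecycle_config = {
    "rule": [
        {
            "action": {"type": "SetStorageClass", "storageClass": "ARCHIVE"},
            "condition": {
                "age": 3 * DAYS_PER_YEAR,
                "matchesStorageClass": ["STANDARD"],
            },
        }
    ]
}

# The printed JSON could be saved to a file and applied to a bucket with the
# tooling of your choice.
print(json.dumps(lifecycle_config, indent=2))
```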

You are developing an ecommerce web application that uses App Engine standard environment and Memorystore for Redis. When a user logs into the app, the application caches the user's information (e.g., session, name, address, preferences), which is stored for quick retrieval during checkout. While testing your application in a browser, you get a 502 Bad Gateway error. You have determined that the application is not connecting to Memorystore. What is the reason for this error?

A. Your Memorystore for Redis instance was deployed without a public IP address.

B. You configured your Serverless VPC Access connector in a different region than your App Engine instance.

C. The firewall rule allowing a connection between App Engine and Memorystore was removed during an infrastructure update by the DevOps team.

D. You configured your application to use a Serverless VPC Access connector on a different subnet in a different availability zone than your App Engine instance.

You are building an application that uses a distributed microservices architecture. You want to measure the performance and system resource utilization in one of the microservices written in Java. What should you do?

A. Instrument the service with Cloud Profiler to measure CPU utilization and method-level execution times in the service.

B. Instrument the service with Debugger to investigate service errors.

C. Instrument the service with Cloud Trace to measure request latency.

D. Instrument the service with OpenCensus to measure service latency, and write custom metrics to Cloud Monitoring.

You support an application that uses the Cloud Storage API. You review the logs and discover multiple HTTP 503 Service Unavailable error responses from the API. Your application logs the error and does not take any further action. You want to implement Google-recommended retry logic to improve success rates. Which approach should you take?

A. Retry the failures in batch after a set number of failures is logged.

B. Retry each failure at a set time interval up to a maximum number of times.

C. Retry each failure at increasing time intervals up to a maximum number of tries.

D. Retry each failure at decreasing time intervals up to a maximum number of tries.
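
Retrying at increasing intervals is usually called exponential backoff. The following is a minimal sketch of that pattern under stated assumptions: `TransientError` is a hypothetical stand-in for a retryable failure such as an HTTP 503, and the delay values are illustrative rather than recommended settings.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical stand-in for a retryable failure such as HTTP 503."""


def call_with_backoff(
    operation: Callable[[], T],
    max_tries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 32.0,
    sleep: Callable[[float], None] = time.sleep,
    jitter: Callable[[], float] = random.random,
) -> T:
    """Call `operation`, waiting longer after each transient failure."""
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    for attempt in range(max_tries):
        try:
            return operation()
        except TransientError:
            if attempt == max_tries - 1:
                raise  # out of tries: surface the last error
            # Delay doubles on every attempt, plus a little random jitter,
            # and is capped at max_delay.
            delay = min(base_delay * (2 ** attempt) + jitter(), max_delay)
            sleep(delay)
    raise AssertionError("unreachable")
```

The `sleep` and `jitter` parameters are injectable so the function can be exercised without real waiting.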

You have recently instrumented a new application with OpenTelemetry, and you want to check the latency of your application requests in Trace. You want to ensure that a specific request is always traced. What should you do?

A. Wait 10 minutes, then verify that Trace captures those types of requests automatically.

B. Write a custom script that sends this type of request repeatedly from your dev project.

C. Use the Trace API to apply custom attributes to the trace.

D. Add the X-Cloud-Trace-Context header to the request with the appropriate parameters.
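
For reference, the `X-Cloud-Trace-Context` header value has the form `TRACE_ID/SPAN_ID;o=OPTIONS`, where the trace ID is a 32-character hexadecimal string, the span ID is a decimal number, and `o=1` asks for the request to be traced. The helper below is a minimal sketch that only formats the value. The function name is hypothetical, and whether a given service honors the header depends on its configuration.

```python
import secrets
from typing import Optional


def trace_context_header(
    trace_id: Optional[str] = None,
    span_id: Optional[int] = None,
    sampled: bool = True,
) -> str:
    """Format a value for the X-Cloud-Trace-Context request header."""
    if trace_id is None:
        trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex characters
    if len(trace_id) != 32 or any(c not in "0123456789abcdefABCDEF" for c in trace_id):
        raise ValueError("trace_id must be a 32-character hexadecimal string")
    if span_id is None:
        span_id = secrets.randbits(64)  # unsigned 64-bit span ID
    return f"{trace_id}/{span_id};o={1 if sampled else 0}"


headers = {"X-Cloud-Trace-Context": trace_context_header()}
print(headers)
```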

You are developing a web application that contains private images and videos stored in a Cloud Storage bucket. Your users are anonymous and do not have Google Accounts. You want to use your application-specific logic to control access to the images and videos. How should you configure access?

A. Cache each web application user’s IP address to create a named IP table using Google Cloud Armor. Create a Google Cloud Armor security policy that allows users to access the backend bucket.

B. Grant the Storage Object Viewer IAM role to allUsers. Allow users to access the bucket after authenticating through your web application.

C. Configure Identity-Aware Proxy (IAP) to authenticate users into the web application. Allow users to access the bucket after authenticating through IAP.

D. Generate a signed URL that grants read access to the bucket. Allow users to access the URL after authenticating through your web application.

You are developing a Java Web Server that needs to interact with Google Cloud services via the Google Cloud API on the user's behalf. Users should be able to authenticate to the Google Cloud API using their Google Cloud identities. Which workflow should you implement in your web application?

A. 1. When a user arrives at your application, prompt them for their Google username and password. 2. Store an SHA password hash in your application’s database along with the user’s username. 3. The application authenticates to the Google Cloud API using HTTPS requests with the user’s username and password hash in the Authorization request header.

B. 1. When a user arrives at your application, prompt them for their Google username and password. 2. Forward the user’s username and password in an HTTPS request to the Google Cloud authorization server, and request an access token. 3. The Google server validates the user’s credentials and returns an access token to the application. 4. The application uses the access token to call the Google Cloud API.

C. 1. When a user arrives at your application, route them to a Google Cloud consent screen with a list of requested permissions that prompts the user to sign in with SSO to their Google Account. 2. After the user signs in and provides consent, your application receives an authorization code from a Google server. 3. The Google server returns the authorization code to the user, which is stored in the browser’s cookies. 4. The user authenticates to the Google Cloud API using the authorization code in the cookie.

D. 1. When a user arrives at your application, route them to a Google Cloud consent screen with a list of requested permissions that prompts the user to sign in with SSO to their Google Account. 2. After the user signs in and provides consent, your application receives an authorization code from a Google server. 3. The application requests a Google server to exchange the authorization code for an access token. 4. The Google server responds with the access token that is used by the application to call the Google Cloud API.
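
The consent-screen and code-exchange steps mentioned in the options above can be sketched as two small helpers. The sketch is written in Python for brevity even though the scenario describes a Java server, and it sends no network requests. The client ID, client secret, redirect URI, and helper names are hypothetical; the endpoint URLs are Google's published OAuth 2.0 endpoints as best I know them.

```python
from typing import Dict, List
from urllib.parse import urlencode

AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"
TOKEN_ENDPOINT = "https://oauth2.googleapis.com/token"


def build_authorization_url(
    client_id: str, redirect_uri: str, scopes: List[str], state: str
) -> str:
    """URL the user's browser is sent to for sign-in and consent."""
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",  # ask for an authorization code
        "scope": " ".join(scopes),
        "state": state,  # echoed back so the app can detect forged callbacks
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


def build_token_request_body(
    client_id: str, client_secret: str, code: str, redirect_uri: str
) -> Dict[str, str]:
    """Form fields the server would POST to TOKEN_ENDPOINT to obtain an access token."""
    return {
        "grant_type": "authorization_code",
        "code": code,
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,
    }


# Hypothetical values, for illustration only.
url = build_authorization_url(
    "example-client-id",
    "https://app.example.com/oauth/callback",
    ["https://www.googleapis.com/auth/cloud-platform"],
    state="random-state-value",
)
print(url)
```

In this flow the authorization code and client secret stay on the server; only the resulting access token is used to call the API.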

Your website is deployed on Compute Engine. Your marketing team wants to test conversion rates between 3 different website designs. Which approach should you use?

A. Deploy the website on App Engine and use traffic splitting.

B. Deploy the website on App Engine as three separate services.

C. Deploy the website on Cloud Functions and use traffic splitting.

D. Deploy the website on Cloud Functions as three separate functions.

You migrated some of your applications to Google Cloud. You are using a legacy monitoring platform deployed on-premises for both on-premises and cloud-deployed applications. You discover that your notification system is responding slowly to time-critical problems in the cloud applications. What should you do?

A. Replace your monitoring platform with Cloud Monitoring.

B. Install the Cloud Monitoring agent on your Compute Engine instances.

C. Migrate some traffic back to your old platform. Perform A/B testing on the two platforms concurrently.

D. Use Cloud Logging and Cloud Monitoring to capture logs, monitor, and send alerts. Send them to your existing platform.

You have an application in production. It is deployed on Compute Engine virtual machine instances controlled by a managed instance group. Traffic is routed to the instances via an HTTP(S) load balancer. Your users are unable to access your application. You want to implement a monitoring technique to alert you when the application is unavailable. Which technique should you choose?

A. Smoke tests

B. Stackdriver uptime checks

C. Cloud Load Balancing – health checks

D. Managed instance group – health checks

You are using Cloud Run to host a web application. You need to securely obtain the application project ID and region where the application is running and display this information to users. You want to use the most performant approach. What should you do?

A. Use HTTP requests to query the available metadata server at the http://metadata.google.internal/ endpoint with the Metadata-Flavor: Google header.

B. In the Google Cloud console, navigate to the Project Dashboard and gather configuration details. Navigate to the Cloud Run “Variables & Secrets” tab, and add the desired environment variables in Key:Value format.

C. In the Google Cloud console, navigate to the Project Dashboard and gather configuration details. Write the application configuration information to Cloud Run’s in-memory container filesystem.

D. Make an API call to the Cloud Asset Inventory API from the application and format the request to include instance metadata.
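
For reference, a metadata server query of the kind mentioned in option A can be sketched with the standard library alone. The paths `project/project-id` and `instance/region` and the `projects/NUMBER/regions/REGION` response shape are assumptions based on the documented Cloud Run metadata server. The helper names are hypothetical, and `fetch_metadata` only works from inside a Google Cloud runtime.

```python
import urllib.request

METADATA_BASE = "http://metadata.google.internal/computeMetadata/v1"


def metadata_request(path: str) -> urllib.request.Request:
    """Build a metadata server request carrying the required header."""
    return urllib.request.Request(
        f"{METADATA_BASE}/{path}",
        headers={"Metadata-Flavor": "Google"},
    )


def region_from_metadata(value: str) -> str:
    """Extract the region from a value like 'projects/123456/regions/us-central1'."""
    return value.rsplit("/", 1)[-1]


def fetch_metadata(path: str, timeout: float = 2.0) -> str:
    """Send the request; this only succeeds inside a Google Cloud runtime."""
    with urllib.request.urlopen(metadata_request(path), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# Offline illustration of the parsing step with a made-up response value.
print(region_from_metadata("projects/123456/regions/us-central1"))
```

Because these values do not change while an instance is running, an application could fetch them once at startup and cache the result.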

Your team is developing a Cloud Function triggered by Cloud Storage events. You want to accelerate testing and development of your Cloud Function while following Google-recommended best practices. What should you do?

A. Create a new Cloud Function that is triggered when Cloud Audit Logs detects the cloudfunctions.functions.sourceCodeSet operation in the original Cloud Function. Send mock requests to the new function to evaluate the functionality.

B. Make a copy of the Cloud Function, and rewrite the code to be HTTP-triggered. Edit and test the new version by triggering the HTTP endpoint. Send mock requests to the new function to evaluate the functionality.

C. Install the Functions Frameworks library, and configure the Cloud Function on localhost. Make a copy of the function, and make edits to the new version. Test the new version using curl.

D. Make a copy of the Cloud Function in the Google Cloud console. Use the Cloud console’s in-line editor to make source code changes to the new function. Modify your web application to call the new function, and test the new version in production.

You are monitoring a web application that is written in Go and deployed in Google Kubernetes Engine. You notice an increase in CPU and memory utilization. You need to determine which source code is consuming the most CPU and memory resources. What should you do?

A. Download, install, and start the Snapshot Debugger agent in your VM. Take debug snapshots of the functions that take the longest time. Review the call stack frame, and identify the local variables at that level in the stack.

B. Import the Cloud Profiler package into your application, and initialize the Profiler agent. Review the generated flame graph in the Google Cloud console to identify time-intensive functions.

C. Import OpenTelemetry and Trace export packages into your application, and create the trace provider. Review the latency data for your application on the Trace overview page, and identify where bottlenecks are occurring.

D. Create a Cloud Logging query that gathers the web application’s logs. Write a Python script that calculates the difference between the timestamps from the beginning and the end of the application’s longest functions to identify time-intensive functions.

You have an on-premises application that authenticates to the Cloud Storage API using a user-managed service account with a user-managed key. The application connects to Cloud Storage using Private Google Access over a Dedicated Interconnect link. You discover that requests from the application to access objects in the Cloud Storage bucket are failing with a 403 Permission Denied error code. What is the likely cause of this issue?

A. The folder structure inside the bucket and object paths have changed.

B. The permissions of the service account’s predefined role have changed.

C. The service account key has been rotated but not updated on the application server.

D. The Interconnect link from the on-premises data center to Google Cloud is experiencing a temporary outage.

You have written a Cloud Function that accesses other Google Cloud resources. You want to secure the environment using the principle of least privilege. What should you do?

A. Create a new service account that has Editor authority to access the resources. The deployer is given permission to get the access token.

B. Create a new service account that has a custom IAM role to access the resources. The deployer is given permission to get the access token.

C. Create a new service account that has Editor authority to access the resources. The deployer is given permission to act as the new service account.

D. Create a new service account that has a custom IAM role to access the resources. The deployer is given permission to act as the new service account.

You want to use the Stackdriver Logging Agent to send an application's log file to Stackdriver from a Compute Engine virtual machine instance. After installing the Stackdriver Logging Agent, what should you do first?

A. Enable the Error Reporting API on the project.

B. Grant the instance full access to all Cloud APIs.

C. Configure the application log file as a custom source.

D. Create a Stackdriver Logs Export Sink with a filter that matches the application’s log entries.

Case study - This question refers to the HipLocal case study presented in full earlier in this question set. Which service should HipLocal use to enable access to internal apps?

A. Cloud VPN

B. Cloud Armor

C. Virtual Private Cloud

D. Cloud Identity-Aware Proxy

You are developing an ecommerce application that stores customer, order, and inventory data as relational tables inside Cloud Spanner. During a recent load test, you discover that Spanner performance is not scaling linearly as expected. Which of the following is the cause?

A. The use of 64-bit numeric types for 32-bit numbers.

B. The use of the STRING data type for arbitrary-precision values.

C. The use of Version 1 UUIDs as primary keys that increase monotonically.

D. The use of LIKE instead of STARTS_WITH keyword for parameterized SQL queries.

You recently migrated a monolithic application to Google Cloud by breaking it down into microservices. One of the microservices is deployed using Cloud Functions. As you modernize the application, you make a change to the API of the service that is backward-incompatible. You need to support both existing callers who use the original API and new callers who use the new API. What should you do?

A. Leave the original Cloud Function as-is and deploy a second Cloud Function with the new API. Use a load balancer to distribute calls between the versions.

B. Leave the original Cloud Function as-is and deploy a second Cloud Function that includes only the changed API. Calls are automatically routed to the correct function.

C. Leave the original Cloud Function as-is and deploy a second Cloud Function with the new API. Use Cloud Endpoints to provide an API gateway that exposes a versioned API.

D. Re-deploy the Cloud Function after making code changes to support the new API. Requests for both versions of the API are fulfilled based on a version identifier included in the call.

You are load testing your server application. During the first 30 seconds, you observe that a previously inactive Cloud Storage bucket is now servicing 2000 write requests per second and 7500 read requests per second. Your application is now receiving intermittent 5xx and 429 HTTP responses from the Cloud Storage JSON API as the demand escalates. You want to decrease the failed responses from the Cloud Storage API. What should you do?

A. Distribute the uploads across a large number of individual storage buckets.

B. Use the XML API instead of the JSON API for interfacing with Cloud Storage.

C. Pass the HTTP response codes back to clients that are invoking the uploads from your application.

D. Limit the upload rate from your application clients so that the dormant bucket’s peak request rate is reached more gradually.
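
For background on handling 429 and 5xx responses: a common client-side technique is truncated exponential backoff with jitter, which spaces out retries instead of repeating them immediately. The sketch below is illustrative only. `send` is a hypothetical zero-argument callable that returns an HTTP status code, and the attempt count and delay values are assumptions rather than recommended settings.

```python
import random
import time

RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def call_with_backoff(send, max_attempts=6, base_delay=1.0, max_delay=32.0,
                      sleep=time.sleep, rng=random.random):
    """Call send() until it returns a non-retryable status or attempts run out.

    `send` is a hypothetical callable returning an HTTP status code.
    `sleep` and `rng` are injectable so the logic can be tested without waiting.
    """
    status = None
    for attempt in range(max_attempts):
        status = send()
        if status not in RETRYABLE_STATUS:
            return status
        if attempt < max_attempts - 1:
            # Wait base_delay * 2^attempt (capped at max_delay) plus up to 1 s of jitter.
            delay = min(base_delay * 2 ** attempt, max_delay) + rng()
            sleep(delay)
    return status
```

Backoff reduces the number of failed calls a client sees, but it does not replace ramping up the request rate gradually on a bucket that was previously idle.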

You are writing from a Go application to a Cloud Spanner database. You want to optimize your application’s performance using Google-recommended best practices. What should you do?

A. Write to Cloud Spanner using Cloud Client Libraries.

B. Write to Cloud Spanner using Google API Client Libraries.

C. Write to Cloud Spanner using a custom gRPC client library.

D. Write to Cloud Spanner using a third-party HTTP client library.

You have decided to migrate your Compute Engine application to Google Kubernetes Engine. You need to build a container image and push it to Artifact Registry using Cloud Build. What should you do? (Choose two.)

A. Run gcloud builds submit in the directory that contains the application source code.

B. Run gcloud run deploy app-name --image gcr.io/$PROJECT_ID/app-name in the directory that contains the application source code.

C. Run gcloud container images add-tag gcr.io/$PROJECT_ID/app-name gcr.io/$PROJECT_ID/app-name:latest in the directory that contains the application source code.

D. In the application source directory, create a file named cloudbuild.yaml that contains the following contents:

E. In the application source directory, create a file named cloudbuild.yaml that contains the following contents:

You are developing a web application that will be accessible over both HTTP and HTTPS and will run on Compute Engine instances. On occasion, you will need to SSH from your remote laptop into one of the Compute Engine instances to conduct maintenance on the app. How should you configure the instances while following Google-recommended best practices?

A. Set up a backend with Compute Engine web server instances with a private IP address behind a TCP proxy load balancer.

B. Configure the firewall rules to allow all ingress traffic to connect to the Compute Engine web servers, with each server having a unique external IP address.

C. Configure Cloud Identity-Aware Proxy API for SSH access. Then configure the Compute Engine servers with private IP addresses behind an HTTP(S) load balancer for the application web traffic.

D. Set up a backend with Compute Engine web server instances with a private IP address behind an HTTP(S) load balancer. Set up a bastion host with a public IP address and open firewall ports. Connect to the web instances using the bastion host.

Case study - This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided. To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study. At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study - To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Company Overview - HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement - We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10000 miles away from each other.

Solution Concept - HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data.

Existing Technical Environment - HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global scale applications. Their existing technical environment is as follows:

* Existing APIs run on Compute Engine virtual machine instances hosted in GCP.
* State is stored in a single instance MySQL database in GCP.
* Data is exported to an on-premises Teradata/Vertica data warehouse.
* Data analytics is performed in an on-premises Hadoop environment.
* The application has no logging.
* There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements - HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:

* Expand availability of the application to new regions.
* Increase the number of concurrent users that can be supported.
* Ensure a consistent experience for users when they travel to different regions.
* Obtain user activity metrics to better understand how to monetize their product.
* Ensure compliance with regulations in the new regions (for example, GDPR).
* Reduce infrastructure management time and cost.
* Adopt the Google-recommended practices for cloud computing.

Technical Requirements -

* The application and backend must provide usage metrics and monitoring.
* APIs require strong authentication and authorization.
* Logging must be increased, and data should be stored in a cloud analytics platform.
* Move to serverless architecture to facilitate elastic scaling.
* Provide authorized access to internal apps in a secure manner.

Which service should HipLocal use for their public APIs?

A. Cloud Armor

B. Cloud Functions

C. Cloud Endpoints

D. Shielded Virtual Machines

You work on an application that relies on Cloud Spanner as its main datastore. New application features have occasionally caused performance regressions. You want to prevent performance issues by running an automated performance test with Cloud Build for each commit made. If multiple commits are made at the same time, the tests might run concurrently. What should you do?

A. Create a new project with a random name for every build. Load the required data. Delete the project after the test is run.

B. Create a new Cloud Spanner instance for every build. Load the required data. Delete the Cloud Spanner instance after the test is run.

C. Create a project with a Cloud Spanner instance and the required data. Adjust the Cloud Build build file to automatically restore the data to its previous state after the test is run.

D. Start the Cloud Spanner emulator locally. Load the required data. Shut down the emulator after the test is run.

You are deploying a microservices application to Google Kubernetes Engine (GKE) that will broadcast livestreams. You expect unpredictable traffic patterns and large variations in the number of concurrent users. Your application must meet the following requirements: • Scales automatically during popular events and maintains high availability • Is resilient in the event of hardware failures How should you configure the deployment parameters? (Choose two.)

A. Distribute your workload evenly using a multi-zonal node pool.

B. Distribute your workload evenly using multiple zonal node pools.

C. Use cluster autoscaler to resize the number of nodes in the node pool, and use a Horizontal Pod Autoscaler to scale the workload.

D. Create a managed instance group for Compute Engine with the cluster nodes. Configure autoscaling rules for the managed instance group.

E. Create alerting policies in Cloud Monitoring based on GKE CPU and memory utilization. Ask an on-duty engineer to scale the workload by executing a script when CPU and memory usage exceed predefined thresholds.

Your team is developing an ecommerce platform for your company. Users will log in to the website and add items to their shopping cart. Users will be automatically logged out after 30 minutes of inactivity. When users log back in, their shopping cart should be saved. How should you store users' session and shopping cart information while following Google-recommended best practices?

A. Store the session information in Pub/Sub, and store the shopping cart information in Cloud SQL.

B. Store the shopping cart information in a file on Cloud Storage where the filename is the SESSION ID.

C. Store the session and shopping cart information in a MySQL database running on multiple Compute Engine instances.

D. Store the session information in Memorystore for Redis or Memorystore for Memcached, and store the shopping cart information in Firestore.

Your team is developing a new application using a PostgreSQL database and Cloud Run. You are responsible for ensuring that all traffic is kept private on Google Cloud. You want to use managed services and follow Google-recommended best practices. What should you do?

A. 1. Enable Cloud SQL and Cloud Run in the same project. 2. Configure a private IP address for Cloud SQL. Enable private services access. 3. Create a Serverless VPC Access connector. 4. Configure Cloud Run to use the connector to connect to Cloud SQL.

B. 1. Install PostgreSQL on a Compute Engine virtual machine (VM), and enable Cloud Run in the same project. 2. Configure a private IP address for the VM. Enable private services access. 3. Create a Serverless VPC Access connector. 4. Configure Cloud Run to use the connector to connect to the VM hosting PostgreSQL.

C. 1. Use Cloud SQL and Cloud Run in different projects. 2. Configure a private IP address for Cloud SQL. Enable private services access. 3. Create a Serverless VPC Access connector. 4. Set up a VPN connection between the two projects. Configure Cloud Run to use the connector to connect to Cloud SQL.

D. 1. Install PostgreSQL on a Compute Engine VM, and enable Cloud Run in different projects. 2. Configure a private IP address for the VM. Enable private services access. 3. Create a Serverless VPC Access connector. 4. Set up a VPN connection between the two projects. Configure Cloud Run to use the connector to access the VM hosting PostgreSQL.

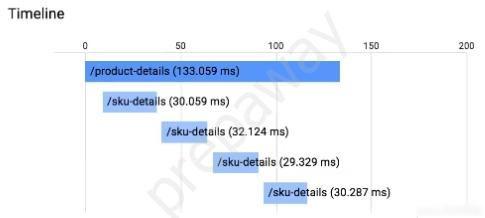

You have an application running in App Engine. Your application is instrumented with Stackdriver Trace. The /product-details request reports details about four known unique products at /sku-details as shown below. You want to reduce the time it takes for the request to complete. What should you do?

A. Increase the size of the instance class.

B. Change the Persistent Disk type to SSD.

C. Change /product-details to perform the requests in parallel.

D. Store the /sku-details information in a database, and replace the webservice call with a database query.
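
As background on the latency arithmetic in this scenario: when a handler makes several independent downstream calls one after another, the total time is roughly the sum of the calls, whereas issuing them concurrently brings it closer to the slowest single call. The sketch below illustrates this with a thread pool. `fetch_sku_details` is a hypothetical stand-in that only sleeps to simulate network latency, and the 0.2-second delay is an assumed value.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_sku_details(sku: str) -> dict:
    """Hypothetical stand-in for an HTTP call to /sku-details."""
    time.sleep(0.2)  # simulated network latency (assumed value)
    return {"sku": sku}


def product_details_sequential(skus):
    """Fetch each SKU one after another; latency adds up per call."""
    return [fetch_sku_details(s) for s in skus]


def product_details_parallel(skus):
    """Fetch all SKUs concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=len(skus)) as pool:
        return list(pool.map(fetch_sku_details, skus))
```

With four SKUs, the sequential version waits for four simulated calls in a row, while the parallel version waits for roughly one.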

You plan to make a simple HTML application available on the internet. This site keeps information about FAQs for your application. The application is static and contains images, HTML, CSS, and JavaScript. You want to make this application available on the internet with as few steps as possible. What should you do?

A. Upload your application to Cloud Storage.

B. Upload your application to an App Engine environment.

C. Create a Compute Engine instance with Apache web server installed. Configure Apache web server to host the application.

D. Containerize your application first. Deploy this container to Google Kubernetes Engine (GKE) and assign an external IP address to the GKE pod hosting the application.

You are building an API that will be used by Android and iOS apps. The API must: * Support HTTPS * Minimize bandwidth cost * Integrate easily with mobile apps Which API architecture should you use?

A. RESTful APIs

B. MQTT for APIs

C. gRPC-based APIs

D. SOAP-based APIs

You are designing a schema for a Cloud Spanner customer database. You want to store a phone number array field in a customer table. You also want to allow users to search customers by phone number. How should you design this schema?

A. Create a table named Customers. Add an Array field in a table that will hold phone numbers for the customer.

B. Create a table named Customers. Create a table named Phones. Add a CustomerId field in the Phones table to find the CustomerId from a phone number.

C. Create a table named Customers. Add an Array field in a table that will hold phone numbers for the customer. Create a secondary index on the Array field.

D. Create a table named Customers as a parent table. Create a table named Phones, and interleave this table into the Customer table. Create an index on the phone number field in the Phones table.

You are developing an application that will allow clients to download a file from your website for a specific period of time. How should you design the application to complete this task while following Google-recommended best practices?

A. Configure the application to send the file to the client as an email attachment.

B. Generate and assign a Cloud Storage-signed URL for the file. Make the URL available for the client to download.

C. Create a temporary Cloud Storage bucket with time expiration specified, and give download permissions to the bucket. Copy the file, and send it to the client.

D. Generate the HTTP cookies with time expiration specified. If the time is valid, copy the file from the Cloud Storage bucket, and make the file available for the client to download.

You are developing a new application that has the following design requirements: • Creation and changes to the application infrastructure are versioned and auditable. • The application and deployment infrastructure uses Google-managed services as much as possible. • The application runs on a serverless compute platform. How should you design the application's architecture?

A. 1. Store the application and infrastructure source code in a Git repository. 2. Use Cloud Build to deploy the application infrastructure with Terraform. 3. Deploy the application to a Cloud Function as a pipeline step.

B. 1. Deploy Jenkins from the Google Cloud Marketplace, and define a continuous integration pipeline in Jenkins. 2. Configure a pipeline step to pull the application source code from a Git repository. 3. Deploy the application source code to App Engine as a pipeline step.

C. 1. Create a continuous integration pipeline on Cloud Build, and configure the pipeline to deploy the application infrastructure using Deployment Manager templates. 2. Configure a pipeline step to create a container with the latest application source code. 3. Deploy the container to a Compute Engine instance as a pipeline step.

D. 1. Deploy the application infrastructure using gcloud commands. 2. Use Cloud Build to define a continuous integration pipeline for changes to the application source code. 3. Configure a pipeline step to pull the application source code from a Git repository, and create a containerized application. 4. Deploy the new container on Cloud Run as a pipeline step.

You are reviewing and updating your Cloud Build steps to adhere to best practices. Currently, your build steps include: 1. Pull the source code from a source repository. 2. Build a container image. 3. Upload the built image to Artifact Registry. You need to add a step to perform a vulnerability scan of the built container image, and you want the results of the scan to be available to your deployment pipeline running in Google Cloud. You want to minimize changes that could disrupt other teams’ processes. What should you do?

A. Enable Binary Authorization, and configure it to attest that no vulnerabilities exist in a container image.

B. Upload the built container images to your Docker Hub instance, and scan them for vulnerabilities.

C. Enable the Container Scanning API in Artifact Registry, and scan the built container images for vulnerabilities.

D. Add Artifact Registry to your Aqua Security instance, and scan the built container images for vulnerabilities.

You are running a containerized application on Google Kubernetes Engine. Your container images are stored in Container Registry. Your team uses CI/CD practices. You need to prevent the deployment of containers with known critical vulnerabilities. What should you do?

A. • Use Web Security Scanner to automatically crawl your application. • Review your application logs for scan results, and provide an attestation that the container is free of known critical vulnerabilities. • Use Binary Authorization to implement a policy that forces the attestation to be provided before the container is deployed.

B. • Use Web Security Scanner to automatically crawl your application. • Review the scan results in the scan details page in the Cloud Console, and provide an attestation that the container is free of known critical vulnerabilities. • Use Binary Authorization to implement a policy that forces the attestation to be provided before the container is deployed.

C. • Enable the Container Scanning API to perform vulnerability scanning. • Review vulnerability reporting in Container Registry in the Cloud Console, and provide an attestation that the container is free of known critical vulnerabilities. • Use Binary Authorization to implement a policy that forces the attestation to be provided before the container is deployed.

D. • Enable the Container Scanning API to perform vulnerability scanning. • Programmatically review vulnerability reporting through the Container Scanning API, and provide an attestation that the container is free of known critical vulnerabilities. • Use Binary Authorization to implement a policy that forces the attestation to be provided before the container is deployed.

The development teams in your company want to manage resources from their local environments. You have been asked to enable developer access to each team’s Google Cloud projects. You want to maximize efficiency while following Google-recommended best practices. What should you do?

A. Add the users to their projects, assign the relevant roles to the users, and then provide the users with each relevant Project ID.

B. Add the users to their projects, assign the relevant roles to the users, and then provide the users with each relevant Project Number.

C. Create groups, add the users to their groups, assign the relevant roles to the groups, and then provide the users with each relevant Project ID.

D. Create groups, add the users to their groups, assign the relevant roles to the groups, and then provide the users with each relevant Project Number.

You noticed that your application was forcefully shut down during a Deployment update in Google Kubernetes Engine. Your application didn’t close the database connection before it was terminated. You want to update your application to make sure that it completes a graceful shutdown. What should you do?

A. Update your code to process a received SIGTERM signal to gracefully disconnect from the database.

B. Configure a PodDisruptionBudget to prevent the Pod from being forcefully shut down.

C. Increase the terminationGracePeriodSeconds for your application.

D. Configure a PreStop hook to shut down your application.

You manage a microservice-based ecommerce platform on Google Cloud that sends confirmation emails to a third-party email service provider using a Cloud Function. Your company just launched a marketing campaign, and some customers are reporting that they have not received order confirmation emails. You discover that the services triggering the Cloud Function are receiving HTTP 500 errors. You need to change the way emails are handled to minimize email loss. What should you do?

A. Increase the Cloud Function’s timeout to nine minutes.

B. Configure the sender application to publish the outgoing emails in a message to a Pub/Sub topic. Update the Cloud Function configuration to consume the Pub/Sub queue.

C. Configure the sender application to write emails to Memorystore and then trigger the Cloud Function. When the function is triggered, it reads the email details from Memorystore and sends them to the email service.

D. Configure the sender application to retry the execution of the Cloud Function every one second if a request fails.

You have containerized a legacy application that stores its configuration on an NFS share. You need to deploy this application to Google Kubernetes Engine (GKE) and do not want the application serving traffic until after the configuration has been retrieved. What should you do?

A. Use the gsutil utility to copy files from within the Docker container at startup, and start the service using an ENTRYPOINT script.

B. Create a PersistentVolumeClaim on the GKE cluster. Access the configuration files from the volume, and start the service using an ENTRYPOINT script.

C. Use the COPY statement in the Dockerfile to load the configuration into the container image. Verify that the configuration is available, and start the service using an ENTRYPOINT script.

D. Add a startup script to the GKE instance group to mount the NFS share at node startup. Copy the configuration files into the container, and start the service using an ENTRYPOINT script.

Your security team is auditing all deployed applications running in Google Kubernetes Engine. After completing the audit, your team discovers that some of the applications send traffic within the cluster in clear text. You need to ensure that all application traffic is encrypted as quickly as possible while minimizing changes to your applications and maintaining support from Google. What should you do?

A. Use Network Policies to block traffic between applications.

B. Install Istio, enable proxy injection on your application namespace, and then enable mTLS.

C. Define Trusted Network ranges within the application, and configure the applications to allow traffic only from those networks.

D. Use an automated process to request SSL Certificates for your applications from Let’s Encrypt and add them to your applications.
