Google Professional Cloud Developer Dump Free – 50 Practice Questions to Sharpen Your Exam Readiness.

Looking for a reliable way to prepare for your Google Professional Cloud Developer certification? Our Google Professional Cloud Developer Dump Free includes 50 exam-style practice questions designed to reflect real test scenarios—helping you study smarter and pass with confidence.

Using a free set of Google Professional Cloud Developer dump questions can give you an edge in your exam prep by helping you:

- Understand the format and types of questions you’ll face

- Pinpoint weak areas and focus your study efforts

- Boost your confidence with realistic question practice

Below, you will find 50 free questions from our Google Professional Cloud Developer Dump Free collection. These cover key topics and are structured to simulate the difficulty level of the real exam, making them a valuable tool for review or final prep.

Case study - This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided. To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study. At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study - To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Company Overview - HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement - We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10,000 miles away from each other.

Solution Concept - HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data.

Existing Technical Environment - HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global scale applications. Their existing technical environment is as follows:
* Existing APIs run on Compute Engine virtual machine instances hosted in GCP.
* State is stored in a single instance MySQL database in GCP.
* Data is exported to an on-premises Teradata/Vertica data warehouse.
* Data analytics is performed in an on-premises Hadoop environment.
* The application has no logging.
* There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements - HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:
* Expand availability of the application to new regions.
* Increase the number of concurrent users that can be supported.
* Ensure a consistent experience for users when they travel to different regions.
* Obtain user activity metrics to better understand how to monetize their product.
* Ensure compliance with regulations in the new regions (for example, GDPR).
* Reduce infrastructure management time and cost.
* Adopt the Google-recommended practices for cloud computing.

Technical Requirements -
* The application and backend must provide usage metrics and monitoring.
* APIs require strong authentication and authorization.
* Logging must be increased, and data should be stored in a cloud analytics platform.
* Move to serverless architecture to facilitate elastic scaling.
* Provide authorized access to internal apps in a secure manner.

In order to meet their business requirements, how should HipLocal store their application state?

A. Use local SSDs to store state.

B. Put a memcache layer in front of MySQL.

C. Move the state storage to Cloud Spanner.

D. Replace the MySQL instance with Cloud SQL.

You are writing from a Go application to a Cloud Spanner database. You want to optimize your application’s performance using Google-recommended best practices. What should you do?

A. Write to Cloud Spanner using Cloud Client Libraries.

B. Write to Cloud Spanner using Google API Client Libraries.

C. Write to Cloud Spanner using a custom gRPC client library.

D. Write to Cloud Spanner using a third-party HTTP client library.

You are reviewing and updating your Cloud Build steps to adhere to best practices. Currently, your build steps include: 1. Pull the source code from a source repository. 2. Build a container image. 3. Upload the built image to Artifact Registry. You need to add a step to perform a vulnerability scan of the built container image, and you want the results of the scan to be available to your deployment pipeline running in Google Cloud. You want to minimize changes that could disrupt other teams’ processes. What should you do?

A. Enable Binary Authorization, and configure it to attest that no vulnerabilities exist in a container image.

B. Upload the built container images to your Docker Hub instance, and scan them for vulnerabilities.

C. Enable the Container Scanning API in Artifact Registry, and scan the built container images for vulnerabilities.

D. Add Artifact Registry to your Aqua Security instance, and scan the built container images for vulnerabilities.

You need to copy directory local-scripts and all of its contents from your local workstation to a Compute Engine virtual machine instance. Which command should you use?

A. gsutil cp --project "my-gcp-project" -r ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone "us-east1-b"

B. gsutil cp --project "my-gcp-project" -R ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone "us-east1-b"

C. gcloud compute scp --project "my-gcp-project" --recurse ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone "us-east1-b"

D. gcloud compute mv --project "my-gcp-project" --recurse ~/local-scripts/ gcp-instance-name:~/server-scripts/ --zone "us-east1-b"

Case study - This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided. To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study. At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study - To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Company Overview - HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement - We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10,000 miles away from each other.

Solution Concept - HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data.

Existing Technical Environment - HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global scale applications. Their existing technical environment is as follows:
* Existing APIs run on Compute Engine virtual machine instances hosted in GCP.
* State is stored in a single instance MySQL database in GCP.
* Data is exported to an on-premises Teradata/Vertica data warehouse.
* Data analytics is performed in an on-premises Hadoop environment.
* The application has no logging.
* There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements - HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:
* Expand availability of the application to new regions.
* Increase the number of concurrent users that can be supported.
* Ensure a consistent experience for users when they travel to different regions.
* Obtain user activity metrics to better understand how to monetize their product.
* Ensure compliance with regulations in the new regions (for example, GDPR).
* Reduce infrastructure management time and cost.
* Adopt the Google-recommended practices for cloud computing.

Technical Requirements -
* The application and backend must provide usage metrics and monitoring.
* APIs require strong authentication and authorization.
* Logging must be increased, and data should be stored in a cloud analytics platform.
* Move to serverless architecture to facilitate elastic scaling.
* Provide authorized access to internal apps in a secure manner.

HipLocal wants to improve the resilience of their MySQL deployment, while also meeting their business and technical requirements. Which configuration should they choose?

A. Use the current single instance MySQL on Compute Engine and several read-only MySQL servers on Compute Engine.

B. Use the current single instance MySQL on Compute Engine, and replicate the data to Cloud SQL in an external master configuration.

C. Replace the current single MySQL instance with Cloud SQL, and configure high availability.

D. Replace the current single MySQL instance with Cloud SQL, and rely on Google to provide redundancy without further configuration.

Your company's security team uses Identity and Access Management (IAM) to track which users have access to which resources. You need to create a version control system that can integrate with your security team's processes. You want your solution to support fast release cycles and frequent merges to your main branch to minimize merge conflicts. What should you do?

A. Create a Cloud Source Repositories repository, and use trunk-based development.

B. Create a Cloud Source Repositories repository, and use feature-based development.

C. Create a GitHub repository, mirror it to a Cloud Source Repositories repository, and use trunk-based development.

D. Create a GitHub repository, mirror it to a Cloud Source Repositories repository, and use feature-based development.

Your company’s corporate policy states that there must be a copyright comment at the very beginning of all source files. You want to write a custom step in Cloud Build that is triggered by each source commit. You need the trigger to validate that the source contains a copyright and, if it is missing, add one for subsequent steps. What should you do?

A. Build a new Docker container that examines the files in /workspace and then checks and adds a copyright for each source file. Changed files are explicitly committed back to the source repository.

B. Build a new Docker container that examines the files in /workspace and then checks and adds a copyright for each source file. Changed files do not need to be committed back to the source repository.

C. Build a new Docker container that examines the files in a Cloud Storage bucket and then checks and adds a copyright for each source file. Changed files are written back to the Cloud Storage bucket.

D. Build a new Docker container that examines the files in a Cloud Storage bucket and then checks and adds a copyright for each source file. Changed files are explicitly committed back to the source repository.
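For context on how a custom step like this can work: Cloud Build mounts the same /workspace directory into every build step, so a file changed by one step is visible to the steps that follow it. The following is a minimal sketch of the script such a container might run, assuming Python and shell source files that use # comments. The COPYRIGHT text and the SOURCE_SUFFIXES set are hypothetical placeholders, and the sketch does not handle shebang lines or other comment syntaxes, which a production version would need.

```python
import pathlib

# Hypothetical header; replace with your company's exact copyright text.
COPYRIGHT = "# Copyright (c) Example Corp. All rights reserved.\n"
# Hypothetical set of file types treated as source files (all use # comments).
SOURCE_SUFFIXES = {".py", ".sh"}


def ensure_copyright(workspace: str = "/workspace") -> list:
    """Prepend COPYRIGHT to every source file that does not already start with it.

    Returns the paths (relative to workspace) of the files that were changed.
    Later build steps see the edited files because they share /workspace.
    """
    root = pathlib.Path(workspace)
    changed = []
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix not in SOURCE_SUFFIXES:
            continue
        text = path.read_text(encoding="utf-8")
        if not text.startswith(COPYRIGHT):
            path.write_text(COPYRIGHT + text, encoding="utf-8")
            changed.append(path.relative_to(root).as_posix())
    return changed


if __name__ == "__main__":
    for name in ensure_copyright():
        print(f"added copyright header: {name}")
```

Running it twice is harmless: the second run finds the header already present and changes nothing.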

You work for an organization that manages an ecommerce site. Your application is deployed behind a global HTTP(S) load balancer. You need to test a new product recommendation algorithm. You plan to use A/B testing to determine the new algorithm’s effect on sales in a randomized way. How should you test this feature?

A. Split traffic between versions using weights.

B. Enable the new recommendation feature flag on a single instance.

C. Mirror traffic to the new version of your application.

D. Use HTTP header-based routing.
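Weighted splitting sends a fixed share of traffic to each version; at the load balancer this is configured declaratively rather than in code. The sketch below is a hypothetical application-level illustration of the same weighting idea, not a load balancer feature: it hashes a user ID into one of 100 buckets, so assignment is pseudo-random across users but stable for any single user, and the overall share follows the configured weight. The function name and the "new"/"control" labels are illustrative assumptions.

```python
import hashlib


def assign_variant(user_id: str, new_weight_percent: int) -> str:
    """Return "new" for roughly new_weight_percent% of users, else "control".

    Hashing the user ID maps each user to a stable bucket in [0, 100), so a
    returning user always sees the same variant.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "new" if bucket < new_weight_percent else "control"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    share = sum(assign_variant(u, 10) == "new" for u in users) / len(users)
    print(f"share of users on the new algorithm: {share:.3f}")
```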

You are using Cloud Build to build and test application source code stored in Cloud Source Repositories. The build process requires a build tool not available in the Cloud Build environment. What should you do?

A. Download the binary from the internet during the build process.

B. Build a custom cloud builder image and reference the image in your build steps.

C. Include the binary in your Cloud Source Repositories repository and reference it in your build scripts.

D. Ask to have the binary added to the Cloud Build environment by filing a feature request against the Cloud Build public Issue Tracker.

You are using Cloud Build to build a Docker image. You need to modify the build to run unit and integration tests. When there is a failure, you want the build history to clearly display the stage at which the build failed. What should you do?

A. Add RUN commands in the Dockerfile to execute unit and integration tests.

B. Create a Cloud Build build config file with a single build step to compile unit and integration tests.

C. Create a Cloud Build build config file that will spawn a separate Cloud Build pipeline for unit and integration tests.

D. Create a Cloud Build build config file with separate cloud builder steps to compile and execute unit and integration tests.

A governmental regulation was recently passed that affects your application. For compliance purposes, you are now required to send a duplicate of specific application logs from your application’s project to a project that is restricted to the security team. What should you do?

A. Create user-defined log buckets in the security team’s project. Configure a Cloud Logging sink to route your application’s logs to log buckets in the security team’s project.

B. Create a job that copies the logs from the _Required log bucket into the security team’s log bucket in their project.

C. Modify the _Default log bucket sink rules to reroute the logs into the security team’s log bucket.

D. Create a job that copies the System Event logs from the _Required log bucket into the security team’s log bucket in their project.

You are planning to deploy hundreds of microservices in your Google Kubernetes Engine (GKE) cluster. How should you secure communication between the microservices on GKE using a managed service?

A. Use global HTTP(S) Load Balancing with managed SSL certificates to protect your services.

B. Deploy open source Istio in your GKE cluster, and enable mTLS in your Service Mesh.

C. Install cert-manager on GKE to automatically renew the SSL certificates.

D. Install Anthos Service Mesh, and enable mTLS in your Service Mesh.

You are a lead developer working on a new retail system that runs on Cloud Run and Firestore in Datastore mode. A web UI requirement is for the system to display a list of available products when users access the system and for users to be able to browse through all products. You have implemented this requirement in the minimum viable product (MVP) phase by returning a list of all available products stored in Firestore. A few months after go-live, you notice that Cloud Run instances are terminated with "HTTP 500: Container instances are exceeding memory limits" errors during busy times. This error coincides with spikes in the number of Datastore entity reads. You need to prevent Cloud Run from crashing and decrease the number of Datastore entity reads. You want to use a solution that optimizes system performance. What should you do?

A. Modify the query that returns the product list using integer offsets.

B. Modify the query that returns the product list using limits.

C. Modify the Cloud Run configuration to increase the memory limits.

D. Modify the query that returns the product list using cursors.
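The read-count difference between offsets and cursors can be shown with a toy model. In Firestore in Datastore mode, entities skipped by an integer offset are still retrieved internally and billed as reads, whereas a cursor lets the next query resume where the previous page ended. The ToyProductStore class below is a hypothetical in-memory stand-in that only counts reads rather than calling the Datastore API; its integer cursor is a simplification, since real cursors are opaque values returned with each query result.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class ToyProductStore:
    """In-memory stand-in for product entities, counting every entity read."""

    keys: List[int]
    reads: int = 0

    def page_by_offset(self, offset: int, limit: int) -> List[int]:
        # Skipped entities are still read before the page is returned.
        self.reads += min(offset + limit, len(self.keys))
        return self.keys[offset:offset + limit]

    def page_by_cursor(self, cursor: Optional[int], limit: int) -> Tuple[List[int], Optional[int]]:
        if limit <= 0:
            raise ValueError("limit must be positive")
        # The cursor marks where the previous page ended, so reading resumes there.
        start = 0 if cursor is None else cursor
        page = self.keys[start:start + limit]
        self.reads += len(page)
        end = start + len(page)
        next_cursor = end if end < len(self.keys) else None
        return page, next_cursor


def reads_with_offsets(n_products: int, page_size: int) -> int:
    store = ToyProductStore(list(range(n_products)))
    for offset in range(0, n_products, page_size):
        store.page_by_offset(offset, page_size)
    return store.reads


def reads_with_cursors(n_products: int, page_size: int) -> int:
    store = ToyProductStore(list(range(n_products)))
    cursor = None
    while True:
        _, cursor = store.page_by_cursor(cursor, page_size)
        if cursor is None:
            return store.reads


if __name__ == "__main__":
    print("offset reads:", reads_with_offsets(1000, 50))
    print("cursor reads:", reads_with_cursors(1000, 50))
```

With 1,000 products and pages of 50, the offset version touches 10,500 entities in total while the cursor version touches 1,000. Paging either way keeps only one page in memory per request; cursors additionally avoid re-reading skipped entities.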

You migrated your applications to Google Cloud Platform and kept your existing monitoring platform. You now find that your notification system is too slow for time critical problems. What should you do?

A. Replace your entire monitoring platform with Stackdriver.

B. Install the Stackdriver agents on your Compute Engine instances.

C. Use Stackdriver to capture and alert on logs, then ship them to your existing platform.

D. Migrate some traffic back to your old platform and perform A/B testing on the two platforms concurrently.

You are developing an application that will be launched on Compute Engine instances into multiple distinct projects, each corresponding to the environments in your software development process (development, QA, staging, and production). The instances in each project have the same application code but a different configuration. During deployment, each instance should receive the application's configuration based on the environment it serves. You want to minimize the number of steps to configure this flow. What should you do?

A. When creating your instances, configure a startup script using the gcloud command to determine the project name that indicates the correct environment.

B. In each project, configure a metadata key "environment" whose value is the environment it serves. Use your deployment tool to query the instance metadata and configure the application based on the "environment" value.

C. Deploy your chosen deployment tool on an instance in each project. Use a deployment job to retrieve the appropriate configuration file from your version control system, and apply the configuration when deploying the application on each instance.

D. During each instance launch, configure an instance custom-metadata key named "environment" whose value is the environment the instance serves. Use your deployment tool to query the instance metadata, and configure the application based on the "environment" value.

Your operations team has asked you to create a script that lists the Cloud Bigtable, Memorystore, and Cloud SQL databases running within a project. The script should allow users to submit a filter expression to limit the results presented. How should you retrieve the data?

A. Use the HBase API, Redis API, and MySQL connection to retrieve database lists. Combine the results, and then apply the filter to display the results.

B. Use the HBase API, Redis API, and MySQL connection to retrieve database lists. Filter the results individually, and then combine them to display the results.

C. Run gcloud bigtable instances list, gcloud redis instances list, and gcloud sql databases list. Use a filter within the application, and then display the results.

D. Run gcloud bigtable instances list, gcloud redis instances list, and gcloud sql databases list. Use the --filter flag with each command, and then display the results.

You are supporting a business-critical application in production deployed on Cloud Run. The application is reporting HTTP 500 errors that are affecting the usability of the application. You want to be alerted when the number of errors exceeds 15% of the requests within a specific time window. What should you do?

A. Create a Cloud Function that consumes the Cloud Monitoring API. Use Cloud Scheduler to trigger the Cloud Function daily and alert you if the number of errors is above the defined threshold.

B. Navigate to the Cloud Run page in the Google Cloud console, and select the service from the services list. Use the Metrics tab to visualize the number of errors for that revision, and refresh the page daily.

C. Create an alerting policy in Cloud Monitoring that alerts you if the number of errors is above the defined threshold.

D. Create a Cloud Function that consumes the Cloud Monitoring API. Use Cloud Composer to trigger the Cloud Function daily and alert you if the number of errors is above the defined threshold.
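An alerting policy in Cloud Monitoring evaluates its condition for you over a rolling window. To make the 15% threshold concrete, here is a hypothetical sketch of the arithmetic such a condition expresses, assuming that any 5xx status counts as an error and that request events are available as (timestamp, status) pairs. It illustrates the ratio only and is not how Cloud Monitoring is implemented.

```python
from typing import Iterable, Tuple


def error_ratio_exceeded(
    events: Iterable[Tuple[float, int]],
    window_end: float,
    window_seconds: float,
    threshold: float = 0.15,
) -> bool:
    """Return True if 5xx responses exceed `threshold` of the requests in the window.

    Only events with window_end - window_seconds < timestamp <= window_end count.
    """
    codes = [code for ts, code in events if window_end - window_seconds < ts <= window_end]
    if not codes:
        return False  # No requests in the window, so there is no ratio to evaluate.
    errors = sum(1 for code in codes if 500 <= code <= 599)
    return errors / len(codes) > threshold


if __name__ == "__main__":
    # 20 of 100 requests in the last 300 seconds failed: 20% > 15%.
    sample = [(float(t), 500 if t % 5 == 0 else 200) for t in range(100)]
    print(error_ratio_exceeded(sample, window_end=99.0, window_seconds=300.0))
```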

You want to create `fully baked` or `golden` Compute Engine images for your application. You need to bootstrap your application to connect to the appropriate database according to the environment the application is running on (test, staging, production). What should you do?

A. Embed the appropriate database connection string in the image. Create a different image for each environment.

B. When creating the Compute Engine instance, add a tag with the name of the database to be connected. In your application, query the Compute Engine API to pull the tags for the current instance, and use the tag to construct the appropriate database connection string.

C. When creating the Compute Engine instance, create a metadata item with a key of "DATABASE" and a value for the appropriate database connection string. In your application, read the "DATABASE" environment variable, and use the value to connect to the appropriate database.

D. When creating the Compute Engine instance, create a metadata item with a key of "DATABASE" and a value for the appropriate database connection string. In your application, query the metadata server for the "DATABASE" value, and use the value to connect to the appropriate database.
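Custom metadata set on an instance is read at runtime from the metadata server over HTTP, and every request must carry the Metadata-Flavor: Google header. The sketch below, which uses only the Python standard library, reads an instance attribute such as the DATABASE key from the question. The helper names are hypothetical, and read_attribute only works on a Compute Engine VM, where metadata.google.internal resolves.

```python
import urllib.request

# Base URL for custom instance metadata on Compute Engine.
METADATA_ATTRIBUTES_URL = "http://metadata.google.internal/computeMetadata/v1/instance/attributes/"


def build_attribute_request(key: str) -> urllib.request.Request:
    # The metadata server rejects requests that do not send this header.
    return urllib.request.Request(
        METADATA_ATTRIBUTES_URL + key,
        headers={"Metadata-Flavor": "Google"},
    )


def read_attribute(key: str, timeout: float = 2.0) -> str:
    """Return the value of a custom metadata key (only works on a Compute Engine VM)."""
    with urllib.request.urlopen(build_attribute_request(key), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    connection_string = read_attribute("DATABASE")
    # Hand connection_string to your database driver here (not shown).
    print("read DATABASE metadata value,", len(connection_string), "characters")
```

Because the value is fetched at runtime, the same golden image can run unchanged in test, staging, and production.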

You are monitoring a web application that is written in Go and deployed in Google Kubernetes Engine. You notice an increase in CPU and memory utilization. You need to determine which source code is consuming the most CPU and memory resources. What should you do?

A. Download, install, and start the Snapshot Debugger agent in your VM. Take debug snapshots of the functions that take the longest time. Review the call stack frame, and identify the local variables at that level in the stack.

B. Import the Cloud Profiler package into your application, and initialize the Profiler agent. Review the generated flame graph in the Google Cloud console to identify time-intensive functions.

C. Import OpenTelemetry and Trace export packages into your application, and create the trace provider. Review the latency data for your application on the Trace overview page, and identify where bottlenecks are occurring.

D. Create a Cloud Logging query that gathers the web application’s logs. Write a Python script that calculates the difference between the timestamps from the beginning and the end of the application’s longest functions to identify time-intensive functions.

Your company has a BigQuery dataset named "Master" that keeps information about employee travel and expenses. This information is organized by employee department, and employees should only be able to view information for their own department. You want to apply a security framework to enforce this requirement with the minimum number of steps. What should you do?

A. Create a separate dataset for each department. Create a view with an appropriate WHERE clause to select records from a particular dataset for the specific department. Authorize this view to access records from your Master dataset. Give employees the permission to this department-specific dataset.

B. Create a separate dataset for each department. Create a data pipeline for each department to copy appropriate information from the Master dataset to the specific dataset for the department. Give employees the permission to this department-specific dataset.

C. Create a dataset named Master dataset. Create a separate view for each department in the Master dataset. Give employees access to the specific view for their department.

D. Create a dataset named Master dataset. Create a separate table for each department in the Master dataset. Give employees access to the specific table for their department.

You are designing an application that uses a microservices architecture. You are planning to deploy the application in the cloud and on-premises. You want to make sure the application can scale up on demand and also use managed services as much as possible. What should you do?

A. Deploy open source Istio in a multi-cluster deployment on multiple Google Kubernetes Engine (GKE) clusters managed by Anthos.

B. Create a GKE cluster in each environment with Anthos, and use Cloud Run for Anthos to deploy your application to each cluster.

C. Install a GKE cluster in each environment with Anthos, and use Cloud Build to create a Deployment for your application in each cluster.

D. Create a GKE cluster in the cloud and install open-source Kubernetes on-premises. Use an external load balancer service to distribute traffic across the two environments.

You are developing a new application that has the following design requirements:

- Creation of and changes to the application infrastructure are versioned and auditable.

- The application and deployment infrastructure uses Google-managed services as much as possible.

- The application runs on a serverless compute platform.

How should you design the application's architecture?

A. 1. Store the application and infrastructure source code in a Git repository. 2. Use Cloud Build to deploy the application infrastructure with Terraform. 3. Deploy the application to a Cloud Function as a pipeline step.

B. 1. Deploy Jenkins from the Google Cloud Marketplace, and define a continuous integration pipeline in Jenkins. 2. Configure a pipeline step to pull the application source code from a Git repository. 3. Deploy the application source code to App Engine as a pipeline step.

C. 1. Create a continuous integration pipeline on Cloud Build, and configure the pipeline to deploy the application infrastructure using Deployment Manager templates. 2. Configure a pipeline step to create a container with the latest application source code. 3. Deploy the container to a Compute Engine instance as a pipeline step.

D. 1. Deploy the application infrastructure using gcloud commands. 2. Use Cloud Build to define a continuous integration pipeline for changes to the application source code. 3. Configure a pipeline step to pull the application source code from a Git repository, and create a containerized application. 4. Deploy the new container on Cloud Run as a pipeline step.

You are developing an application that reads credit card data from a Pub/Sub subscription. You have written code and completed unit testing. You need to test the Pub/Sub integration before deploying to Google Cloud. What should you do?

A. Create a service to publish messages, and deploy the Pub/Sub emulator. Generate random content in the publishing service, and publish to the emulator.

B. Create a service to publish messages to your application. Collect the messages from Pub/Sub in production, and replay them through the publishing service.

C. Create a service to publish messages, and deploy the Pub/Sub emulator. Collect the messages from Pub/Sub in production, and publish them to the emulator.

D. Create a service to publish messages, and deploy the Pub/Sub emulator. Publish a standard set of testing messages from the publishing service to the emulator.
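A fixed set of test messages makes integration runs repeatable and keeps real cardholder data out of test environments. The sketch below assumes the emulator is listening on localhost:8085 (or on the address in the PUBSUB_EMULATOR_HOST environment variable) and that the topic already exists; it builds a request for the REST publish method, where each message's data must be base64-encoded. The message fields, the card numbers (widely published test numbers), and the helper names are hypothetical.

```python
import base64
import json
import os
import urllib.request

# A fixed, repeatable set of test messages (hypothetical schema). The card
# numbers are widely published test numbers, never real cardholder data.
TEST_MESSAGES = [
    {"card_number": "4111111111111111", "amount_cents": 1999},
    {"card_number": "5555555555554444", "amount_cents": 250},
]


def emulator_publish_url(project: str, topic: str) -> str:
    # Client libraries also honor PUBSUB_EMULATOR_HOST; localhost:8085 is assumed as a fallback.
    host = os.environ.get("PUBSUB_EMULATOR_HOST", "localhost:8085")
    return f"http://{host}/v1/projects/{project}/topics/{topic}:publish"


def publish_body(messages) -> bytes:
    # The REST publish method expects each message's data to be base64-encoded.
    encoded = [
        {"data": base64.b64encode(json.dumps(m, sort_keys=True).encode("utf-8")).decode("ascii")}
        for m in messages
    ]
    return json.dumps({"messages": encoded}).encode("utf-8")


def publish_to_emulator(project: str, topic: str, messages, timeout: float = 5.0) -> dict:
    """POST the messages to a running emulator; the topic must already exist."""
    request = urllib.request.Request(
        emulator_publish_url(project, topic),
        data=publish_body(messages),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    print(publish_to_emulator("test-project", "card-events", TEST_MESSAGES))
```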

You are using Cloud Run to host a global ecommerce web application. Your company’s design team is creating a new color scheme for the web app. You have been tasked with determining whether the new color scheme will increase sales. You want to conduct testing on live production traffic. How should you design the study?

A. Use an external HTTP(S) load balancer to route a predetermined percentage of traffic to two different color schemes of your application. Analyze the results to determine whether there is a statistically significant difference in sales.

B. Use an external HTTP(S) load balancer to route traffic to the original color scheme while the new deployment is created and tested. After testing is complete, reroute all traffic to the new color scheme. Analyze the results to determine whether there is a statistically significant difference in sales.

C. Use an external HTTP(S) load balancer to mirror traffic to the new version of your application. Analyze the results to determine whether there is a statistically significant difference in sales.

D. Enable a feature flag that displays the new color scheme to half of all users. Monitor sales to see whether they increase for this group of users.
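To decide whether a difference in sales is statistically significant, one common choice is a two-proportion z-test on conversion rates. The sketch below assumes "sales" is measured as the share of sessions that end in a purchase for each color scheme; the function name and inputs are hypothetical, and other tests may suit metrics such as revenue per visitor better.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for conversion rate B minus conversion rate A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # Both groups converted at 0% or 100%: no measurable difference.
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = (1 + erf(x / sqrt(2))) / 2.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_z_test(500, 10_000, 600, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

For example, 500 purchases out of 10,000 sessions on the old scheme versus 600 out of 10,000 on the new one gives a z of about 3.1 and a two-sided p-value of about 0.002.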

You have deployed an HTTP(S) Load Balancer with the gcloud commands shown below. Health checks to port 80 on the Compute Engine virtual machine instance are failing, and no traffic is sent to your instances. You want to resolve the problem. Which commands should you run?

A. gcloud compute instances add-access-config ${NAME}-backend-instance-1

B. gcloud compute instances add-tags ${NAME}-backend-instance-1 --tags http-server

C. gcloud compute firewall-rules create allow-lb --network load-balancer --allow tcp --source-ranges 130.211.0.0/22,35.191.0.0/16 --direction INGRESS

D. gcloud compute firewall-rules create allow-lb --network load-balancer --allow tcp --destination-ranges 130.211.0.0/22,35.191.0.0/16 --direction EGRESS
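Load balancer health check probes originate from the Google ranges 130.211.0.0/22 and 35.191.0.0/16 that appear in the options, and they reach the backend as inbound traffic. VPC firewall rules are stateful, so an ingress rule that allows those sources is enough for the responses to return. The hypothetical helper below simply checks whether a given source address falls inside those ranges, which can help when reading firewall or VPC flow logs.

```python
import ipaddress

# Health check source ranges used in the firewall rule options above.
HEALTH_CHECK_RANGES = [
    ipaddress.ip_network("130.211.0.0/22"),
    ipaddress.ip_network("35.191.0.0/16"),
]


def is_health_check_source(ip: str) -> bool:
    """Return True if `ip` falls inside one of the health check probe ranges."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in HEALTH_CHECK_RANGES)


if __name__ == "__main__":
    for candidate in ["35.191.10.5", "130.211.3.255", "130.211.4.0", "10.128.0.2"]:
        print(candidate, is_health_check_source(candidate))
```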

Your team is creating a serverless web application on Cloud Run. The application needs to access images stored in a private Cloud Storage bucket. You want to give the application Identity and Access Management (IAM) permission to access the images in the bucket, while also securing the services using Google-recommended best practices. What should you do?

A. Enforce signed URLs for the desired bucket. Grant the Storage Object Viewer IAM role on the bucket to the Compute Engine default service account.

B. Enforce public access prevention for the desired bucket. Grant the Storage Object Viewer IAM role on the bucket to the Compute Engine default service account.

C. Enforce signed URLs for the desired bucket. Create and update the Cloud Run service to use a user-managed service account. Grant the Storage Object Viewer IAM role on the bucket to the service account.

D. Enforce public access prevention for the desired bucket. Create and update the Cloud Run service to use a user-managed service account. Grant the Storage Object Viewer IAM role on the bucket to the service account.

You are deploying a single website on App Engine that needs to be accessible via the URL http://www.altostrat.com/. What should you do?

A. Verify domain ownership with Webmaster Central. Create a DNS CNAME record to point to the App Engine canonical name ghs.googlehosted.com.

B. Verify domain ownership with Webmaster Central. Define an A record pointing to the single global App Engine IP address.

C. Define a mapping in dispatch.yaml to point the domain www.altostrat.com to your App Engine service. Create a DNS CNAME record to point to the App Engine canonical name ghs.googlehosted.com.

D. Define a mapping in dispatch.yaml to point the domain www.altostrat.com to your App Engine service. Define an A record pointing to the single global App Engine IP address.

Case study - HipLocal

Company Overview - HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement - We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10,000 miles away from each other.

Solution Concept - HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data, and that they analyze and respond to any issues that occur.

Existing Technical Environment - HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global-scale applications. Their existing technical environment is as follows:

- Existing APIs run on Compute Engine virtual machine instances hosted in GCP.
- State is stored in a single-instance MySQL database in GCP.
- Release cycles include development freezes to allow for QA testing.
- The application has no logging.
- Applications are manually deployed by infrastructure engineers during periods of slow traffic on weekday evenings.
- There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements - HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:

- Expand availability of the application to new regions.
- Support 10x as many concurrent users.
- Ensure a consistent experience for users when they travel to different regions.
- Obtain user activity metrics to better understand how to monetize their product.
- Ensure compliance with regulations in the new regions (for example, GDPR).
- Reduce infrastructure management time and cost.
- Adopt the Google-recommended practices for cloud computing:
  - Develop standardized workflows and processes around application lifecycle management.
  - Define service level indicators (SLIs) and service level objectives (SLOs).

Technical Requirements -

- Provide secure communications between the on-premises data center and cloud-hosted applications and infrastructure.
- The application must provide usage metrics and monitoring.
- APIs require authentication and authorization.
- Implement faster and more accurate validation of new features.
- Logging and performance metrics must provide actionable information to be able to provide debugging information and alerts.
- Must scale to meet user demand.

For this question, refer to the HipLocal case study. How should HipLocal increase their API development speed while continuing to provide the QA team with a stable testing environment that meets feature requirements?

A. Include unit tests in their code, and prevent deployments to QA until all tests have a passing status.

B. Include performance tests in their code, and prevent deployments to QA until all tests have a passing status.

C. Create health checks for the QA environment, and redeploy the APIs at a later time if the environment is unhealthy.

D. Redeploy the APIs to App Engine using Traffic Splitting. Do not move QA traffic to the new versions if errors are found.

You are developing an application that will allow users to read and post comments on news articles. You want to configure your application to store and display user-submitted comments using Firestore. How should you design the schema to support an unknown number of comments and articles?

A. Store each comment in a subcollection of the article.

B. Add each comment to an array property on the article.

C. Store each comment in a document, and add the comment’s key to an array property on the article.

D. Store each comment in a document, and add the comment’s key to an array property on the user profile.
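
The options differ in where each comment lives. The sketch below contrasts two layouts:

- An array that grows inside one article document. This is bounded by Firestore's documented 1 MiB maximum document size.
- One document per comment, addressed by a hierarchical path.

It uses plain Python dictionaries rather than the Firestore client library. The `articles`/`comments` collection names and the 5,000 sample comments are illustrative assumptions.

```python
import json

MAX_DOC_BYTES = 1_048_576  # Firestore's documented maximum document size: 1 MiB

def comment_path(article_id: str, comment_id: str) -> str:
    """Path for a comment stored as its own document in a subcollection of the article."""
    return f"articles/{article_id}/comments/{comment_id}"

# Layout 1: every comment appended to an array property on the article document.
article = {"title": "Example headline", "comments": []}
for i in range(5000):
    article["comments"].append({"user": f"user{i}", "text": "x" * 300})

# JSON length is only a rough proxy; Firestore computes document size with its own rules.
approx_size = len(json.dumps(article).encode("utf-8"))
print("array layout still fits in one document:", approx_size <= MAX_DOC_BYTES)

# Layout 2: one small document per comment, so the article document does not grow.
print([comment_path("article-123", f"c{i}") for i in range(3)])
```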

Your team is setting up a build pipeline for an application that will run in Google Kubernetes Engine (GKE). For security reasons, you only want images produced by the pipeline to be deployed to your GKE cluster. Which combination of Google Cloud services should you use?

A. Cloud Build, Cloud Storage, and Binary Authorization

B. Google Cloud Deploy, Cloud Storage, and Google Cloud Armor

C. Google Cloud Deploy, Artifact Registry, and Google Cloud Armor

D. Cloud Build, Artifact Registry, and Binary Authorization

You are deploying a microservices application to Google Kubernetes Engine (GKE). The application will receive daily updates. You expect to deploy a large number of distinct containers that will run on the Linux operating system (OS). You want to be alerted to any known OS vulnerabilities in the new containers. You want to follow Google-recommended best practices. What should you do?

A. Use the gcloud CLI to call Container Analysis to scan new container images. Review the vulnerability results before each deployment.

B. Enable Container Analysis, and upload new container images to Artifact Registry. Review the vulnerability results before each deployment.

C. Enable Container Analysis, and upload new container images to Artifact Registry. Review the critical vulnerability results before each deployment.

D. Use the Container Analysis REST API to call Container Analysis to scan new container images. Review the vulnerability results before each deployment.

You are developing a microservice-based application that will run on Google Kubernetes Engine (GKE). Some of the services need to access different Google Cloud APIs. How should you set up authentication of these services in the cluster following Google-recommended best practices? (Choose two.)

A. Use the service account attached to the GKE node.

B. Enable Workload Identity in the cluster via the gcloud command-line tool.

C. Access the Google service account keys from a secret management service.

D. Store the Google service account keys in a central secret management service.

E. Use gcloud to bind the Kubernetes service account and the Google service account using roles/iam.workloadIdentity.
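
Options B and E refer to Workload Identity. When a Kubernetes service account is bound to a Google service account, the IAM member takes the form `serviceAccount:PROJECT_ID.svc.id.goog[NAMESPACE/KSA_NAME]`. The role Google documents for this binding is `roles/iam.workloadIdentityUser`. The helper below only formats that member string. The project, namespace, and account names are placeholders.

```python
def workload_identity_member(project_id: str, namespace: str, ksa_name: str) -> str:
    """Format the IAM member for a Kubernetes service account under Workload Identity."""
    # A project's workload identity pool is PROJECT_ID.svc.id.goog.
    return f"serviceAccount:{project_id}.svc.id.goog[{namespace}/{ksa_name}]"

member = workload_identity_member("my-project", "default", "app-ksa")
print(member)
```

Pass the formatted string as `--member` to `gcloud iam service-accounts add-iam-policy-binding`, together with `--role roles/iam.workloadIdentityUser`. Then annotate the Kubernetes service account with `iam.gke.io/gcp-service-account`.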

You are a developer at a large corporation. You manage three Google Kubernetes Engine clusters on Google Cloud. Your team’s developers need to switch from one cluster to another regularly without losing access to their preferred development tools. You want to configure access to these multiple clusters while following Google-recommended best practices. What should you do?

A. Ask the developers to use Cloud Shell and run gcloud container clusters get-credentials to switch to another cluster.

B. In a configuration file, define the clusters, users, and contexts. Share the file with the developers and ask them to use kubectl config to add cluster, user, and context details.

C. Ask the developers to install the gcloud CLI on their workstation and run gcloud container clusters get-credentials to switch to another cluster.

D. Ask the developers to open three terminals on their workstation and use kubectl config to configure access to each cluster.

You have an application controlled by a managed instance group. When you deploy a new version of the application, costs should be minimized and the number of instances should not increase. You want to ensure that, when each new instance is created, the deployment only continues if the new instance is healthy. What should you do?

A. Perform a rolling-action with maxSurge set to 1, maxUnavailable set to 0.

B. Perform a rolling-action with maxSurge set to 0, maxUnavailable set to 1.

C. Perform a rolling-action with maxHealthy set to 1, maxUnhealthy set to 0.

D. Perform a rolling-action with maxHealthy set to 0, maxUnhealthy set to 1.
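
For a managed instance group, two settings bound a rolling update:

- `maxSurge` caps how many instances can be created above the target size during an update.
- `maxUnavailable` caps how many instances can be unavailable at once.

The sketch below is plain arithmetic for a hypothetical group with a target size of 4. It shows how the two settings in options A and B trade peak instance count (and therefore cost) against serving capacity.

```python
def rollout_bounds(target_size: int, max_surge: int, max_unavailable: int) -> tuple[int, int]:
    """Return (peak instance count, minimum instances serving) for a rolling update."""
    if max_surge == 0 and max_unavailable == 0:
        # With no extra capacity and no room to take an instance offline,
        # the update could never replace anything.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    peak = target_size + max_surge               # extra instances created above target
    min_serving = target_size - max_unavailable  # instances that may be offline at once
    return peak, min_serving

for surge, unavailable in [(1, 0), (0, 1)]:
    peak, floor = rollout_bounds(4, surge, unavailable)
    print(f"maxSurge={surge}, maxUnavailable={unavailable}: "
          f"up to {peak} instances, at least {floor} serving")
```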

Your team develops services that run on Google Kubernetes Engine. Your team's code is stored in Cloud Source Repositories. You need to quickly identify bugs in the code before it is deployed to production. You want to invest in automation to improve developer feedback and make the process as efficient as possible. What should you do?

A. Use Spinnaker to automate building container images from code based on Git tags.

B. Use Cloud Build to automate building container images from code based on Git tags.

C. Use Spinnaker to automate deploying container images to the production environment.

D. Use Cloud Build to automate building container images from code based on forked versions.

You are developing an event-driven application. You have created a topic to receive messages sent to Pub/Sub. You want those messages to be processed in real time. You need the application to be independent from any other system and only incur costs when new messages arrive. How should you configure the architecture?

A. Deploy the application on Compute Engine. Use a Pub/Sub push subscription to process new messages in the topic.

B. Deploy your code on Cloud Functions. Use a Pub/Sub trigger to invoke the Cloud Function. Use the Pub/Sub API to create a pull subscription to the Pub/Sub topic and read messages from it.

C. Deploy the application on Google Kubernetes Engine. Use the Pub/Sub API to create a pull subscription to the Pub/Sub topic and read messages from it.

D. Deploy your code on Cloud Functions. Use a Pub/Sub trigger to handle new messages in the topic.
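
Options B and D use a Pub/Sub trigger on Cloud Functions. The sketch below assumes a 1st-gen Python background function. In that model, the runtime calls the entry point with `(event, context)`, and `event["data"]` holds the base64-encoded message body delivered by the trigger. The function name `process_message` and the sample message are hypothetical.

```python
import base64

def process_message(event: dict, context) -> str:
    """Entry point for a Pub/Sub-triggered background function (1st-gen signature)."""
    # The message payload arrives base64-encoded in event["data"].
    payload = base64.b64decode(event["data"]).decode("utf-8") if "data" in event else ""
    print(f"Received message: {payload}")
    return payload  # returned only to make local testing easy; the runtime ignores it

# Local usage: simulate the event the trigger would deliver.
fake_event = {"data": base64.b64encode(b"order-42 created").decode("ascii")}
process_message(fake_event, context=None)
```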

For this question, refer to the HipLocal case study. HipLocal's application uses Cloud Client Libraries to interact with Google Cloud. HipLocal needs to configure authentication and authorization in the Cloud Client Libraries to implement least privileged access for the application. What should they do?

A. Create an API key. Use the API key to interact with Google Cloud.

B. Use the default compute service account to interact with Google Cloud.

C. Create a service account for the application. Export and deploy the private key for the application. Use the service account to interact with Google Cloud.

D. Create a service account for the application and for each Google Cloud API used by the application. Export and deploy the private keys used by the application. Use the service account with one Google Cloud API to interact with Google Cloud.

You plan to deploy a new Go application to Cloud Run. The source code is stored in Cloud Source Repositories. You need to configure a fully managed, automated, continuous deployment pipeline that runs when a source code commit is made. You want to use the simplest deployment solution. What should you do?

A. Configure a cron job on your workstations to periodically run gcloud run deploy --source in the working directory.

B. Configure a Jenkins trigger to run the container build and deploy process for each source code commit to Cloud Source Repositories.

C. Configure continuous deployment of new revisions from a source repository for Cloud Run using buildpacks.

D. Use Cloud Build with a trigger configured to run the container build and deploy process for each source code commit to Cloud Source Repositories.

The development teams in your company want to manage resources from their local environments. You have been asked to enable developer access to each team’s Google Cloud projects. You want to maximize efficiency while following Google-recommended best practices. What should you do?

A. Add the users to their projects, assign the relevant roles to the users, and then provide the users with each relevant Project ID.

B. Add the users to their projects, assign the relevant roles to the users, and then provide the users with each relevant Project Number.

C. Create groups, add the users to their groups, assign the relevant roles to the groups, and then provide the users with each relevant Project ID.

D. Create groups, add the users to their groups, assign the relevant roles to the groups, and then provide the users with each relevant Project Number.

You manage a microservices application on Google Kubernetes Engine (GKE) using Istio. You secure the communication channels between your microservices by implementing an Istio AuthorizationPolicy, a Kubernetes NetworkPolicy, and mTLS on your GKE cluster. You discover that HTTP requests between two Pods to specific URLs fail, while other requests to other URLs succeed. What is the cause of the connection issue?

A. A Kubernetes NetworkPolicy resource is blocking HTTP traffic between the Pods.

B. The Pod initiating the HTTP requests is attempting to connect to the target Pod via an incorrect TCP port.

C. The Authorization Policy of your cluster is blocking HTTP requests for specific paths within your application.

D. The cluster has mTLS configured in permissive mode, but the Pod’s sidecar proxy is sending unencrypted traffic in plain text.

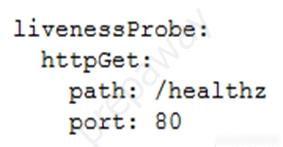

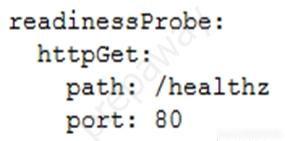

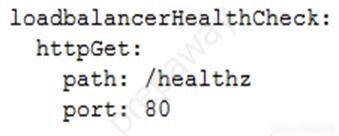

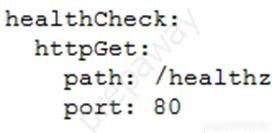

You are planning to deploy your application in a Google Kubernetes Engine (GKE) cluster. The application exposes an HTTP-based health check at /healthz. You want to use this health check endpoint to determine whether traffic should be routed to the pod by the load balancer. Which code snippet should you include in your Pod configuration?

You are creating a web application that runs in a Compute Engine instance and writes a file to any user's Google Drive. You need to configure the application to authenticate to the Google Drive API. What should you do?

A. Use an OAuth Client ID that uses the https://www.googleapis.com/auth/drive.file scope to obtain an access token for each user.

B. Use an OAuth Client ID with delegated domain-wide authority.

C. Use the App Engine service account and https://www.googleapis.com/auth/drive.file scope to generate a signed JSON Web Token (JWT).

D. Use the App Engine service account with delegated domain-wide authority.

Your team is developing unit tests for Cloud Function code. The code is stored in a Cloud Source Repositories repository. You are responsible for implementing the tests. Only a specific service account has the necessary permissions to deploy the code to Cloud Functions. You want to ensure that the code cannot be deployed without first passing the tests. How should you configure the unit testing process?

A. Configure Cloud Build to deploy the Cloud Function. If the code passes the tests, a deployment approval is sent to you.

B. Configure Cloud Build to deploy the Cloud Function, using the specific service account as the build agent. Run the unit tests after successful deployment.

C. Configure Cloud Build to run the unit tests. If the code passes the tests, the developer deploys the Cloud Function.

D. Configure Cloud Build to run the unit tests, using the specific service account as the build agent. If the code passes the tests, Cloud Build deploys the Cloud Function.

You are deploying your application to a Compute Engine virtual machine instance with the Stackdriver Monitoring Agent installed. Your application is a unix process on the instance. You want to be alerted if the unix process has not run for at least 5 minutes. You are not able to change the application to generate metrics or logs. Which alert condition should you configure?

A. Uptime check

B. Process health

C. Metric absence

D. Metric threshold

You have an application that uses an HTTP Cloud Function to process user activity from both desktop browser and mobile application clients. This function will serve as the endpoint for all metric submissions using HTTP POST. Due to legacy restrictions, the function must be mapped to a domain that is separate from the domain requested by users on web or mobile sessions. The domain for the Cloud Function is https://fn.example.com. Desktop and mobile clients use the domain https://www.example.com. You need to add a header to the function's HTTP response so that only those browser and mobile sessions can submit metrics to the Cloud Function. Which response header should you add?

A. Access-Control-Allow-Origin: *

B. Access-Control-Allow-Origin: https://*.example.com

C. Access-Control-Allow-Origin: https://fn.example.com

D. Access-Control-Allow-Origin: https://www.example.com
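
The header options are CORS (Cross-Origin Resource Sharing) response headers. Per the CORS protocol, `Access-Control-Allow-Origin` carries a single origin, `*`, or `null`. It does not accept patterns such as `https://*.example.com`. The framework-agnostic sketch below grants access only to an allow-listed origin. It assumes the function can read the request's `Origin` header and set response headers from a dict, and the helper name is hypothetical.

```python
from typing import Optional

# Origins allowed to call the metrics endpoint (the client domain from the question).
ALLOWED_ORIGINS = {"https://www.example.com"}

def cors_headers(request_origin: Optional[str]) -> dict[str, str]:
    """Return CORS response headers for a request that carried the given Origin header."""
    headers = {"Vary": "Origin"}  # the response depends on Origin, so caches must key on it
    if request_origin in ALLOWED_ORIGINS:
        # Echo back the exact origin; the header takes one origin, "*", or "null".
        headers["Access-Control-Allow-Origin"] = request_origin
    return headers

print(cors_headers("https://www.example.com"))
print(cors_headers("https://attacker.example.net"))
```

For POST requests with a JSON body, browsers also send a preflight OPTIONS request. The response to that request needs its own `Access-Control-Allow-Methods` and `Access-Control-Allow-Headers` headers, which this sketch omits.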

You are developing a microservice-based application that will be deployed on a Google Kubernetes Engine cluster. The application needs to read and write to a Spanner database. You want to follow security best practices while minimizing code changes. How should you configure your application to retrieve Spanner credentials?

A. Configure the appropriate service accounts, and use Workload Identity to run the pods.

B. Store the application credentials as Kubernetes Secrets, and expose them as environment variables.

C. Configure the appropriate routing rules, and use a VPC-native cluster to directly connect to the database.

D. Store the application credentials using Cloud Key Management Service, and retrieve them whenever a database connection is made.

Case study - HipLocal

Company Overview - HipLocal is a community application designed to facilitate communication between people in close proximity. It is used for event planning and organizing sporting events, and for businesses to connect with their local communities. HipLocal launched recently in a few neighborhoods in Dallas and is rapidly growing into a global phenomenon. Its unique style of hyper-local community communication and business outreach is in demand around the world.

Executive Statement - We are the number one local community app; it's time to take our local community services global. Our venture capital investors want to see rapid growth and the same great experience for new local and virtual communities that come online, whether their members are 10 or 10,000 miles away from each other.

Solution Concept - HipLocal wants to expand their existing service, with updated functionality, in new regions to better serve their global customers. They want to hire and train a new team to support these regions in their time zones. They will need to ensure that the application scales smoothly and provides clear uptime data.

Existing Technical Environment - HipLocal's environment is a mix of on-premises hardware and infrastructure running in Google Cloud Platform. The HipLocal team understands their application well, but has limited experience in global-scale applications. Their existing technical environment is as follows:

- Existing APIs run on Compute Engine virtual machine instances hosted in GCP.
- State is stored in a single-instance MySQL database in GCP.
- Data is exported to an on-premises Teradata/Vertica data warehouse.
- Data analytics is performed in an on-premises Hadoop environment.
- The application has no logging.
- There are basic indicators of uptime; alerts are frequently fired when the APIs are unresponsive.

Business Requirements - HipLocal's investors want to expand their footprint and support the increase in demand they are seeing. Their requirements are:

- Expand availability of the application to new regions.
- Increase the number of concurrent users that can be supported.
- Ensure a consistent experience for users when they travel to different regions.
- Obtain user activity metrics to better understand how to monetize their product.
- Ensure compliance with regulations in the new regions (for example, GDPR).
- Reduce infrastructure management time and cost.
- Adopt the Google-recommended practices for cloud computing.

Technical Requirements -

- The application and backend must provide usage metrics and monitoring.
- APIs require strong authentication and authorization.
- Logging must be increased, and data should be stored in a cloud analytics platform.
- Move to serverless architecture to facilitate elastic scaling.
- Provide authorized access to internal apps in a secure manner.

HipLocal wants to reduce the number of on-call engineers and eliminate manual scaling. Which two services should they choose? (Choose two.)

A. Use Google App Engine services.

B. Use serverless Google Cloud Functions.

C. Use Knative to build and deploy serverless applications.

D. Use Google Kubernetes Engine for automated deployments.

E. Use a large Google Compute Engine cluster for deployments.

You have recently instrumented a new application with OpenTelemetry, and you want to check the latency of your application requests in Trace. You want to ensure that a specific request is always traced. What should you do?

A. Wait 10 minutes, then verify that Trace captures those types of requests automatically.

B. Write a custom script that sends this type of request repeatedly from your dev project.

C. Use the Trace API to apply custom attributes to the trace.

D. Add the X-Cloud-Trace-Context header to the request with the appropriate parameters.
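
Option D names the `X-Cloud-Trace-Context` header. Google Cloud documents its value as `TRACE_ID/SPAN_ID;o=OPTIONS`:

- `TRACE_ID` is a 32-character hexadecimal value.
- `SPAN_ID` is the decimal form of an unsigned integer.
- `o=1` requests that the request be traced.

The sketch below generates such a value with the standard library. The helper name is hypothetical, and the receiving service or its propagator must be configured to honor the header.

```python
import secrets

def cloud_trace_header(force_trace: bool = True) -> str:
    """Build an X-Cloud-Trace-Context value of the form TRACE_ID/SPAN_ID;o=OPTIONS."""
    trace_id = secrets.token_hex(16)            # 16 random bytes -> 32 hex characters
    span_id = secrets.randbelow(2**64 - 1) + 1  # unsigned 64-bit value in decimal, never 0
    options = 1 if force_trace else 0           # o=1 asks for this request to be traced
    return f"{trace_id}/{span_id};o={options}"

header = cloud_trace_header()
print({"X-Cloud-Trace-Context": header})
```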

You are a cluster administrator for Google Kubernetes Engine (GKE). Your organization’s clusters are enrolled in a release channel. You need to be informed of relevant events that affect your GKE clusters, such as available upgrades and security bulletins. What should you do?

A. Configure cluster notifications to be sent to a Pub/Sub topic.

B. Execute a scheduled query against the google_cloud_release_notes BigQuery dataset.

C. Query the GKE API for available versions.

D. Create an RSS subscription to receive a daily summary of the GKE release notes.

You have an HTTP Cloud Function that is called via POST. Each submission's request body has a flat, unnested JSON structure containing numeric and text data. After the Cloud Function completes, the collected data should be immediately available for ongoing and complex analytics by many users in parallel. How should you persist the submissions?

A. Directly persist each POST request’s JSON data into Datastore.

B. Transform the POST request’s JSON data, and stream it into BigQuery.

C. Transform the POST request’s JSON data, and store it in a regional Cloud SQL cluster.

D. Persist each POST request’s JSON data as an individual file within Cloud Storage, with the file name containing the request identifier.
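
Option B mentions transforming the JSON before streaming it into BigQuery. The sketch below covers the transform step only, using a made-up schema (`user_id`, `score`, `comment`). The resulting list of row dicts matches the shape that the `google-cloud-bigquery` client's `insert_rows_json` method accepts. The client call itself is omitted so that the sketch runs offline.

```python
import json

# Hypothetical target schema: column name -> Python type to coerce to.
SCHEMA = {"user_id": str, "score": float, "comment": str}

def to_row(body: str) -> dict:
    """Parse a flat JSON request body and keep only schema columns, coerced to their types."""
    data = json.loads(body)
    if not isinstance(data, dict):
        raise ValueError("expected a flat JSON object")
    return {col: cast(data[col]) for col, cast in SCHEMA.items() if col in data}

rows = [to_row('{"user_id": "u1", "score": "4.5", "comment": "great", "extra": true}')]
print(rows)  # one dict per row to stream
```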

Access Full Google Professional Cloud Developer Dump Free

Looking for even more practice questions? Click here to access the complete Google Professional Cloud Developer Dump Free collection, offering hundreds of questions across all exam objectives.

We regularly update our content to ensure accuracy and relevance—so be sure to check back for new material.

Begin your certification journey today with our Google Professional Cloud Developer dump free questions — and get one step closer to exam success!