DBS-C01 Practice Exam Free – 50 Questions to Simulate the Real Exam

Are you getting ready for the DBS-C01 certification? Take your preparation to the next level with our DBS-C01 Practice Exam Free – a carefully designed set of 50 realistic exam-style questions to help you evaluate your knowledge and boost your confidence.

Using a free DBS-C01 practice exam is one of the best ways to:

- Experience the format and difficulty of the real exam

- Identify your strengths and focus on weak areas

- Improve your test-taking speed and accuracy

Below, you will find 50 realistic, free DBS-C01 practice exam questions covering key exam topics. Each question reflects the structure and challenge of the actual exam.

A gaming company is evaluating Amazon ElastiCache as a solution to manage player leaderboards. Millions of players around the world will compete in annual tournaments. The company wants to implement an architecture that is highly available. The company also wants to ensure that maintenance activities have minimal impact on the availability of the gaming platform. Which combination of steps should the company take to meet these requirements? (Choose two.)

A. Deploy an ElastiCache for Redis cluster with read replicas and Multi-AZ enabled.

B. Deploy an ElastiCache for Memcached global datastore.

C. Deploy a single-node ElastiCache for Redis cluster with automatic backups enabled. In the event of a failure, create a new cluster and restore data from the most recent backup.

D. Use the default maintenance window to apply any required system changes and mandatory updates as soon as they are available.

E. Choose a preferred maintenance window at the time of lowest usage to apply any required changes and mandatory updates.

A company is planning to migrate a 40 TB Oracle database to an Amazon Aurora PostgreSQL DB cluster by using a single AWS Database Migration Service (AWS DMS) task within a single replication instance. During early testing, AWS DMS is not scaling to the company's needs. Full load and change data capture (CDC) are taking days to complete. The source database server and the target DB cluster have enough network bandwidth and CPU bandwidth for the additional workload. The replication instance has enough resources to support the replication. A database specialist needs to improve database performance, reduce data migration time, and create multiple DMS tasks. Which combination of changes will meet these requirements? (Choose two.)

A. Increase the value of the ParallelLoadThreads parameter in the DMS task settings for the tables.

B. Use a smaller set of tables with each DMS task. Set the MaxFullLoadSubTasks parameter to a higher value.

C. Use a smaller set of tables with each DMS task. Set the MaxFullLoadSubTasks parameter to a lower value.

D. Use parallel load with different data boundaries for larger tables.

E. Run the DMS tasks on a larger instance class. Increase local storage on the instance.
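
As background for the options above, AWS DMS exposes full-load parallelism in two places: the `FullLoadSettings.MaxFullLoadSubTasks` task setting (how many tables load at once) and per-table `parallel-load` rules in the table mappings (how one large table is split). The sketch below only builds those two JSON documents. The schema, table, column, and boundary values are hypothetical, and the subtask count is an arbitrary illustration rather than a recommendation.

```python
import json

# Documented upper bound for MaxFullLoadSubTasks at the time of writing (default is 8).
MAX_SUBTASKS_LIMIT = 49


def build_task_settings(max_subtasks: int) -> dict:
    """Return a partial task-settings document that sets table-level parallelism."""
    if not 1 <= max_subtasks <= MAX_SUBTASKS_LIMIT:
        raise ValueError(f"MaxFullLoadSubTasks must be between 1 and {MAX_SUBTASKS_LIMIT}")
    return {"FullLoadSettings": {"MaxFullLoadSubTasks": max_subtasks}}


def build_table_mappings(schema: str, table: str, column: str, boundaries: list) -> dict:
    """Select one table and split its full load into ranges on `column`."""
    locator = {"schema-name": schema, "table-name": table}
    return {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-table",
                "object-locator": dict(locator),
                "rule-action": "include",
            },
            {
                "rule-type": "table-settings",
                "rule-id": "2",
                "rule-name": "parallel-load-ranges",
                "object-locator": dict(locator),
                # Each boundary closes one segment; rows above the last boundary
                # form the final segment.
                "parallel-load": {
                    "type": "ranges",
                    "columns": [column],
                    "boundaries": [[str(b)] for b in boundaries],
                },
            },
        ]
    }


if __name__ == "__main__":
    # Hypothetical table SALES.ORDERS split into three segments on ORDER_ID.
    print(json.dumps(build_task_settings(16), indent=2))
    print(json.dumps(build_table_mappings("SALES", "ORDERS", "ORDER_ID", [1000000, 2000000]), indent=2))
```

The two documents would be passed as the task settings and table mappings when a task is created. Whether a higher or lower subtask count helps depends on the headroom of the source, target, and replication instance.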

A company wants to improve its ecommerce website on AWS. A database specialist decides to add Amazon ElastiCache for Redis in the implementation stack to ease the workload off the database and shorten the website response times. The database specialist must also ensure the ecommerce website is highly available within the company's AWS Region. How should the database specialist deploy ElastiCache to meet this requirement?

A. Launch an ElastiCache for Redis cluster using the AWS CLI with the -cluster-enabled switch.

B. Launch an ElastiCache for Redis cluster and select read replicas in different Availability Zones.

C. Launch two ElastiCache for Redis clusters in two different Availability Zones. Configure Redis streams to replicate the cache from the primary cluster to another.

D. Launch an ElastiCache cluster in the primary Availability Zone and restore the cluster’s snapshot to a different Availability Zone during disaster recovery.

A company wants to migrate its on-premises MySQL databases to Amazon RDS for MySQL. To comply with the company's security policy, all databases must be encrypted at rest. RDS DB instance snapshots must also be shared across various accounts to provision testing and staging environments. Which solution meets these requirements?

A. Create an RDS for MySQL DB instance with an AWS Key Management Service (AWS KMS) customer managed CMK. Update the key policy to include the Amazon Resource Name (ARN) of the other AWS accounts as a principal, and then allow the kms:CreateGrant action.

B. Create an RDS for MySQL DB instance with an AWS managed CMK. Create a new key policy to include the Amazon Resource Name (ARN) of the other AWS accounts as a principal, and then allow the kms:CreateGrant action.

C. Create an RDS for MySQL DB instance with an AWS owned CMK. Create a new key policy to include the administrator user name of the other AWS accounts as a principal, and then allow the kms:CreateGrant action.

D. Create an RDS for MySQL DB instance with an AWS CloudHSM key. Update the key policy to include the Amazon Resource Name (ARN) of the other AWS accounts as a principal, and then allow the kms:CreateGrant action.
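
Several options above refer to adding other accounts as principals in a KMS key policy and allowing `kms:CreateGrant`. A minimal sketch of what such a statement could look like is shown below. The account IDs are hypothetical placeholders, and this covers only the grant-related part: a complete policy for sharing encrypted snapshots would typically also allow actions such as `kms:Decrypt` and `kms:DescribeKey`.

```python
import json


def cross_account_grant_statement(account_ids: list) -> dict:
    """Build a key-policy statement letting other accounts create grants on the key."""
    for account_id in account_ids:
        if not (len(account_id) == 12 and account_id.isdigit()):
            raise ValueError(f"not a 12-digit AWS account ID: {account_id!r}")
    return {
        "Sid": "AllowGrantsForSharedSnapshots",
        "Effect": "Allow",
        # The account root principal delegates the decision to IAM in that account.
        "Principal": {"AWS": [f"arn:aws:iam::{a}:root" for a in account_ids]},
        "Action": ["kms:CreateGrant", "kms:ListGrants", "kms:RevokeGrant"],
        "Resource": "*",
        # Only allow grants created on behalf of an AWS service such as Amazon RDS.
        "Condition": {"Bool": {"kms:GrantIsForAWSResource": "true"}},
    }


if __name__ == "__main__":
    # Hypothetical test and staging account IDs.
    print(json.dumps(cross_account_grant_statement(["111122223333", "444455556666"]), indent=2))
```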

A company uses an Amazon RDS for PostgreSQL DB instance for its customer relationship management (CRM) system. New compliance requirements specify that the database must be encrypted at rest. Which action will meet these requirements?

A. Create an encrypted copy of manual snapshot of the DB instance. Restore a new DB instance from the encrypted snapshot.

B. Modify the DB instance and enable encryption.

C. Restore a DB instance from the most recent automated snapshot and enable encryption.

D. Create an encrypted read replica of the DB instance. Promote the read replica to a standalone instance.

A database specialist is designing a disaster recovery (DR) strategy for a highly available application that is in development. The application uses an Amazon DynamoDB table as its data store. The application requires an RTO of 1 minute and an RPO of 2 minutes. Which DR strategy for the DynamoDB table will meet these requirements with the MOST operational efficiency?

A. Create a DynamoDB stream and an AWS Lambda function. Configure the Lambda function to process the stream and copy the data to a table in another AWS Region.

B. Use a DynamoDB global table replica in another AWS Region. Activate point-in-time recovery for both tables.

C. Use a DynamoDB Accelerator (DAX) table in another AWS Region. Activate point-in-time recovery for the table.

D. Create an AWS Backup plan. Assign the DynamoDB table as a resource.

A financial services company uses Amazon RDS for Oracle with Transparent Data Encryption (TDE). The company is required to encrypt its data at rest at all times. The key required to decrypt the data has to be highly available, and access to the key must be limited. As a regulatory requirement, the company must have the ability to rotate the encryption key on demand. The company must be able to make the key unusable if any potential security breaches are spotted. The company also needs to accomplish these tasks with minimum overhead. What should the database administrator use to set up the encryption to meet these requirements?

A. AWS CloudHSM

B. AWS Key Management Service (AWS KMS) with an AWS managed key

C. AWS Key Management Service (AWS KMS) with server-side encryption

D. AWS Key Management Service (AWS KMS) CMK with customer-provided material

A Database Specialist needs to define a database migration strategy to migrate an on-premises Oracle database to an Amazon Aurora MySQL DB cluster. The company requires near-zero downtime for the data migration. The solution must also be cost-effective. Which approach should the Database Specialist take?

A. Dump all the tables from the Oracle database into an Amazon S3 bucket using Data Pump (expdp). Run data transformations in AWS Glue. Load the data from the S3 bucket to the Aurora DB cluster.

B. Order an AWS Snowball appliance and copy the Oracle backup to the Snowball appliance. Once the Snowball data is delivered to Amazon S3, create a new Aurora DB cluster. Enable the S3 integration to migrate the data directly from Amazon S3 to Amazon RDS.

C. Use the AWS Schema Conversion Tool (AWS SCT) to help rewrite database objects to MySQL during the schema migration. Use AWS DMS to perform the full load and change data capture (CDC) tasks.

D. Use AWS Server Migration Service (AWS SMS) to import the Oracle virtual machine image as an Amazon EC2 instance. Use the Oracle Logical Dump utility to migrate the Oracle data from Amazon EC2 to an Aurora DB cluster.

A software development company is using Amazon Aurora MySQL DB clusters for several use cases, including development and reporting. These use cases place unpredictable and varying demands on the Aurora DB clusters, and can cause momentary spikes in latency. System users run ad-hoc queries sporadically throughout the week. Cost is a primary concern for the company, and a solution that does not require significant rework is needed. Which solution meets these requirements?

A. Create new Aurora Serverless DB clusters for development and reporting, then migrate to these new DB clusters.

B. Upgrade one of the DB clusters to a larger size, and consolidate development and reporting activities on this larger DB cluster.

C. Use existing DB clusters and stop/start the databases on a routine basis using scheduling tools.

D. Change the DB clusters to the burstable instance family.

A database specialist needs to review and optimize an Amazon DynamoDB table that is experiencing performance issues. A thorough investigation by the database specialist reveals that the partition key is causing hot partitions, so a new partition key is created. The database specialist must effectively apply this new partition key to all existing and new data. How can this solution be implemented?

A. Use Amazon EMR to export the data from the current DynamoDB table to Amazon S3. Then use Amazon EMR again to import the data from Amazon S3 into a new DynamoDB table with the new partition key.

B. Use AWS DMS to copy the data from the current DynamoDB table to Amazon S3. Then import the DynamoDB table to create a new DynamoDB table with the new partition key.

C. Use the AWS CLI to update the DynamoDB table and modify the partition key.

D. Use the AWS CLI to back up the DynamoDB table. Then use the restore-table-from-backup command and modify the partition key.

A retail company uses Amazon Redshift Spectrum to run complex analytical queries on objects that are stored in an Amazon S3 bucket. The objects are joined with multiple dimension tables that are stored in an Amazon Redshift database. The company uses the database to create monthly and quarterly aggregated reports. Users who attempt to run queries are reporting the following error message: `error: Spectrum Scan Error: Access throttled`. Which solution will resolve this error?

A. Check file sizes of fact tables in Amazon S3, and look for large files. Break up large files into smaller files of equal size between 100 MB and 1 GB

B. Reduce the number of queries that users can run in parallel.

C. Check file sizes of fact tables in Amazon S3, and look for small files. Merge the small files into larger files of at least 64 MB in size.

D. Review and optimize queries that submit a large aggregation step to Redshift Spectrum.

A company is using an Amazon Aurora MySQL database with Performance Insights enabled. A database specialist is checking Performance Insights and observes an alert message that starts with the following phrase: `Performance Insights is unable to collect SQL Digest statistics on new queries…` Which action will resolve this alert message?

A. Truncate the events_statements_summary_by_digest table.

B. Change the AWS Key Management Service (AWS KMS) key that is used to enable Performance Insights.

C. Set the value for the performance_schema parameter in the parameter group to 1.

D. Disable and reenable Performance Insights to be effective in the next maintenance window.

A large automobile company is migrating the database of a critical financial application to Amazon DynamoDB. The company's risk and compliance policy requires that every change in the database be recorded as a log entry for audits. The system is anticipating more than 500,000 log entries each minute. Log entries should be stored in batches of at least 100,000 records in each file in Apache Parquet format. How should a database specialist implement these requirements with DynamoDB?

A. Enable Amazon DynamoDB Streams on the table. Create an AWS Lambda function triggered by the stream. Write the log entries to an Amazon S3 object.

B. Create a backup plan in AWS Backup to back up the DynamoDB table once a day. Create an AWS Lambda function that restores the backup in another table and compares both tables for changes. Generate the log entries and write them to an Amazon S3 object.

C. Enable AWS CloudTrail logs on the table. Create an AWS Lambda function that reads the log files once an hour and filters DynamoDB API actions. Write the filtered log files to Amazon S3.

D. Enable Amazon DynamoDB Streams on the table. Create an AWS Lambda function triggered by the stream. Write the log entries to an Amazon Kinesis Data Firehose delivery stream with buffering and Amazon S3 as the destination.
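
The numbers in this question can be checked with a little arithmetic. The sketch below assumes a steady arrival rate and that a time-based buffer is the only thing that closes a file. Real delivery streams also flush on a size threshold, whichever limit is reached first, so treat the results as rough estimates.

```python
def min_buffer_seconds(records_per_minute: int, min_records_per_file: int) -> int:
    """Smallest whole number of seconds needed to accumulate the minimum batch."""
    # Integer ceiling of (min_records_per_file * 60) / records_per_minute.
    return (min_records_per_file * 60 + records_per_minute - 1) // records_per_minute


def records_per_file(records_per_minute: int, buffer_seconds: int) -> int:
    """Approximate records collected during one buffering interval."""
    return records_per_minute * buffer_seconds // 60


if __name__ == "__main__":
    rate = 500_000      # log entries per minute, from the question
    minimum = 100_000   # minimum records per file, from the question
    print(min_buffer_seconds(rate, minimum))  # seconds needed for one minimum-size batch
    print(records_per_file(rate, 60))         # records in a 60-second buffer
```

At 500,000 entries per minute, a batch of 100,000 records accumulates in about 12 seconds, so a 60-second buffer would hold roughly 500,000 records under these assumptions.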

A company migrated one of its business-critical database workloads to an Amazon Aurora Multi-AZ DB cluster. The company requires a very low RTO and needs to improve the application recovery time after database failovers. Which approach meets these requirements?

A. Set the max_connections parameter to 16,000 in the instance-level parameter group.

B. Modify the client connection timeout to 300 seconds.

C. Create an Amazon RDS Proxy database proxy and update client connections to point to the proxy endpoint.

D. Enable the query cache at the instance level.

A company stores session history for its users in an Amazon DynamoDB table. The company has a large user base and generates large amounts of session data. Teams analyze the session data for 1 week, and then the data is no longer needed. A database specialist needs to design an automated solution to purge session data that is more than 1 week old. Which strategy meets these requirements with the MOST operational efficiency?

A. Create an AWS Step Functions state machine with a DynamoDB DeleteItem operation that uses the ConditionExpression parameter to delete items older than a week. Create an Amazon EventBridge (Amazon CloudWatch Events) scheduled rule that runs the Step Functions state machine on a weekly basis.

B. Create an AWS Lambda function to delete items older than a week from the DynamoDB table. Create an Amazon EventBridge (Amazon CloudWatch Events) scheduled rule that triggers the Lambda function on a weekly basis.

C. Enable Amazon DynamoDB Streams on the table. Use a stream to invoke an AWS Lambda function to delete items older than a week from the DynamoDB table

D. Enable TTL on the DynamoDB table and set a Number data type as the TTL attribute. DynamoDB will automatically delete items that have a TTL that is less than the current time.
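
The TTL mechanism mentioned in option D relies on a Number attribute that holds an expiry time in Unix epoch seconds. A minimal sketch of how an application might stamp each session item is shown below. The attribute names `session_id`, `created_at`, and `expires_at` are hypothetical, and the item uses DynamoDB's low-level attribute-value format.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=7)  # session data is needed for 1 week


def session_item(session_id: str, created_at: datetime) -> dict:
    """Build an item whose TTL attribute is the creation time plus one week."""
    expires_at = int((created_at + RETENTION).timestamp())
    return {
        "session_id": {"S": session_id},
        "created_at": {"S": created_at.isoformat()},
        # TTL expects epoch seconds stored as a Number.
        "expires_at": {"N": str(expires_at)},
    }


def is_expired(item: dict, now: datetime) -> bool:
    """True if the item's TTL timestamp is earlier than `now`."""
    return int(item["expires_at"]["N"]) < int(now.timestamp())


if __name__ == "__main__":
    created = datetime(2024, 1, 1, tzinfo=timezone.utc)
    item = session_item("abc123", created)
    print(item["expires_at"]["N"])
    print(is_expired(item, created + timedelta(days=8)))
```

TTL deletion runs in the background, so an expired item can remain readable for some time after its timestamp passes. A check such as `is_expired` can filter those items on read.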

A company has a hybrid environment in which a VPC connects to an on-premises network through an AWS Site-to-Site VPN connection. The VPC contains an application that is hosted on Amazon EC2 instances. The EC2 instances run in private subnets behind an Application Load Balancer (ALB) that is associated with multiple public subnets. The EC2 instances need to securely access an Amazon DynamoDB table. Which solution will meet these requirements?

A. Use the internet gateway of the VPC to access the DynamoDB table. Use the ALB to route the traffic to the EC2 instances.

B. Add a NAT gateway in one of the public subnets of the VPC. Configure the security groups of the EC2 instances to access the DynamoDB table through the NAT gateway.

C. Use the Site-to-Site VPN connection to route all DynamoDB network traffic through the on-premises network infrastructure to access the EC2 instances.

D. Create a VPC endpoint for DynamoDB. Assign the endpoint to the route table of the private subnets that contain the EC2 instances.
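
For reference, a gateway VPC endpoint for DynamoDB is defined by the VPC, the regional service name, and the route tables that should receive the endpoint route. The sketch below builds the request parameters only. The VPC ID, Region, and route table IDs are hypothetical placeholders.

```python
def gateway_endpoint_request(vpc_id: str, region: str, route_table_ids: list) -> dict:
    """Build parameters for a DynamoDB gateway endpoint on the given route tables."""
    if not route_table_ids:
        raise ValueError("at least one route table is needed for the endpoint route")
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.dynamodb",
        # Route tables of the private subnets that host the instances.
        "RouteTableIds": list(route_table_ids),
    }


if __name__ == "__main__":
    params = gateway_endpoint_request("vpc-0abc1234", "us-east-1", ["rtb-0aaa1111", "rtb-0bbb2222"])
    print(params)
```

With boto3 installed and credentials configured, these parameters could be passed as `boto3.client("ec2").create_vpc_endpoint(**params)`.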

A company is deploying a solution in Amazon Aurora by migrating from an on-premises system. The IT department has established an AWS Direct Connect link from the company's data center. The company's Database Specialist has selected the option to require SSL/TLS for connectivity to prevent plaintext data from being sent over the network. The migration appears to be working successfully, and the data can be queried from a desktop machine. Two Data Analysts have been asked to query and validate the data in the new Aurora DB cluster. Both Analysts are unable to connect to Aurora. Their user names and passwords have been verified as valid and the Database Specialist can connect to the DB cluster using their accounts. The Database Specialist also verified that the security group configuration allows network traffic from all corporate IP addresses. What should the Database Specialist do to correct the Data Analysts' inability to connect?

A. Restart the DB cluster to apply the SSL change.

B. Instruct the Data Analysts to download the root certificate and use the SSL certificate on the connection string to connect.

C. Add explicit mappings between the Data Analysts’ IP addresses and the instance in the security group assigned to the DB cluster.

D. Modify the Data Analysts’ local client firewall to allow network traffic to AWS.

An online bookstore recently migrated its database from on-premises Oracle to Amazon Aurora PostgreSQL 13. The bookstore uses scheduled jobs to run customized SQL scripts to administer the Oracle database, running hours-long maintenance tasks, such as partition maintenance and statistics gathering. The bookstore's application team has reached out to a database specialist seeking an ideal replacement for scheduling jobs with Aurora PostgreSQL. What should the database specialist implement to meet these requirements with MINIMAL operational overhead?

A. Configure an Amazon EC2 instance to run on a schedule to initiate database maintenance jobs

B. Configure AWS Batch with AWS Step Functions to schedule long-running database maintenance tasks

C. Create an Amazon EventBridge (Amazon CloudWatch Events) rule with AWS Lambda that runs on a schedule to initiate database maintenance jobs

D. Turn on the pg_cron extension in the Aurora PostgreSQL database and schedule the database maintenance tasks by using the cron.schedule function
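
To make the `cron.schedule` function named in option D concrete, the sketch below generates the SQL statement for its two-argument form (a cron expression and a command). The table name `orders` and the schedule are hypothetical. The quoting helper assumes standard-conforming strings and is meant for illustration, not as a general SQL escaping routine.

```python
def sql_literal(value: str) -> str:
    """Quote a string as a SQL literal by doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def cron_schedule_sql(schedule: str, command: str) -> str:
    """Return a SELECT that registers `command` with pg_cron on `schedule`."""
    if len(schedule.split()) != 5:
        raise ValueError("expected a five-field cron expression")
    return f"SELECT cron.schedule({sql_literal(schedule)}, {sql_literal(command)});"


if __name__ == "__main__":
    # Hypothetical weekly statistics refresh, Sundays at 03:00.
    print(cron_schedule_sql("0 3 * * 0", "ANALYZE orders"))
```

The printed statement would be run in the database where the extension is installed, after `pg_cron` has been enabled for the cluster.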

A Database Specialist is creating Amazon DynamoDB tables, Amazon CloudWatch alarms, and associated infrastructure for an Application team using a development AWS account. The team wants a deployment method that will standardize the core solution components while managing environment-specific settings separately, and wants to minimize rework due to configuration errors. Which process should the Database Specialist recommend to meet these requirements?

A. Organize common and environmental-specific parameters hierarchically in the AWS Systems Manager Parameter Store, then reference the parameters dynamically from an AWS CloudFormation template. Deploy the CloudFormation stack using the environment name as a parameter.

B. Create a parameterized AWS CloudFormation template that builds the required objects. Keep separate environment parameter files in separate Amazon S3 buckets. Provide an AWS CLI command that deploys the CloudFormation stack directly referencing the appropriate parameter bucket.

C. Create a parameterized AWS CloudFormation template that builds the required objects. Import the template into the CloudFormation interface in the AWS Management Console. Make the required changes to the parameters and deploy the CloudFormation stack.

D. Create an AWS Lambda function that builds the required objects using an AWS SDK. Set the required parameter values in a test event in the Lambda console for each environment that the Application team can modify, as needed. Deploy the infrastructure by triggering the test event in the console.

A company wants to use AWS Organizations to create isolated accounts for different teams and functionality. The company’s database administrator needs to copy a DB instance from the main account in the us-east-1 Region to a new test account in the us-west-2 Region. The database administrator has already taken a snapshot of the encrypted Amazon RDS for PostgreSQL source DB instance in the main account. Which combination of steps must the database administrator take to copy the snapshot to the new account? (Choose three.)

A. Create a new AWS Key Management Service (AWS KMS) customer managed key in the main account in us-east-1. Replicate the key ID and key material to the test account in us-west-2.

B. Create a new AWS Key Management Service (AWS KMS) customer managed key in the main account in us-east-1. Copy the key to the test account in us-west-2.

C. Copy the snapshot of the source DB instance to us-west-2 by using the AWS Key Management Service (AWS KMS) customer managed key. Enable encryption on the new snapshot. Share the snapshot with the test account.

D. Copy the snapshot of the source DB instance to the test account in us-east-1. Switch to the test account and share the snapshot with us-west-2.

E. In the test account, copy the shared snapshot to create a final snapshot. Use the final snapshot to create a new RDS for PostgreSQL DB instance.

F. In the test account, copy the shared snapshot by using the copied AWS Key Management Service (AWS KMS) key to create a final encrypted snapshot. Use the final snapshot to create a new RDS for PostgreSQL DB instance.

A company recently launched a mobile app that has grown in popularity during the last week. The company started development in the cloud and did not initially follow security best practices during development of the mobile app. The mobile app gives customers the ability to use the platform anonymously. Platform architects use Amazon ElastiCache for Redis in a VPC to manage session affinity (sticky sessions) and cookies for customers. The company's security team now mandates encryption in transit and encryption at rest for all traffic. A database specialist is using the AWS CLI to comply with this mandate. Which combination of steps should the database specialist take to meet these requirements? (Choose three.)

A. Create a manual backup of the existing Redis replication group by using the create-snapshot command. Restore from the backup by using the create-replication-group command

B. Use the --transit-encryption-enabled parameter on the new Redis replication group

C. Use the --at-rest-encryption-enabled parameter on the existing Redis replication group

D. Use the --transit-encryption-enabled parameter on the existing Redis replication group

E. Use the --at-rest-encryption-enabled parameter on the new Redis replication group

F. Create a manual backup of the existing Redis replication group by using the CreateBackupSelection command. Restore from the backup by using the StartRestoreJob command
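
The CLI parameters in the options above have direct counterparts in the ElastiCache `CreateReplicationGroup` API. As a sketch, the function below assembles the request for a replication group that is seeded from a snapshot with both encryption settings turned on. The group ID, snapshot name, and description are hypothetical, and other settings such as the node type are left out for brevity.

```python
def encrypted_replication_group_request(group_id: str, snapshot_name: str, description: str) -> dict:
    """Build parameters for a new Redis replication group restored from a snapshot."""
    return {
        "ReplicationGroupId": group_id,
        "ReplicationGroupDescription": description,
        # Seed the new group with data from an existing manual backup.
        "SnapshotName": snapshot_name,
        "Engine": "redis",
        "TransitEncryptionEnabled": True,
        "AtRestEncryptionEnabled": True,
    }


if __name__ == "__main__":
    params = encrypted_replication_group_request(
        "sessions-encrypted", "sessions-manual-backup", "Session store with encryption"
    )
    print(params)
```

With boto3 available, the dictionary could be passed as `boto3.client("elasticache").create_replication_group(**params)`.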

A company is planning to use Amazon RDS for SQL Server for one of its critical applications. The company's security team requires that the users of the RDS for SQL Server DB instance are authenticated with on-premises Microsoft Active Directory credentials. Which combination of steps should a database specialist take to meet this requirement? (Choose three.)

A. Extend the on-premises Active Directory to AWS by using AD Connector.

B. Create an IAM user that uses the AmazonRDSDirectoryServiceAccess managed IAM policy.

C. Create a directory by using AWS Directory Service for Microsoft Active Directory.

D. Create an Active Directory domain controller on Amazon EC2.

E. Create an IAM role that uses the AmazonRDSDirectoryServiceAccess managed IAM policy.

F. Create a one-way forest trust from the AWS Directory Service for Microsoft Active Directory directory to the on-premises Active Directory.

A company wants to migrate its Microsoft SQL Server Enterprise Edition database instance from on-premises to AWS. A deep review is performed and the AWS Schema Conversion Tool (AWS SCT) provides options for running this workload on Amazon RDS for SQL Server Enterprise Edition, Amazon RDS for SQL Server Standard Edition, Amazon Aurora MySQL, and Amazon Aurora PostgreSQL. The company does not want to use its own SQL Server license and does not want to change from Microsoft SQL Server. What is the MOST cost-effective and operationally efficient solution?

A. Run SQL Server Enterprise Edition on Amazon EC2.

B. Run SQL Server Standard Edition on Amazon RDS.

C. Run SQL Server Enterprise Edition on Amazon RDS.

D. Run Amazon Aurora MySQL leveraging SQL Server on Linux compatibility libraries.

A database specialist needs to move a table from a database that is running on an Amazon Aurora PostgreSQL DB cluster into a new and distinct database cluster. The new table in the new database must be updated with any changes to the original table that happen while the migration is in progress. The original table contains a column to store data as large as 2 GB in the form of large binary objects (LOBs). A few records are large in size, but most of the LOB data is smaller than 32 KB. What is the FASTEST way to replicate all the data from the original table?

A. Use AWS Database Migration Service (AWS DMS) with ongoing replication in full LOB mode.

B. Take a snapshot of the database. Create a new DB instance by using the snapshot.

C. Use AWS Database Migration Service (AWS DMS) with ongoing replication in limited LOB mode.

D. Use AWS Database Migration Service (AWS DMS) with ongoing replication in inline LOB mode.

A database specialist is planning to migrate a MySQL database to Amazon Aurora. The database specialist wants to configure the primary DB cluster in the us-west-2 Region and the secondary DB cluster in the eu-west-1 Region. In the event of a disaster recovery scenario, the database must be available in eu-west-1 with an RPO of a few seconds. Which solution will meet these requirements?

A. Use Aurora MySQL with the primary DB cluster in us-west-2 and a cross-Region Aurora Replica in eu-west-1

B. Use Aurora MySQL with the primary DB cluster in us-west-2 and binlog-based external replication to eu-west-1

C. Use an Aurora MySQL global database with the primary DB cluster in us-west-2 and the secondary DB cluster in eu-west-1

D. Use Aurora MySQL with the primary DB cluster in us-west-2. Use AWS Database Migration Service (AWS DMS) change data capture (CDC) replication to the secondary DB cluster in eu-west-1

A company uses AWS Lambda functions in a private subnet in a VPC to run application logic. The Lambda functions must not have access to the public internet. Additionally, all data communication must remain within the private network. As part of a new requirement, the application logic needs access to an Amazon DynamoDB table. What is the MOST secure way to meet this new requirement?

A. Provision the DynamoDB table inside the same VPC that contains the Lambda functions

B. Create a gateway VPC endpoint for DynamoDB to provide access to the table

C. Use a network ACL to only allow access to the DynamoDB table from the VPC

D. Use a security group to only allow access to the DynamoDB table from the VPC

A company is using Amazon Neptune as the graph database for one of its products. The company's data science team accidentally created large amounts of temporary information during an ETL process. The Neptune DB cluster automatically increased the storage space to accommodate the new data, but the data science team deleted the unused information. What should a database specialist do to avoid unnecessary charges for the unused cluster volume space?

A. Take a snapshot of the cluster volume. Restore the snapshot in another cluster with a smaller volume size.

B. Use the AWS CLI to turn on automatic resizing of the cluster volume.

C. Export the cluster data into a new Neptune DB cluster.

D. Add a Neptune read replica to the cluster. Promote this replica as a new primary DB instance. Reset the storage space of the cluster.

A company is using Amazon Aurora with Aurora Replicas for read-only workload scaling. A Database Specialist needs to split up two read-only applications so each application always connects to a dedicated replica. The Database Specialist wants to implement load balancing and high availability for the read-only applications. Which solution meets these requirements?

A. Use a specific instance endpoint for each replica and add the instance endpoint to each read-only application connection string.

B. Use reader endpoints for both the read-only workload applications.

C. Use a reader endpoint for one read-only application and use an instance endpoint for the other read-only application.

D. Use custom endpoints for the two read-only applications.

A company has a Microsoft SQL Server 2017 Enterprise edition on Amazon RDS database with the Multi-AZ option turned on. Automatic backups are turned on and the retention period is set to 7 days. The company needs to add a read replica to the RDS DB instance. How should a database specialist achieve this task?

A. Turn off the Multi-AZ feature, add the read replica, and turn Multi-AZ back on again.

B. Set the backup retention period to 0, add the read replica, and set the backup retention period to 7 days again.

C. Restore a snapshot to a new RDS DB instance and add the DB instance as a replica to the original database.

D. Add the new read replica without making any other changes to the RDS database.

A company is running a two-tier ecommerce application in one AWS account. The application is backed by an Amazon RDS for MySQL Multi-AZ DB instance. A developer mistakenly deleted the DB instance in the production environment. The company restores the database, but this event results in hours of downtime and lost revenue. Which combination of changes would minimize the risk of this mistake occurring in the future? (Choose three.)

A. Grant least privilege to groups, IAM users, and roles.

B. Allow all users to restore a database from a backup.

C. Enable deletion protection on existing production DB instances.

D. Use an ACL policy to restrict users from DB instance deletion.

E. Enable AWS CloudTrail logging and Enhanced Monitoring.

A company has a heterogeneous six-node production Amazon Aurora DB cluster that handles online transaction processing (OLTP) for the core business and OLAP reports for the human resources department. To match compute resources to the use case, the company has decided to have the reporting workload for the human resources department be directed to two small nodes in the Aurora DB cluster, while every other workload goes to four large nodes in the same DB cluster. Which option would ensure that the correct nodes are always available for the appropriate workload while meeting these requirements?

A. Use the writer endpoint for OLTP and the reader endpoint for the OLAP reporting workload.

B. Use automatic scaling for the Aurora Replica to have the appropriate number of replicas for the desired workload.

C. Create additional readers to cater to the different scenarios.

D. Use custom endpoints to satisfy the different workloads.
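
An Aurora custom endpoint, as mentioned in option D, is defined by a cluster, an endpoint identifier, an endpoint type, and a list of member instances. The sketch below builds the request for an endpoint that groups two reporting instances. All identifiers are hypothetical placeholders.

```python
def custom_endpoint_request(cluster_id: str, endpoint_id: str, member_instance_ids: list) -> dict:
    """Build parameters for a reader-type custom endpoint with fixed members."""
    members = sorted(set(member_instance_ids))
    if not members:
        raise ValueError("a custom endpoint in this sketch needs at least one member")
    return {
        "DBClusterIdentifier": cluster_id,
        "DBClusterEndpointIdentifier": endpoint_id,
        "EndpointType": "READER",
        # Only these instances receive connections made through the endpoint.
        "StaticMembers": members,
    }


if __name__ == "__main__":
    params = custom_endpoint_request("prod-cluster", "hr-reporting", ["small-node-2", "small-node-1"])
    print(params)
```

With boto3 available, the dictionary could be passed as `boto3.client("rds").create_db_cluster_endpoint(**params)`.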

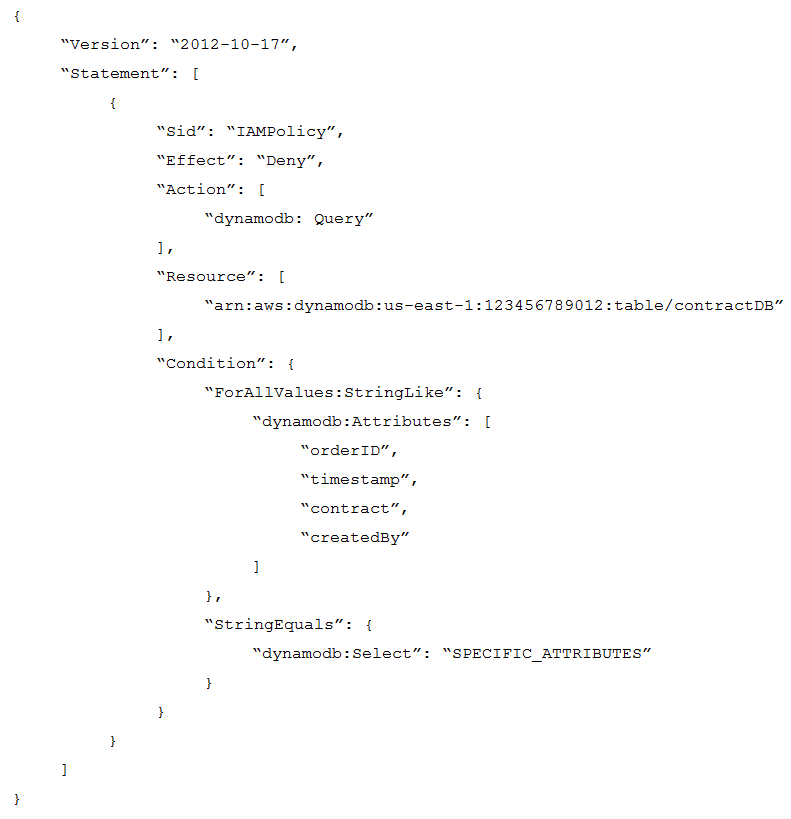

A company uses the Amazon DynamoDB table contractDB in us-east-1 for its contract system with the following schema:

- orderID (primary key)

- timestamp (sort key)

- contract (map)

- createdBy (string)

- customerEmail (string)

After a problem in production, the operations team has asked a database specialist to provide an IAM policy to read items from the database to debug the application. In addition, the developer is not allowed to access the value of the customerEmail field to stay compliant. Which IAM policy should the database specialist use to achieve these requirements?

A.

B.

C.

D.
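The policy text for the options is not reproduced here. As a general illustration of attribute-level restrictions in DynamoDB, the sketch below builds an IAM policy that uses the `dynamodb:Attributes` and `dynamodb:Select` condition keys to allow reads of every listed attribute except customerEmail. The account ID `123456789012` is a placeholder assumption, and the policy is one plausible shape, not necessarily the wording of any answer choice.

```python
import json


def build_read_policy(table_arn, allowed_attributes):
    """Build a read-only policy limited to the given attribute names."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:BatchGetItem",
                    "dynamodb:Query",
                    "dynamodb:Scan",
                ],
                "Resource": table_arn,
                "Condition": {
                    # Every requested attribute must be in the allowed list.
                    "ForAllValues:StringEquals": {
                        "dynamodb:Attributes": list(allowed_attributes)
                    },
                    # Callers must name attributes explicitly rather than request all of them.
                    "StringEqualsIfExists": {
                        "dynamodb:Select": "SPECIFIC_ATTRIBUTES"
                    },
                },
            }
        ],
    }


policy = build_read_policy(
    "arn:aws:dynamodb:us-east-1:123456789012:table/contractDB",  # placeholder account ID
    ["orderID", "timestamp", "contract", "createdBy"],
)
print(json.dumps(policy, indent=2))
```

Under a policy like this, a read must name its attributes (for example with a projection expression), since a request for all attributes would include customerEmail and fail the condition.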

A company has an on-premises SQL Server database. The users access the database using Active Directory authentication. The company successfully migrated its database to Amazon RDS for SQL Server. However, the company is concerned about user authentication in the AWS Cloud environment. Which solution should a database specialist provide for the user to authenticate?

A. Deploy Active Directory Federation Services (AD FS) on premises and configure it with an on-premises Active Directory. Set up delegation between the on-premises AD FS and AWS Security Token Service (AWS STS) to map user identities to a role using the AmazonRDSDirectoryServiceAccess managed IAM policy.

B. Establish a forest trust between the on-premises Active Directory and AWS Directory Service for Microsoft Active Directory. Use AWS SSO to configure an Active Directory user delegated to access the databases in RDS for SQL Server.

C. Use Active Directory Connector to redirect directory requests to the company’s on-premises Active Directory without caching any information in the cloud. Use the RDS master user credentials to connect to the DB instance and configure SQL Server logins and users from the Active Directory users and groups.

D. Establish a forest trust between the on-premises Active Directory and AWS Directory Service for Microsoft Active Directory. Ensure RDS for SQL Server is using mixed mode authentication. Use the RDS master user credentials to connect to the DB instance and configure SQL Server logins and users from the Active Directory users and groups.

To meet new data compliance requirements, a company needs to keep critical data durably stored and readily accessible for 7 years. Data that is more than 1 year old is considered archival data and must automatically be moved out of the Amazon Aurora MySQL DB cluster every week. On average, around 10 GB of new data is added to the database every month. A database specialist must choose the most operationally efficient solution to migrate the archival data to Amazon S3. Which solution meets these requirements?

A. Create a custom script that exports archival data from the DB cluster to Amazon S3 using a SQL view, then deletes the archival data from the DB cluster. Launch an Amazon EC2 instance with a weekly cron job to execute the custom script.

B. Configure an AWS Lambda function that exports archival data from the DB cluster to Amazon S3 using a SELECT INTO OUTFILE S3 statement, then deletes the archival data from the DB cluster. Schedule the Lambda function to run weekly using Amazon EventBridge (Amazon CloudWatch Events).

C. Configure two AWS Lambda functions: one that exports archival data from the DB cluster to Amazon S3 using the mysqldump utility, and another that deletes the archival data from the DB cluster. Schedule both Lambda functions to run weekly using Amazon EventBridge (Amazon CloudWatch Events).

D. Use AWS Database Migration Service (AWS DMS) to continually export the archival data from the DB cluster to Amazon S3. Configure an AWS Data Pipeline process to run weekly that executes a custom SQL script to delete the archival data from the DB cluster.
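To make the `SELECT INTO OUTFILE S3` statement mentioned in option B concrete, the sketch below builds an export statement and a matching delete statement for Aurora MySQL. The table name `orders`, the column `created_at`, and the bucket URI are hypothetical, and the code only constructs SQL text; running it against a cluster (for example from a scheduled function) is left out.

```python
from datetime import date


def build_archive_statements(table, ts_column, cutoff, s3_uri):
    """Return (export_sql, delete_sql) for rows older than the cutoff date.

    The cutoff is passed in as a literal date so that both statements
    select exactly the same rows. Table and column names are interpolated
    directly, so they should be trusted constants, never user input.
    """
    predicate = f"{ts_column} < '{cutoff.isoformat()}'"
    export_sql = (
        f"SELECT * FROM {table} WHERE {predicate} "
        f"INTO OUTFILE S3 '{s3_uri}' "
        "FIELDS TERMINATED BY ',' LINES TERMINATED BY '\\n' "
        "MANIFEST ON OVERWRITE ON"
    )
    delete_sql = f"DELETE FROM {table} WHERE {predicate}"
    return export_sql, delete_sql


export_sql, delete_sql = build_archive_statements(
    "orders",                                         # hypothetical table
    "created_at",                                     # hypothetical timestamp column
    date(2023, 1, 1),
    "s3://example-archive-bucket/orders/2023-01-01",  # hypothetical bucket
)
print(export_sql)
print(delete_sql)
```

The delete should run only after the export has succeeded, and the DB cluster needs an IAM role that permits writes to the bucket.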

A banking company recently launched an Amazon RDS for MySQL DB instance as part of a proof-of-concept project. A database specialist has configured automated database snapshots. As a part of routine testing, the database specialist noticed one day that the automated database snapshot was not created. Which of the following are possible reasons why the snapshot was not created? (Choose two.)

A. A copy of the RDS automated snapshot for this DB instance is in progress within the same AWS Region.

B. A copy of the RDS automated snapshot for this DB instance is in progress in a different AWS Region.

C. The RDS maintenance window is not configured.

D. The RDS DB instance is in the STORAGE_FULL state.

E. RDS event notifications have not been enabled.

An advertising company is developing a backend for a bidding platform. The company needs a cost-effective datastore solution that will accommodate a sudden increase in the volume of write transactions. The database also needs to make data changes available in a near real-time data stream. Which solution will meet these requirements?

A. Amazon Aurora MySQL Multi-AZ DB cluster

B. Amazon Keyspaces (for Apache Cassandra)

C. Amazon DynamoDB table with DynamoDB auto scaling

D. Amazon DocumentDB (with MongoDB compatibility) cluster with a replica instance in a second Availability Zone

An ecommerce company migrates an on-premises MongoDB database to Amazon DocumentDB (with MongoDB compatibility). After the migration, a database specialist realizes that encryption at rest has not been turned on for the Amazon DocumentDB cluster. What should the database specialist do to enable encryption at rest for the Amazon DocumentDB cluster?

A. Take a snapshot of the Amazon DocumentDB cluster. Restore the unencrypted snapshot as a new cluster while specifying the encryption option, and provide an AWS Key Management Service (AWS KMS) key.

B. Enable encryption for the Amazon DocumentDB cluster on the AWS Management Console. Reboot the cluster.

C. Modify the Amazon DocumentDB cluster by using the modify-db-cluster command with the --storage-encrypted parameter set to true.

D. Add a new encrypted instance to the Amazon DocumentDB cluster, and then delete an unencrypted instance from the cluster. Repeat until all instances are encrypted.

A company has a 12-node Amazon Aurora MySQL DB cluster. The company wants to use three specific Aurora Replicas to handle the workload from one of its read-only applications. Which solution will meet this requirement with the LEAST operational overhead?

A. Use CNAMEs to set up DNS aliases for the three Aurora Replicas.

B. Configure an Aurora custom endpoint for the three Aurora Replicas.

C. Use the cluster reader endpoint. Configure the failover priority of the three Aurora Replicas.

D. Use the specific instance endpoints for each of the three Aurora Replicas.
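As background for the custom endpoint option, the sketch below shows how a reader-type custom endpoint with a fixed member list could be requested through the RDS `CreateDBClusterEndpoint` API. `RecordingClient` is a hypothetical stand-in for a boto3 RDS client, and the cluster, endpoint, and replica identifiers are invented for illustration.

```python
class RecordingClient:
    """Hypothetical stand-in for a boto3 RDS client; records calls instead of calling AWS."""

    def __init__(self):
        self.calls = []

    def create_db_cluster_endpoint(self, **kwargs):
        self.calls.append(kwargs)
        return kwargs


def create_reporting_endpoint(rds_client, cluster_id, endpoint_id, replica_ids):
    """Create a custom reader endpoint that routes only to the given replicas."""
    return rds_client.create_db_cluster_endpoint(
        DBClusterIdentifier=cluster_id,
        DBClusterEndpointIdentifier=endpoint_id,
        EndpointType="READER",
        StaticMembers=list(replica_ids),
    )


# With real credentials, rds_client would be boto3.client("rds").
client = RecordingClient()
request = create_reporting_endpoint(
    client,
    "example-aurora-cluster",              # hypothetical cluster name
    "readonly-app",                        # hypothetical endpoint name
    ["replica-1", "replica-2", "replica-3"],
)
print(request)
```

The application then connects to the single endpoint DNS name, and Aurora distributes connections across the listed replicas.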

A company is running Amazon RDS for MySQL for its workloads. There is downtime when AWS operating system patches are applied during the Amazon RDS-specified maintenance window. What is the MOST cost-effective action that should be taken to avoid downtime?

A. Migrate the workloads from Amazon RDS for MySQL to Amazon DynamoDB

B. Enable cross-Region read replicas and direct read traffic to them when Amazon RDS is down

C. Enable a read replica and direct read traffic to it when Amazon RDS is down

D. Enable an Amazon RDS for MySQL Multi-AZ configuration

A Database Specialist is working with a company to launch a new website built on Amazon Aurora with several Aurora Replicas. This new website will replace an on-premises website connected to a legacy relational database. Due to stability issues in the legacy database, the company would like to test the resiliency of Aurora. Which action can the Database Specialist take to test the resiliency of the Aurora DB cluster?

A. Stop the DB cluster and analyze how the website responds

B. Use Aurora fault injection to crash the master DB instance

C. Remove the DB cluster endpoint to simulate a master DB instance failure

D. Use Aurora Backtrack to crash the DB cluster

A Database Specialist is migrating a 2 TB Amazon RDS for Oracle DB instance to an RDS for PostgreSQL DB instance using AWS DMS. The source RDS Oracle DB instance is in a VPC in the us-east-1 Region. The target RDS for PostgreSQL DB instance is in a VPC in the us-west-2 Region. Where should the AWS DMS replication instance be placed for the MOST optimal performance?

A. In the same Region and VPC of the source DB instance

B. In the same Region and VPC as the target DB instance

C. In the same VPC and Availability Zone as the target DB instance

D. In the same VPC and Availability Zone as the source DB instance

A company's development team needs to have production data restored in a staging AWS account. The production database is running on an Amazon RDS for PostgreSQL Multi-AZ DB instance, which has AWS KMS encryption enabled using the default KMS key. A database specialist planned to share the most recent automated snapshot with the staging account, but discovered that the option to share snapshots is disabled in the AWS Management Console. What should the database specialist do to resolve this?

A. Disable automated backups in the DB instance. Share both the automated snapshot and the default KMS key with the staging account. Restore the snapshot in the staging account and enable automated backups.

B. Copy the automated snapshot specifying a custom KMS encryption key. Share both the copied snapshot and the custom KMS encryption key with the staging account. Restore the snapshot to the staging account within the same Region.

C. Modify the DB instance to use a custom KMS encryption key. Share both the automated snapshot and the custom KMS encryption key with the staging account. Restore the snapshot in the staging account.

D. Copy the automated snapshot while keeping the default KMS key. Share both the snapshot and the default KMS key with the staging account. Restore the snapshot in the staging account.
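The copy-then-share flow described in the options can be sketched with two RDS API calls: `CopyDBSnapshot` with a `KmsKeyId`, followed by `ModifyDBSnapshotAttribute` on the `restore` attribute. `RecordingClient` is a hypothetical stand-in for a boto3 RDS client, and all identifiers, the key alias, and the account ID are placeholders. Granting the other account access to the KMS key itself happens in the key policy and is not shown.

```python
class RecordingClient:
    """Hypothetical stand-in for a boto3 RDS client; records calls instead of calling AWS."""

    def __init__(self):
        self.calls = []

    def copy_db_snapshot(self, **kwargs):
        self.calls.append(("copy_db_snapshot", kwargs))

    def modify_db_snapshot_attribute(self, **kwargs):
        self.calls.append(("modify_db_snapshot_attribute", kwargs))


def copy_and_share_snapshot(rds_client, source_id, target_id, kms_key_id, account_id):
    """Re-encrypt a snapshot copy with a customer managed key, then share the copy."""
    rds_client.copy_db_snapshot(
        SourceDBSnapshotIdentifier=source_id,
        TargetDBSnapshotIdentifier=target_id,
        KmsKeyId=kms_key_id,
    )
    # In a real run, wait until the copy is available before sharing it.
    rds_client.modify_db_snapshot_attribute(
        DBSnapshotIdentifier=target_id,
        AttributeName="restore",
        ValuesToAdd=[account_id],
    )


# With real credentials, rds_client would be boto3.client("rds").
client = RecordingClient()
copy_and_share_snapshot(
    client,
    "rds:prod-postgres-2024-01-01-00-00",  # hypothetical automated snapshot name
    "prod-postgres-shared-copy",           # hypothetical copy name
    "alias/example-shared-key",            # hypothetical customer managed key
    "111122223333",                        # placeholder staging account ID
)
print([name for name, _ in client.calls])
```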

After restoring an Amazon RDS snapshot from 3 days ago, a company's Development team cannot connect to the restored RDS DB instance. What is the likely cause of this problem?

A. The restored DB instance does not have Enhanced Monitoring enabled

B. The production DB instance is using a custom parameter group

C. The restored DB instance is using the default security group

D. The production DB instance is using a custom option group

A global company is developing an application across multiple AWS Regions. The company needs a database solution with low latency in each Region and automatic disaster recovery. The database must be deployed in an active-active configuration with automatic data synchronization between Regions. Which solution will meet these requirements with the LOWEST latency?

A. Amazon RDS with cross-Region read replicas

B. Amazon DynamoDB global tables

C. Amazon Aurora global database

D. Amazon Athena and Amazon S3 with S3 Cross Region Replication

A Database Specialist has migrated an on-premises Oracle database to Amazon Aurora PostgreSQL. The schema and the data have been migrated successfully. The on-premises database server was also being used to run database maintenance cron jobs written in Python to perform tasks including data purging and generating data exports. The logs for these jobs show that, most of the time, the jobs completed within 5 minutes, but a few jobs took up to 10 minutes to complete. These maintenance jobs need to be set up for Aurora PostgreSQL. How can the Database Specialist schedule these jobs so the setup requires minimal maintenance and provides high availability?

A. Create cron jobs on an Amazon EC2 instance to run the maintenance jobs following the required schedule.

B. Connect to the Aurora host and create cron jobs to run the maintenance jobs following the required schedule.

C. Create AWS Lambda functions to run the maintenance jobs and schedule them with Amazon CloudWatch Events.

D. Create the maintenance job using the Amazon CloudWatch job scheduling plugin.

A company uses an Amazon RDS for PostgreSQL database in the us-east-2 Region. The company wants to have a copy of the database available in the us-west-2 Region as part of a new disaster recovery strategy. A database architect needs to create the new database. There can be little to no downtime to the source database. The database architect has decided to use AWS Database Migration Service (AWS DMS) to replicate the database across Regions. The database architect will use full load mode and then will switch to change data capture (CDC) mode. Which parameters must the database architect configure to support CDC mode for the RDS for PostgreSQL database? (Choose three.)

A. Set wal_level = logical.

B. Set wal_level = replica.

C. Set max_replication_slots to 1 or more, depending on the number of DMS tasks.

D. Set max_replication_slots to 0 to support dynamic allocation of slots.

E. Set wal_sender_timeout to 20,000 milliseconds.

F. Set wal_sender_timeout to 5,000 milliseconds.
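The parameters in this question can be checked mechanically. The sketch below validates a dictionary of PostgreSQL settings against the kind of prerequisites DMS documents for CDC: logical WAL level, at least one replication slot, and a sender timeout that is not too short. The 10,000 ms minimum is an assumption that should be confirmed against the current AWS DMS documentation, and `check_cdc_settings` is a hypothetical helper, not part of any AWS SDK.

```python
# Assumed minimum; verify against the AWS DMS documentation before relying on it.
MIN_WAL_SENDER_TIMEOUT_MS = 10_000


def check_cdc_settings(params):
    """Return a list of problems that would block CDC; an empty list means OK."""
    problems = []

    if params.get("wal_level") != "logical":
        problems.append("wal_level must be 'logical' for logical decoding")

    if int(params.get("max_replication_slots", 0)) < 1:
        problems.append("max_replication_slots must be at least 1")

    # PostgreSQL's default is 60000 ms; 0 disables the timeout entirely.
    timeout_ms = int(params.get("wal_sender_timeout", 60_000))
    if timeout_ms != 0 and timeout_ms < MIN_WAL_SENDER_TIMEOUT_MS:
        problems.append(
            f"wal_sender_timeout of {timeout_ms} ms is below the assumed minimum"
        )

    return problems


good = check_cdc_settings(
    {"wal_level": "logical", "max_replication_slots": "2", "wal_sender_timeout": "20000"}
)
bad = check_cdc_settings(
    {"wal_level": "replica", "max_replication_slots": "0", "wal_sender_timeout": "5000"}
)
print(good)
print(bad)
```

On Amazon RDS these values are set through a DB parameter group rather than by editing postgresql.conf directly.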

A company runs a MySQL database for its ecommerce application on a single Amazon RDS DB instance. Application purchases are automatically saved to the database, which causes intensive writes. Company employees frequently generate purchase reports. The company needs to improve database performance and reduce downtime due to patching for upgrades. Which approach will meet these requirements with the LEAST amount of operational overhead?

A. Enable a Multi-AZ deployment of the RDS for MySQL DB instance, and enable Memcached in the MySQL option group.

B. Enable a Multi-AZ deployment of the RDS for MySQL DB instance, and set up replication to a MySQL DB instance running on Amazon EC2.

C. Enable a Multi-AZ deployment of the RDS for MySQL DB instance, and add a read replica.

D. Add a read replica and promote it to an Amazon Aurora MySQL DB cluster master. Then enable Amazon Aurora Serverless.

A company is building a new web platform where user requests trigger an AWS Lambda function that performs an insert into an Amazon Aurora MySQL DB cluster. Initial tests with fewer than 10 users on the new platform yielded successful execution and fast response times. However, upon more extensive tests with the actual target of 3,000 concurrent users, Lambda functions are unable to connect to the DB cluster and receive "too many connections" errors. Which of the following will resolve this issue?

A. Edit the my.cnf file for the DB cluster to increase max_connections

B. Increase the instance size of the DB cluster

C. Change the DB cluster to Multi-AZ

D. Increase the number of Aurora Replicas

A social media company is using Amazon DynamoDB to store user profile data and user activity data. Developers are reading and writing the data, causing the size of the tables to grow significantly. Developers have started to face performance bottlenecks with the tables. Which solution should a database specialist recommend to read items the FASTEST without consuming all the provisioned throughput for the tables?

A. Use the Scan API operation in parallel with many workers to read all the items. Use the Query API operation to read multiple items that have a specific partition key and sort key. Use the GetItem API operation to read a single item.

B. Use the Scan API operation with a filter expression that allows multiple items to be read. Use the Query API operation to read multiple items that have a specific partition key and sort key. Use the GetItem API operation to read a single item.

C. Use the Scan API operation with a filter expression that allows multiple items to be read. Use the Query API operation to read a single item that has a specific primary key. Use the BatchGetItem API operation to read multiple items.

D. Use the Scan API operation in parallel with many workers to read all the items. Use the Query API operation to read a single item that has a specific primary key. Use the BatchGetItem API operation to read multiple items.

An IT consulting company wants to reduce costs when operating its development environment databases. The company's workflow creates multiple Amazon Aurora MySQL DB clusters for each development group. The Aurora DB clusters are only used for 8 hours a day. The DB clusters can then be deleted at the end of the development cycle, which lasts 2 weeks. Which of the following provides the MOST cost-effective solution?

A. Use AWS CloudFormation templates. Deploy a stack with the DB cluster for each development group. Delete the stack at the end of the development cycle.

B. Use the Aurora DB cloning feature. Deploy a single development and test Aurora DB instance, and create clone instances for the development groups. Delete the clones at the end of the development cycle.

C. Use Aurora Replicas. From the master automatic pause compute capacity option, create replicas for each development group, and promote each replica to master. Delete the replicas at the end of the development cycle.

D. Use Aurora Serverless. Restore current Aurora snapshot and deploy to a serverless cluster for each development group. Enable the option to pause the compute capacity on the cluster and set an appropriate timeout.

Our question sets are updated regularly to ensure they stay aligned with the latest exam objectives—so be sure to visit often!

Good luck with your DBS-C01 certification journey!