AI-100 Practice Test Free – 50 Real Exam Questions to Boost Your Confidence

Preparing for the AI-100 exam? Start with our AI-100 Practice Test Free – a set of 50 high-quality, exam-style questions crafted to help you assess your knowledge and improve your chances of passing on the first try.

Taking a free AI-100 practice test is one of the smartest ways to:

- Get familiar with the real exam format and question types

- Evaluate your strengths and spot knowledge gaps

- Gain the confidence you need to succeed on exam day

Below, you will find 50 free AI-100 practice questions to help you prepare for the exam. These questions are designed to reflect the real exam structure and difficulty level.

You are designing an AI application that will perform real-time processing by using Microsoft Azure Stream Analytics. You need to identify the valid outputs of a Stream Analytics job. What are three possible outputs? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. A Hive table in Azure HDInsight

B. Azure SQL Database

C. Azure Cosmos DB

D. Azure Blob storage

E. Azure Redis Cache

Your company has deployed 1,000 Internet-connected sensors for an AI application. The sensors generate large amounts of new data on an hourly basis. The data generated by the sensors is currently stored on an on-premises server. You must meet the following requirements: ✑ Move the data to Azure so that you can perform advanced analytics on the data. ✑ Ensure data persistence. ✑ Keep costs at a minimum. Which of the following actions should you take?

A. Make use of Azure Blob storage

B. Make use of Azure Cosmos DB

C. Make use of Azure Databricks

D. Make use of Azure Table storage

You need to build a reputation monitoring solution that reviews Twitter activity about your company. The solution must identify negative tweets and tweets that contain inappropriate images. You plan to use Azure Logic Apps to build the solution. Which two additional Azure services should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Computer Vision

B. Azure Blueprint

C. Content Moderator

D. Text Analytics

E. Azure Machine Learning Service

F. Form Recognizer

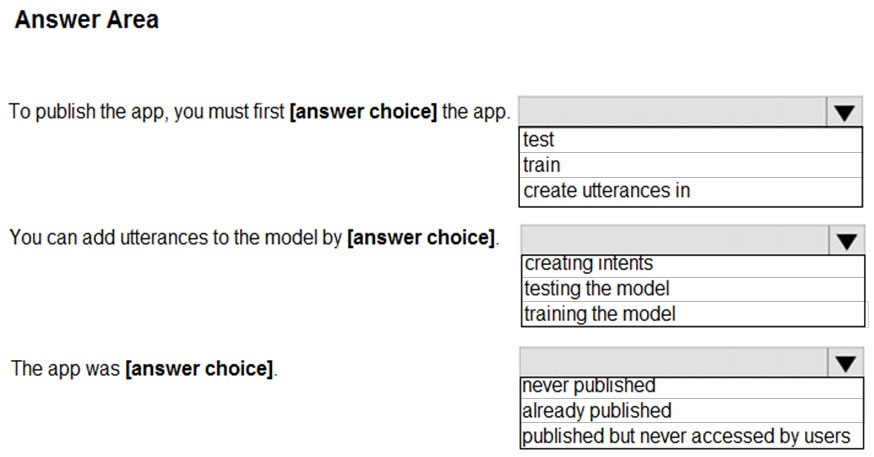

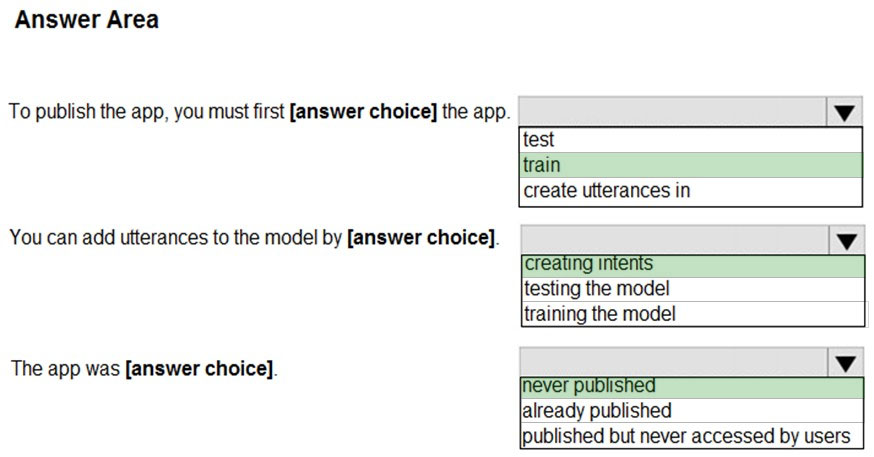

HOTSPOT - You have an app that uses the Language Understanding (LUIS) API as shown in the following exhibit. Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic. NOTE: Each correct selection is worth one point. Hot Area:

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You have an Azure SQL database, an Azure Data Lake Storage Gen2 account, and an API developed by using Azure Machine Learning Studio. You need to ingest data once daily from the database, score each row by using the API, and write the data to the storage account. Solution: You create an Azure Data Factory pipeline that contains the Machine Learning Batch Execution activity. Does this meet the goal?

A. Yes

B. No

You are developing a Microsoft Bot Framework application. The application consumes structured NoSQL data that must be stored in the cloud. You implement Azure Blob storage for the application. You want access to the blob store to be controlled by using a role. You implement Azure Active Directory (Azure AD) integration on the storage account. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

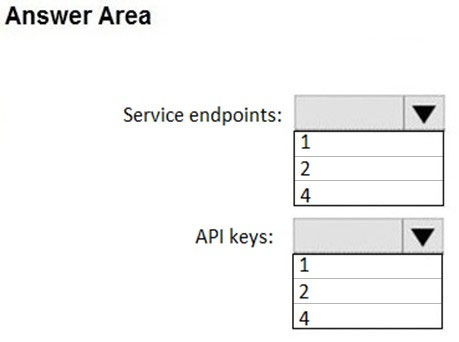

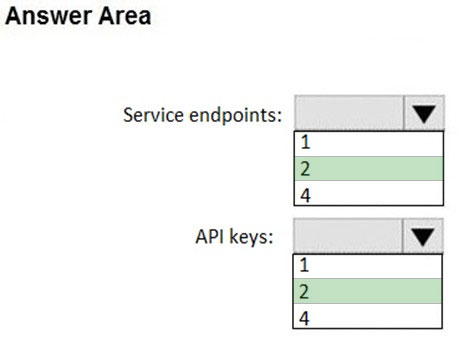

HOTSPOT - You plan to deploy the Text Analytics and Computer Vision services. The Azure Cognitive Services will be deployed to the West US and East Europe Azure regions. You need to identify the minimum number of service endpoints and API keys required for the planned deployment. What should you identify? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

HOTSPOT -

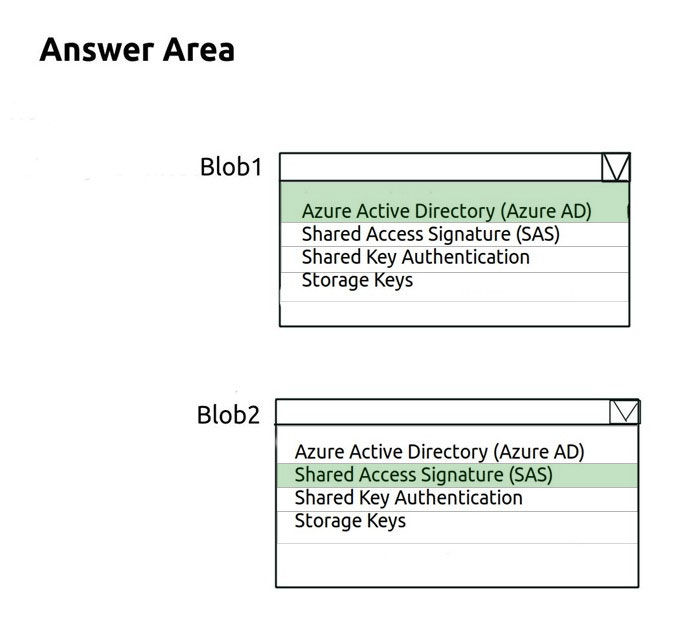

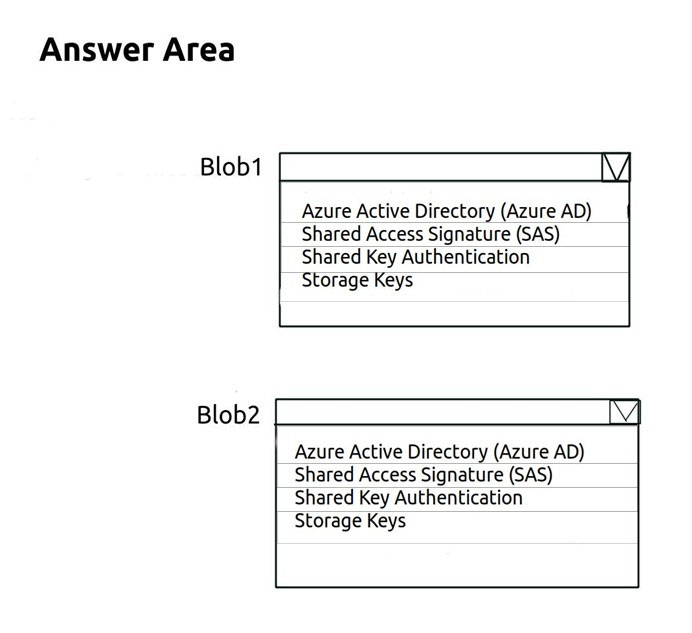

You plan to deploy an application that will perform image recognition. The application will store image data in two Azure Blob storage stores named Blob1 and Blob2.

You need to recommend a security solution that meets the following requirements:

✑ Access to Blob1 must be controlled by using a role.

✑ Access to Blob2 must be time-limited and constrained to specific operations.

What should you recommend using to control access to each blob store? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

You have an existing Language Understanding (LUIS) model for an internal bot. You need to recommend a solution to add meeting reminder functionality to the bot by using a prebuilt model. The solution must minimize the size of the model. Which component of LUIS should you recommend?

A. domain

B. intents

C. entities

You are designing an AI solution that will analyze millions of pictures by using an Azure HDInsight Hadoop cluster. You need to recommend a solution for storing the pictures. The solution must minimize costs. Which storage solution should you recommend?

A. Azure Table storage

B. Azure File Storage

C. Azure Data Lake Storage Gen2

D. Azure Data Lake Storage Gen1

Your company plans to create a mobile app that will be used by employees to query the employee handbook. You need to ensure that the employees can query the handbook by typing or by using speech. Which core component should you use for the app?

A. Language Understanding (LUIS)

B. QnA Maker

C. Text Analytics

D. Azure Search

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You are developing an application that uses an Azure Kubernetes Service (AKS) cluster. You are troubleshooting a node issue. You need to connect to an AKS node by using SSH. Solution: You add an SSH key to the node, and then you create an SSH connection. Does this meet the goal?

A. Yes

B. No

You are developing a Microsoft Bot Framework app that consumes structured NoSQL data. The app has the following data storage requirements: Data must be stored in Azure. Data persistence must be ensured. You want to keep costs at a minimum. Which of the following actions should you take?

A. Make use of Azure Blob storage

B. Make use of Azure Cosmos DB

C. Make use of Azure Databricks

D. Make use of Azure Table storage

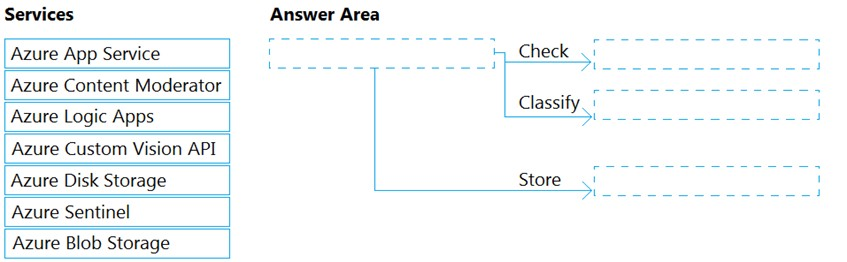

DRAG DROP - You are developing an application for photo classification. Users of the application will include minors. The users will upload photos to the application. The photos will be stored for model training purposes. All the photos must be considered appropriate for minors. You need to recommend an architecture for the application. Which Azure services should you recommend using in the architecture? To answer, drag the appropriate services to the correct targets. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Select and Place:

You plan to design an application that will use data from Azure Data Lake and perform sentiment analysis by using Azure Machine Learning algorithms. The developers of the application use a mix of Windows- and Linux-based environments. The developers contribute to shared GitHub repositories. You need all the developers to use the same tool to develop the application. What is the best tool to use? More than one answer choice may achieve the goal.

A. Microsoft Visual Studio Code

B. Azure Notebooks

C. Azure Machine Learning Studio

D. Microsoft Visual Studio

Your company develops an API application that is orchestrated by using Kubernetes. You need to deploy the application. Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Create a Kubernetes cluster.

B. Create an Azure Container Registry instance.

C. Create a container image file.

D. Create a Web App for Containers.

E. Create an Azure container instance.

Your company plans to monitor Twitter hashtags, and then to build a graph of connected people and places that contains the associated sentiment. The monitored hashtags use several languages, but the graph will be displayed in English. You need to recommend the required Azure Cognitive Services endpoints for the planned graph. Which Cognitive Services endpoints should you recommend?

A. Language Detection, Content Moderator, and Key Phrase Extraction

B. Translator Text, Content Moderator, and Key Phrase Extraction

C. Language Detection, Sentiment Analysis, and Key Phrase Extraction

D. Translator Text, Sentiment Analysis, and Named Entity Recognition

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You have an Azure SQL database, an Azure Data Lake Storage Gen2 account, and an API developed by using Azure Machine Learning Studio. You need to ingest data once daily from the database, score each row by using the API, and write the data to the storage account. Solution: You create a scheduled Jupyter Notebook in Azure Databricks. Does this meet the goal?

A. Yes

B. No

You are using Azure Cognitive Services to create an interactive AI application that will be deployed for a world-wide audience. You want the app to support multiple languages, including English, French, Spanish, Portuguese, and German. Which of the following actions should you take?

A. Make use of Text Analytics.

B. Make use of Content Moderator.

C. Make use of QnA Maker.

D. Make use of Language API.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You have an app named App1 that uses the Face API. App1 contains several PersonGroup objects. You discover that a PersonGroup object for an individual named Ben Smith cannot accept additional entries. The PersonGroup object for Ben Smith contains 10,000 entries. You need to ensure that additional entries can be added to the PersonGroup object for Ben Smith. The solution must ensure that Ben Smith can be identified by all the entries. Solution: You create a second PersonGroup object for Ben Smith. Does this meet the goal?

A. Yes

B. No

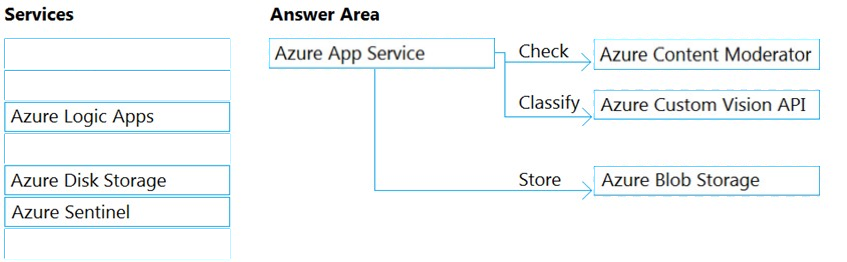

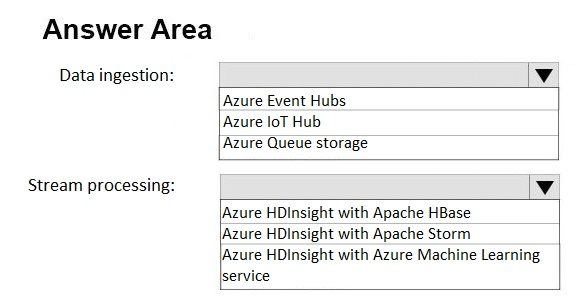

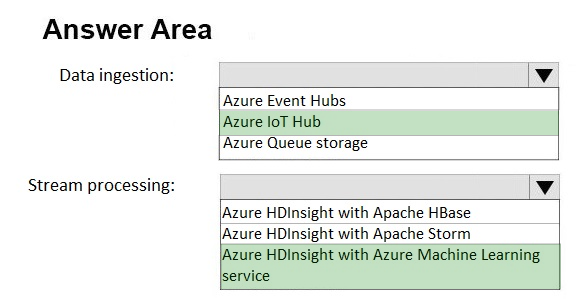

HOTSPOT - You are developing an application that will perform clickstream analysis. The application will ingest and analyze millions of messages in real time. You need to ensure that communication between the application and devices is bidirectional. What should you use for data ingestion and stream processing? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

Your company uses an internal blog to share news with employees. You use the Translator Text API to translate the text in the blog from English to several other languages used by the employees. Several employees report that the translations are often inaccurate. You need to improve the accuracy of the translations. What should you add to the translation solution?

A. Text Analytics

B. Language Understanding (LUIS)

C. Azure Media Services

D. Custom Translator

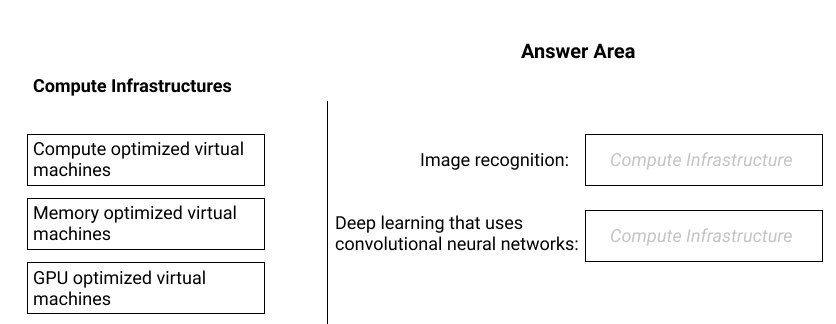

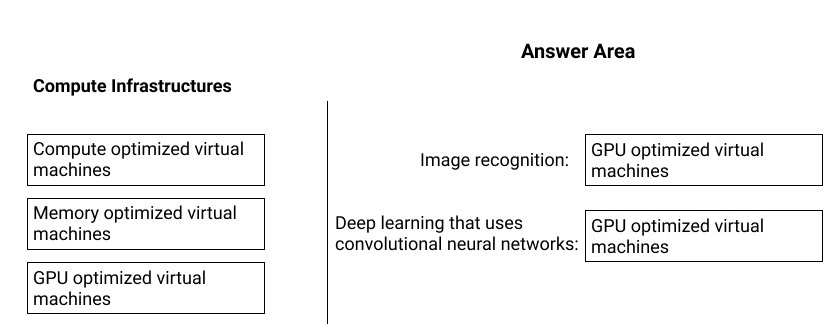

DRAG DROP - You are designing an Azure Batch AI solution that will be used to train many different Azure Machine Learning models. The solution will perform the following: ✑ Image recognition ✑ Deep learning that uses convolutional neural networks. You need to select a compute infrastructure for each model. The solution must minimize the processing time. What should you use for each model? To answer, drag the appropriate compute infrastructures to the correct models. Each compute infrastructure may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Select and Place:

You have an app that records meetings by using speech-to-text capabilities from the Speech Services API. You discover that when action items are listed at the end of each meeting, the app transcribes the text inaccurately when industry terms are used. You need to improve the accuracy of the meeting records. What should you do?

A. Add a phrase list

B. Create a custom wake word

C. Parse the text by using the Language Understanding (LUIS) API

D. Train a custom model by using Custom Translator

You are designing a Computer Vision AI application. You need to recommend a deployment solution for the application. The solution must ensure that costs scale linearly without any upfront costs. What should you recommend?

A. a containerized Computer Vision API on Azure Kubernetes Service (AKS) that has autoscaling configured

B. the Computer Vision API as a single resource

C. an Azure Container Service

D. a containerized Computer Vision API on Azure Kubernetes Service (AKS) that has virtual nodes configured

You are developing the workflow for an Azure Machine Learning solution. The solution must retrieve data from the following on-premises sources: ✑ Windows Server 2016 file servers ✑ Microsoft SQL Server databases ✑ Oracle databases Which of the following actions should you take?

A. Make use of Azure Data Factory to retrieve the data.

B. Make use of Azure Databricks to retrieve the data.

C. Make use of Azure Stream Analytics to retrieve the data.

D. Make use of Azure Synapse Analytics to retrieve the data.

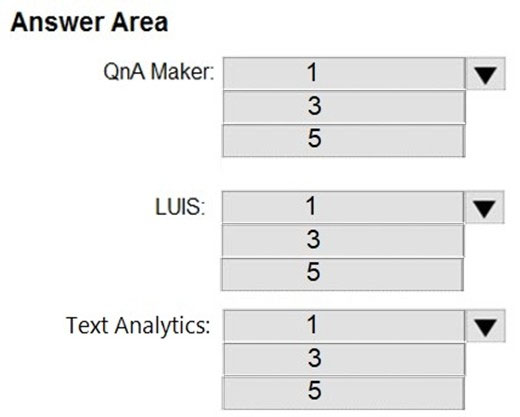

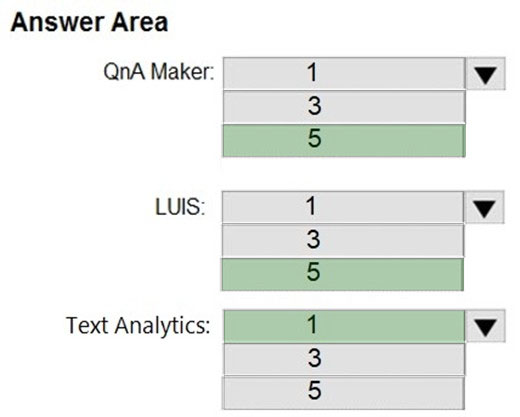

HOTSPOT - You plan to create a bot that will support five languages. The bot will be used by users located in three different countries. The bot will answer common customer questions. The bot will use Language Understanding (LUIS) to identify which skill to use and to detect the language of the customer. You need to identify the minimum number of Azure resources that must be created for the planned bot. How many QnA Maker, LUIS and Text Analytics instances should you create? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

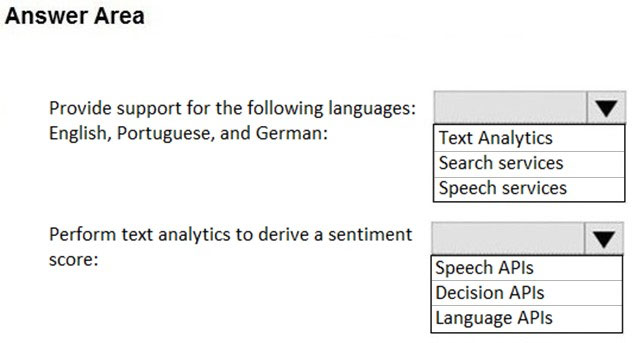

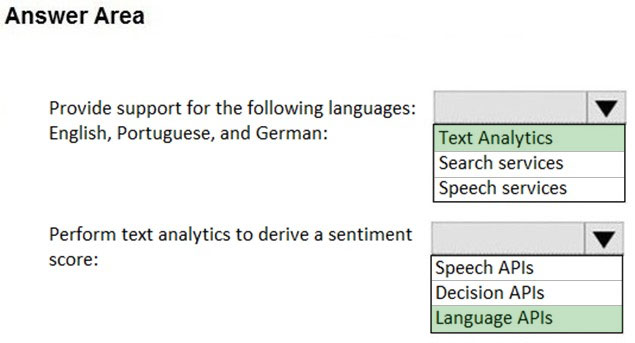

HOTSPOT - You plan to use Azure Cognitive Services to provide the development team at your company with the ability to create intelligent apps without having direct AI or data science skills. The company identifies the following requirements for the planned Cognitive Services deployment: ✑ Provide support for the following languages: English, Portuguese, and German. ✑ Perform text analytics to derive a sentiment score. Which Cognitive Services service should you deploy for each requirement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You plan to deploy two AI applications named AI1 and AI2. The data for the applications will be stored in a relational database. You need to ensure that the users of AI1 and AI2 can see only data in each user's respective geographic region. The solution must be enforced at the database level by using row-level security. Which database solution should you use to store the application data?

A. Microsoft SQL Server on a Microsoft Azure virtual machine

B. Microsoft Azure Database for MySQL

C. Microsoft Azure Data Lake Store

D. Microsoft Azure Cosmos DB

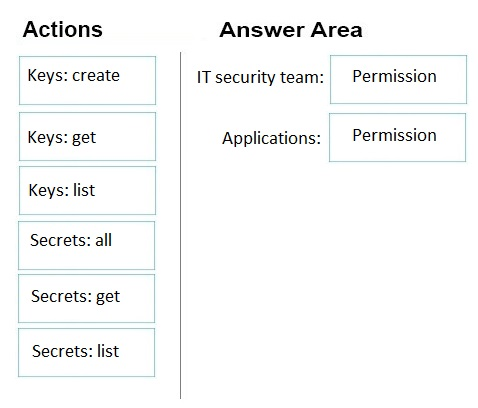

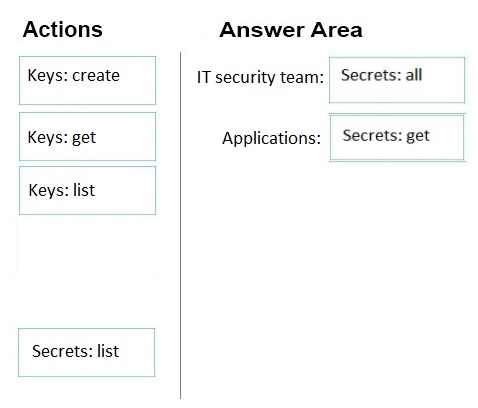

DRAG DROP - You use an Azure key vault to store credentials for several Azure Machine Learning applications. You need to configure the key vault to meet the following requirements: ✑ Ensure that the IT security team can add new passwords and periodically change the passwords. ✑ Ensure that the applications can securely retrieve the passwords for the applications. ✑ Use the principle of least privilege. Which permissions should you grant? To answer, drag the appropriate permissions to the correct targets. Each permission may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Select and Place:

You are developing an AI application for your company. The application will use Microsoft Azure Stream Analytics. You need to save the outputs from the Stream Analytics workflows to the cloud. Which of the following actions should you take?

A. Make use of a Hive table in Azure HDInsight

B. Make use of Azure Cosmos DB

C. Make use of Azure File storage

D. Make use of Azure Table storage

You are developing an app that will analyze sensitive data from global users. Your app must adhere to the following compliance policies: ✑ The app must not store data in the cloud. ✑ The app must not use services in the cloud to process the data. Which of the following actions should you take?

A. Make use of Azure Machine Learning Studio

B. Make use of Docker containers for the Text Analytics

C. Make use of a Text Analytics container deployed to Azure Kubernetes Service

D. Make use of Microsoft Machine Learning (MML) for Apache Spark

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment. You need to monitor the compute performance of the models. Solution: You enable Model data collection. Does this meet the goal?

A. Yes

B. No

You are developing a Microsoft Bot Framework application. The application consumes structured NoSQL data that must be stored in the cloud. You implement Azure Blob storage for the application. You want access to the blob store to be controlled by using a role. You implement Shared Key authorization on the storage account. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

A data scientist deploys a deep learning model on an Fsv2 virtual machine. Data analysis is slow. You need to recommend which virtual machine series the data scientist must use to ensure that data analysis occurs as quickly as possible. Which series should you recommend?

A. ND

B. B

C. DC

D. Ev3

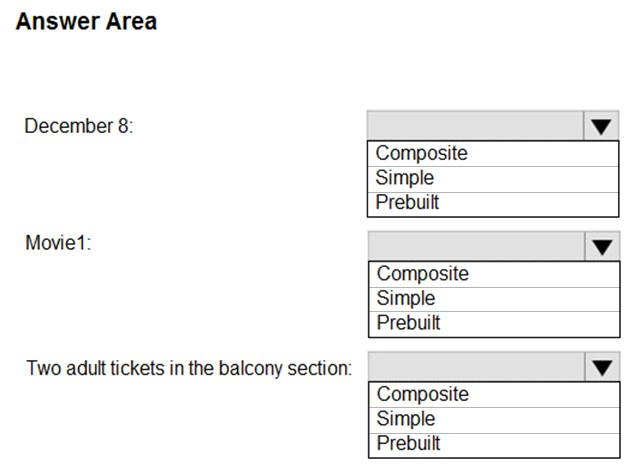

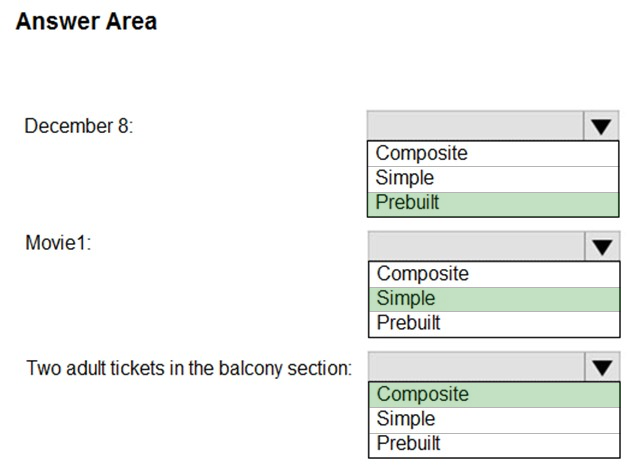

HOTSPOT - Your company is building a cinema chatbot by using the Bot Framework and Language Understanding (LUIS). You are designing the intents and the entities for LUIS. The following are utterances that customers might provide: ✑ Which movies are playing on December 8? ✑ What time is the performance of Movie1? ✑ I would like to purchase two adult tickets in the balcony section for Movie2. You need to identify which entity types to use. The solution must minimize development effort. Which entity type should you use for each entity? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You design an AI workflow that combines data from multiple data sources for analysis. The data sources are composed of: ✑ JSON files uploaded to an Azure Storage account ✑ On-premises Oracle databases ✑ Azure SQL databases Which service should you use to ingest the data?

A. Azure Data Factory

B. Azure SQL Data Warehouse

C. Azure Data Lake Storage

D. Azure Databricks

You plan to design a bot that will be hosted by using Azure Bot Service. Your company identifies the following compliance requirements for the bot: ✑ Payment Card Industry Data Security Standards (PCI DSS) ✑ General Data Protection Regulation (GDPR) ✑ ISO 27001 You need to identify which compliance requirements are met by hosting the bot in the bot service. What should you identify?

A. PCI DSS only

B. PCI DSS, ISO 27001, and GDPR

C. ISO 27001 only

D. GDPR only

Your company is developing an AI solution that will identify inappropriate text in multiple languages. You need to implement a Cognitive Services API that meets this requirement. You use Language Understanding (LUIS) to identify inappropriate text. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

Your company has a data team of Scala and R experts. You plan to ingest data from multiple Apache Kafka streams. You need to recommend a processing technology to broker messages at scale from Kafka streams to Azure Storage. What should you recommend?

A. Azure Databricks

B. Azure Functions

C. Azure HDInsight with Apache Storm

D. Azure HDInsight with Microsoft Machine Learning Server

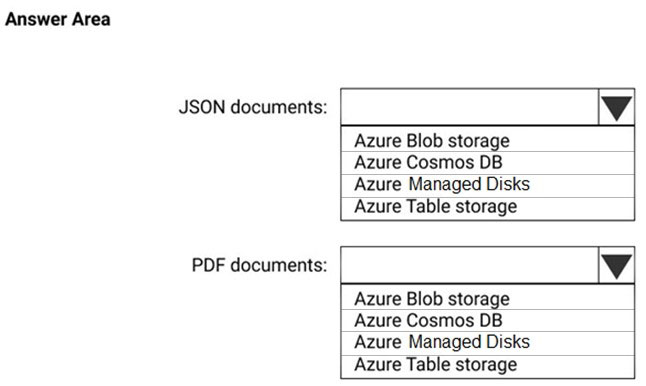

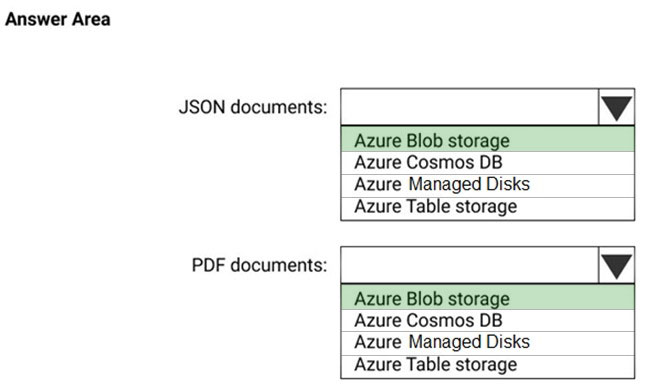

HOTSPOT - You need to build a sentiment analysis solution that will use input data from JSON documents and PDF documents. The JSON documents must be processed in batches and aggregated. Which storage type should you use for each file type? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You are designing an AI solution in Azure that will perform image classification. You need to identify which processing platform will provide you with the ability to update the logic over time. The solution must have the lowest latency for inferencing without having to batch. Which compute target should you identify?

A. graphics processing units (GPUs)

B. field-programmable gate arrays (FPGAs)

C. central processing units (CPUs)

D. application-specific integrated circuits (ASICs)

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You have Azure IoT Edge devices that generate streaming data. On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream. Solution: You deploy an Azure Machine Learning model as an IoT Edge module. Does this meet the goal?

A. Yes

B. No

You have a database that contains sales data. You plan to process the sales data by using two data streams named Stream1 and Stream2. Stream1 will be used for purchase order data. Stream2 will be used for reference data. The reference data is stored in CSV files. You need to recommend an ingestion solution for each data stream. What two solutions should you recommend? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

A. an Azure event hub for Stream1 and Azure Blob storage for Stream2

B. Azure Blob storage for Stream1 and Stream2

C. an Azure event hub for Stream1 and Stream2

D. Azure Blob storage for Stream1 and Azure Cosmos DB for Stream2

E. Azure Cosmos DB for Stream1 and an Azure event hub for Stream2

Your company has 1,000 AI developers who are responsible for provisioning environments in Azure. You need to control the type, size, and location of the resources that the developers can provision. What should you use?

A. Azure Key Vault

B. Azure service principals

C. Azure managed identities

D. Azure Security Center

E. Azure Policy

You have thousands of images that contain text. You need to process the text from the images to a machine-readable character stream. Which Azure Cognitive Services service should you use?

A. Image Moderation API

B. Text Analytics

C. Translator Text

D. Computer Vision

You design an AI solution that uses an Azure Stream Analytics job to process data from an Azure IoT hub. The IoT hub receives time series data from thousands of IoT devices at a factory. The job outputs millions of messages per second. Different applications consume the messages as they are available. The messages must be purged. You need to choose an output type for the job. What is the best output type to achieve the goal? More than one answer choice may achieve the goal.

A. Azure Event Hubs

B. Azure SQL Database

C. Azure Blob storage

D. Azure Cosmos DB

You need to design the Butler chatbot solution to meet the technical requirements. What is the best channel and pricing tier to use? More than one answer choice may achieve the goal. Select the BEST answer.

A. Standard channels that use the S1 pricing tier

B. Standard channels that use the Free pricing tier

C. Premium channels that use the Free pricing tier

D. Premium channels that use the S1 pricing tier

You have Azure IoT Edge devices that generate measurement data from temperature sensors. The data changes very slowly. You need to analyze the data in a temporal two-minute window. If the temperature rises five degrees above a limit, an alert must be raised. The solution must minimize the development of custom code. What should you use?

A. a Machine Learning model as a web service

B. an Azure Machine Learning model as an IoT Edge module

C. Azure Stream Analytics as an IoT Edge module

D. Azure Functions as an IoT Edge module

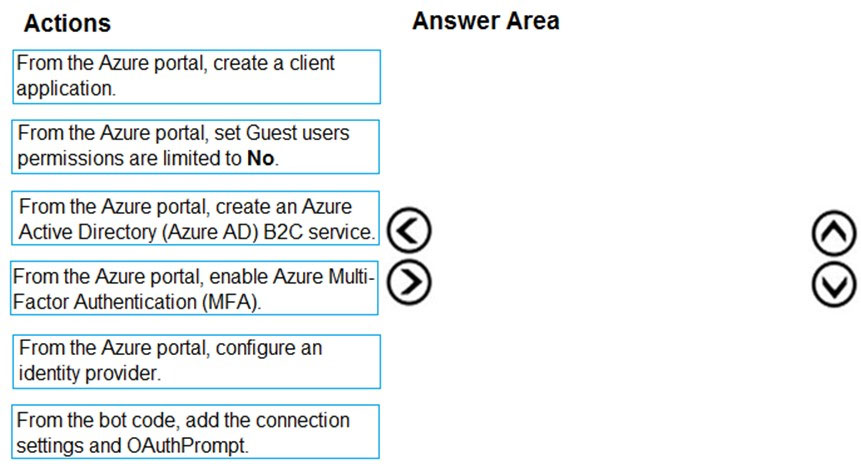

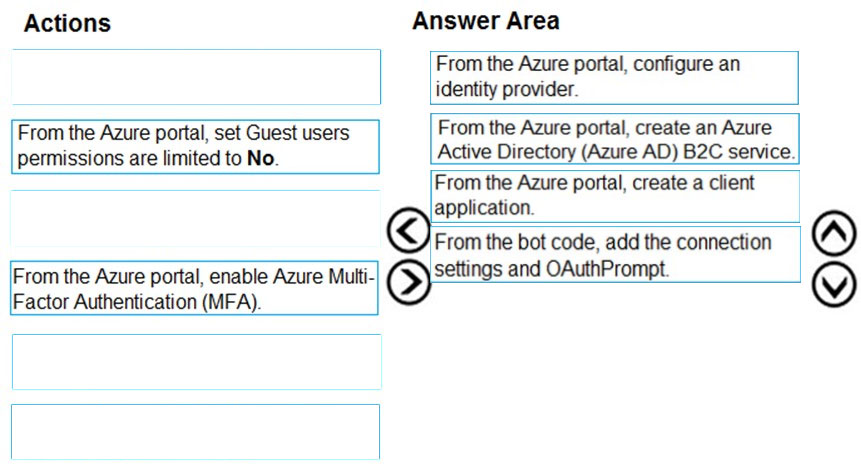

DRAG DROP - You need to create a bot to meet the following requirements: ✑ The bot must support multiple bot channels including Direct Line. ✑ Users must be able to sign in to the bot by using a Gmail user account and save activities and preferences. Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select. Select and Place:

We regularly update this page with new practice questions, so be sure to check back frequently.

Good luck with your AI-100 certification journey!