AI-100 Practice Questions Free – 50 Exam-Style Questions to Sharpen Your Skills

Are you preparing for the AI-100 certification exam? Start with our free AI-100 practice questions: a carefully selected set of 50 exam-style questions to help you test your knowledge and identify areas for improvement.

Working through these practice questions helps you:

- Understand the exam structure and question formats

- Discover your strong and weak areas

- Build the confidence you need for test day success

Below, you will find 50 free AI-100 practice questions designed to match the real exam in both difficulty and topic coverage. They’re ideal for self-assessment or final review.

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment. You need to monitor the compute performance of the models. Solution: You enable Model data collection. Does this meet the goal?

A. Yes

B. No

You plan to deploy Azure IoT Edge devices that will each store more than 10,000 images locally and classify the images by using a Custom Vision Service classifier. Each image is approximately 5 MB. You need to ensure that the images persist on the devices for 14 days. What should you use?

A. The device cache

B. Azure Blob storage on the IoT Edge devices

C. Azure Stream Analytics on the IoT Edge devices

D. Microsoft SQL Server on the IoT Edge devices
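
A quick sizing check helps when comparing the storage options: at least 10,000 images of about 5 MB each comes to roughly 50 GB per device before any overhead. The sketch below is only that arithmetic, and it assumes decimal units (1 GB = 1,000 MB).

```python
# Rough per-device storage estimate for the IoT Edge image scenario.
# Figures come from the question; decimal units (1 GB = 1,000 MB) are an assumption.
IMAGE_COUNT = 10_000   # "more than 10,000 images", so treat this as a lower bound
IMAGE_SIZE_MB = 5      # "approximately 5 MB" per image


def min_storage_gb(count: int, size_mb: float) -> float:
    """Return the minimum storage needed, in decimal gigabytes."""
    return count * size_mb / 1_000


if __name__ == "__main__":
    print(f"At least {min_storage_gb(IMAGE_COUNT, IMAGE_SIZE_MB):.0f} GB per device")
```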

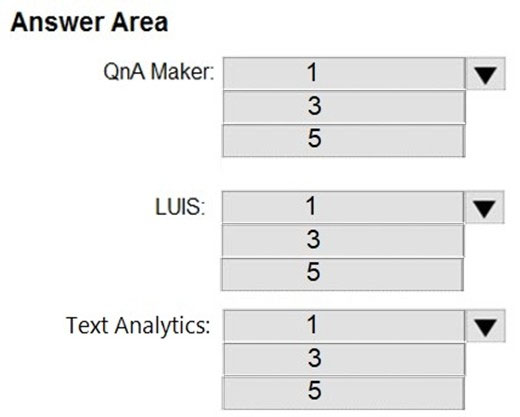

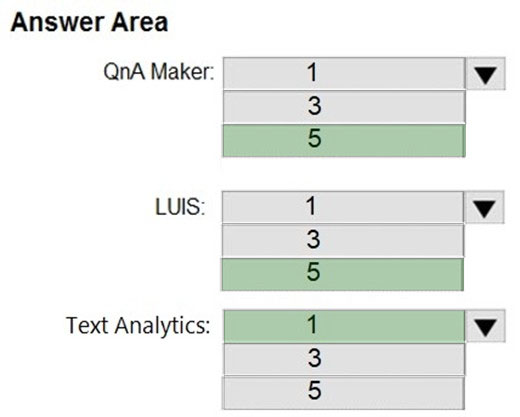

HOTSPOT - You plan to create a bot that will support five languages. The bot will be used by users located in three different countries. The bot will answer common customer questions. The bot will use Language Understanding (LUIS) to identify which skill to use and to detect the language of the customer. You need to identify the minimum number of Azure resources that must be created for the planned bot. How many QnA Maker, LUIS and Text Analytics instances should you create? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

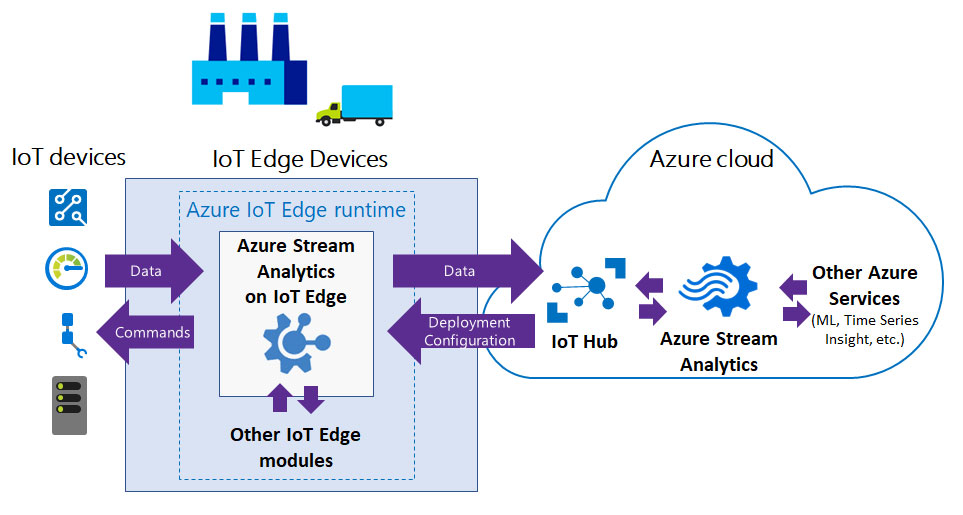

You have Azure IoT Edge devices that generate streaming data. On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream. Solution: You deploy an Azure Machine Learning model as an IoT Edge module. Does this meet the goal?

A. Yes

B. No

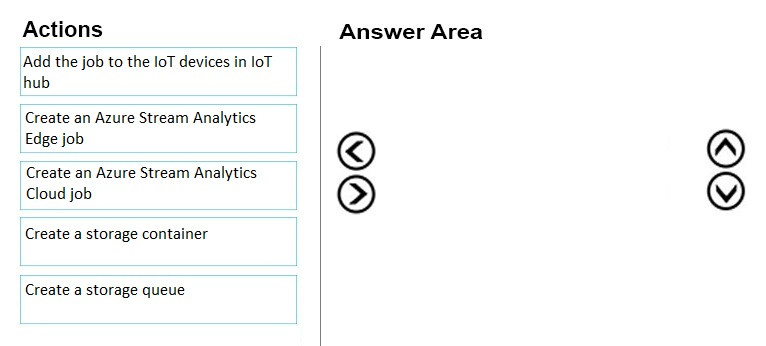

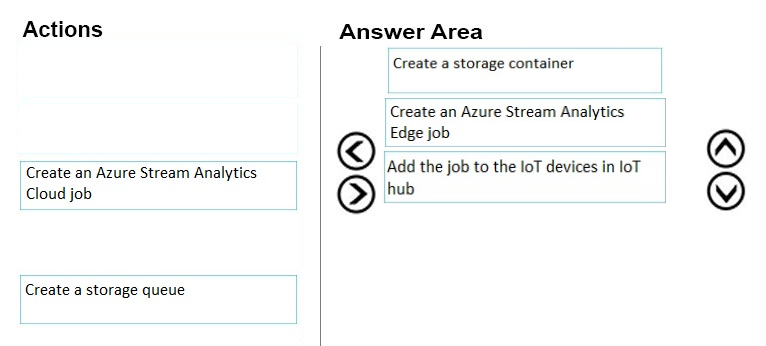

DRAG DROP - You are designing an AI solution that will use IoT devices to gather data from conference attendees, and then later analyze the data. The IoT devices will connect to an Azure IoT hub. You need to design a solution to anonymize the data before the data is sent to the IoT hub. Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. Select and Place:

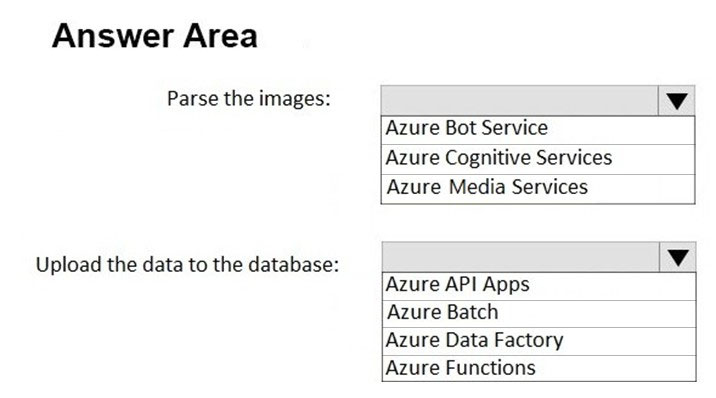

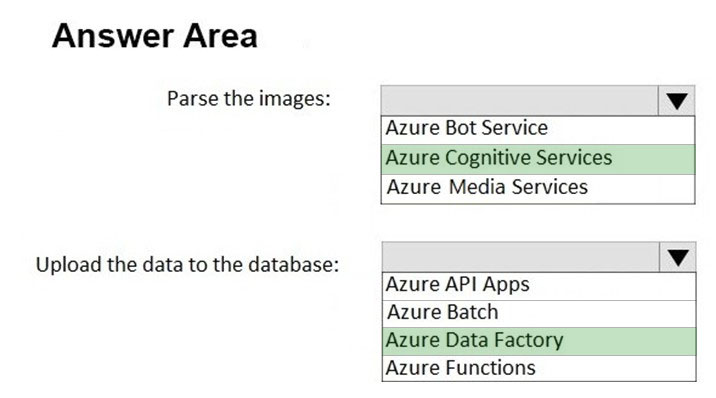

HOTSPOT - You are designing an application to parse images of business forms and upload the data to a database. The upload process will occur once a week. You need to recommend which services to use for the application. The solution must minimize infrastructure costs. Which services should you recommend? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You are deploying an Azure Machine Learning model to an Azure Kubernetes Service (AKS) container. You need to monitor the scoring accuracy of each run of the model. Solution: You modify the scoring file. Does this meet the goal?

A. Yes

B. No

You plan to implement a new data warehouse for a planned AI solution. You have the following information regarding the data warehouse: ✑ The data files will be available in one week. ✑ Most queries that will be executed against the data warehouse will be ad-hoc queries. ✑ The schemas of data files that will be loaded to the data warehouse will change often. ✑ One month after the planned implementation, the data warehouse will contain 15 TB of data. You need to recommend a database solution to support the planned implementation. What two solutions should you include in the recommendation? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

A. Apache Hadoop

B. Apache Spark

C. A Microsoft Azure SQL database

D. An Azure virtual machine that runs Microsoft SQL Server

You have Azure IoT Edge devices that collect measurements every 30 seconds. You plan to send the measurements to an Azure IoT hub. You need to ensure that every event is processed as quickly as possible. What should you use?

A. Apache Kafka

B. Azure Stream Analytics record functions

C. Azure Stream Analytics windowing functions

D. Azure Machine Learning on the IoT Edge devices
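
To picture the difference between handling each event on arrival and aggregating over a window, the sketch below groups timestamped readings into fixed, non-overlapping (tumbling) windows, the same idea behind Stream Analytics' tumbling windows. It is a local Python illustration with hypothetical readings, not Stream Analytics query code.

```python
from collections import defaultdict


def tumbling_window_averages(events, window_seconds):
    """Group (timestamp_seconds, value) pairs into fixed, non-overlapping
    windows and return {window_start: average_value}, ordered by window start."""
    buckets = defaultdict(list)
    for ts, value in events:
        # Every event belongs to exactly one window in a tumbling scheme.
        window_start = (ts // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return {start: sum(vals) / len(vals) for start, vals in sorted(buckets.items())}


# Hypothetical readings taken every 30 seconds, as in the question.
readings = [(0, 20.0), (30, 22.0), (60, 21.0), (90, 25.0), (120, 24.0)]
print(tumbling_window_averages(readings, 60))
```

Note that a windowed result can only be produced once its window has closed, whereas per-event processing can act on each reading as soon as it arrives.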

You need to meet the greeting requirements for Butler. Which type of card should you use?

A. AdaptiveCard

B. SigninCard

C. CardCarousel

D. HeroCard

You are designing an AI application that will perform real-time processing by using Microsoft Azure Stream Analytics. You need to identify the valid outputs of a Stream Analytics job. What are three possible outputs? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. A Hive table in Azure HDInsight

B. Azure SQL Database

C. Azure Cosmos DB

D. Azure Blob storage

E. Azure Redis Cache

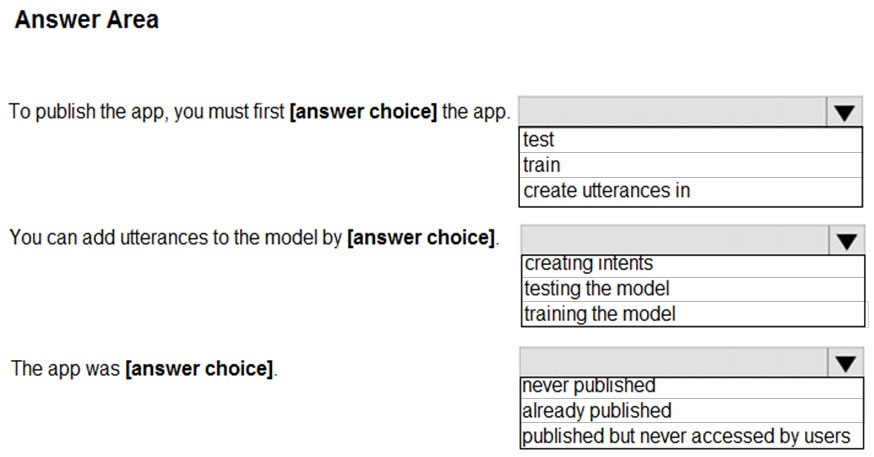

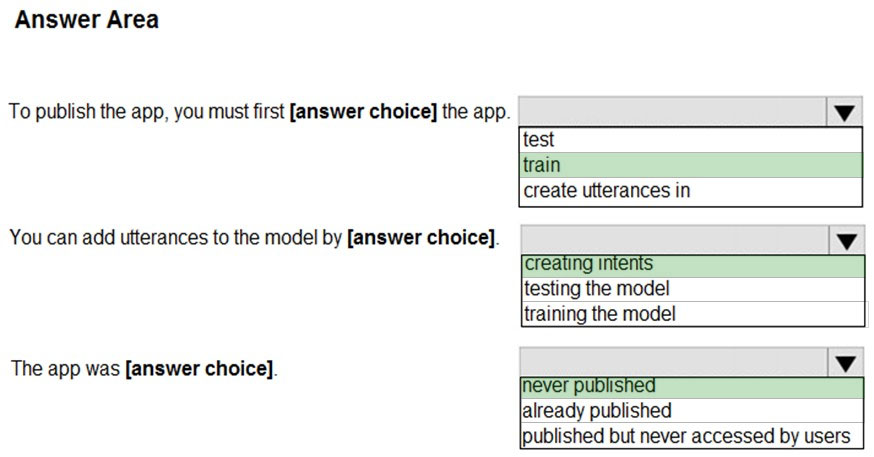

HOTSPOT - You have an app that uses the Language Understanding (LUIS) API as shown in the following exhibit. Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic. NOTE: Each correct selection is worth one point. Hot Area:

You need to evaluate trends in fuel prices during a period of 10 years. The solution must identify unusual fluctuations in prices and produce visual representations. Which Azure Cognitive Services API should you use?

A. Anomaly Detector

B. Computer Vision

C. Text Analytics

D. Bing Autosuggest
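
Anomaly detection on a time series can be pictured with a simple rule: flag a point that sits far from the mean of the points just before it. The sketch below applies a trailing-window z-score to hypothetical monthly prices. It only illustrates the idea; it is not the algorithm the Anomaly Detector service uses, and it does not call the service.

```python
from statistics import mean, stdev


def flag_anomalies(series, window=5, threshold=3.0):
    """Return indexes whose value deviates from the mean of the preceding
    `window` points by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat history (sigma == 0) to avoid flagging every tiny change.
        if sigma > 0 and abs(series[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Hypothetical monthly fuel prices with one sudden spike at index 7.
prices = [3.00, 3.05, 2.98, 3.02, 3.01, 3.04, 2.99, 4.50, 3.03, 3.00]
print(flag_anomalies(prices))
```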

You have a database that contains sales data. You plan to process the sales data by using two data streams named Stream1 and Stream2. Stream1 will be used for purchase order data. Stream2 will be used for reference data. The reference data is stored in CSV files. You need to recommend an ingestion solution for each data stream. What two solutions should you recommend? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

A. an Azure event hub for Stream1 and Azure Blob storage for Stream2

B. Azure Blob storage for Stream1 and Stream2

C. an Azure event hub for Stream1 and Stream2

D. Azure Blob storage for Stream1 and Azure Cosmos DB for Stream2

E. Azure Cosmos DB for Stream1 and an Azure event hub for Stream2
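
In Stream Analytics, reference data (such as the CSV files for Stream2) acts as a slowly changing lookup table that is joined to the fast-moving event stream. The sketch below mimics that join locally, in the style of an inner join: it loads hypothetical reference rows from CSV text and enriches each purchase-order event. All field names (`product_id`, `unit_price`, and so on) are invented for illustration.

```python
import csv
import io

# Hypothetical reference data (Stream2) as it might appear in a CSV file.
REFERENCE_CSV = """product_id,name,unit_price
P1,Widget,2.50
P2,Gadget,10.00
"""


def load_reference(csv_text):
    """Build a lookup table keyed by product_id from CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["product_id"]: row for row in reader}


def enrich_orders(orders, reference):
    """Join each purchase-order event (Stream1) with its reference row.
    Orders whose product_id has no reference row are skipped (inner-join style)."""
    for order in orders:
        ref = reference.get(order["product_id"])
        if ref is None:
            continue
        yield {
            "order_id": order["order_id"],
            "product": ref["name"],
            "total": order["quantity"] * float(ref["unit_price"]),
        }


orders = [
    {"order_id": 1, "product_id": "P1", "quantity": 4},
    {"order_id": 2, "product_id": "P3", "quantity": 1},
    {"order_id": 3, "product_id": "P2", "quantity": 2},
]
print(list(enrich_orders(orders, load_reference(REFERENCE_CSV))))
```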

Your company's developers have created an Azure Data Factory pipeline that moves data from an on-premises server to Azure Storage. The pipeline consumes Azure Cognitive Services APIs. You need to deploy the pipeline. Your solution must minimize custom code. You use Integration Runtime to move data to the cloud and Azure API Management to consume Cognitive Services APIs. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

The development team at your company builds a bot by using C# and .NET. You need to deploy the bot to Azure. Which tool should you use?

A. the .NET Core CLI

B. the Azure CLI

C. the Git CLI

D. the AzCopy tool

You are developing a Microsoft Bot Framework application. The application consumes structured NoSQL data that must be stored in the cloud. You implement Azure Blob storage for the application. You want access to the blob store to be controlled by using a role. You implement on-premises Active Directory Domain Services (AD DS). Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

You create an Azure Cognitive Services resource. A developer needs to be able to retrieve the keys used by the resource. The solution must use the principle of least privilege. What is the best role to assign to the developer?

A. Security Manager

B. Security Reader

C. Cognitive Services Contributor

D. Cognitive Services User

You have an Azure SQL database, an Azure Data Lake Storage Gen 2 account, and an API developed by using Azure Machine Learning Studio. You need to ingest data once daily from the database, score each row by using the API, and write the data to the storage account. Solution: You create an Azure Data Factory pipeline that contains the Machine Learning Batch Execution activity. Does this meet the goal?

A. Yes

B. No

You are designing a business application that will use Azure Cognitive Services to parse images of business forms. You have the following requirements: Parsed image data must be uploaded to Azure Storage once a week. The solution must minimize infrastructure costs. What should you do?

A. Use Azure API Apps to upload the data.

B. Use Azure Bot Service to upload the data.

C. Use Azure Data Factory (ADF) to upload the data.

D. Use Azure Machine Learning to upload the data.

You have an Azure Machine Learning experiment. You need to validate that the experiment meets GDPR regulation requirements and stores documentation about the experiment. What should you use?

A. Compliance Manager

B. an Azure Log Analytics workspace

C. Azure Table storage

D. Azure Security Center

Your company creates a popular mobile game. The company tracks usage patterns of the game. You need to provide special offers to users when there is a significant change in the usage patterns. Which Azure Cognitive Services service should you use?

A. Form Recognizer

B. Bing Autosuggest

C. Text Analytics

D. Anomaly Detector

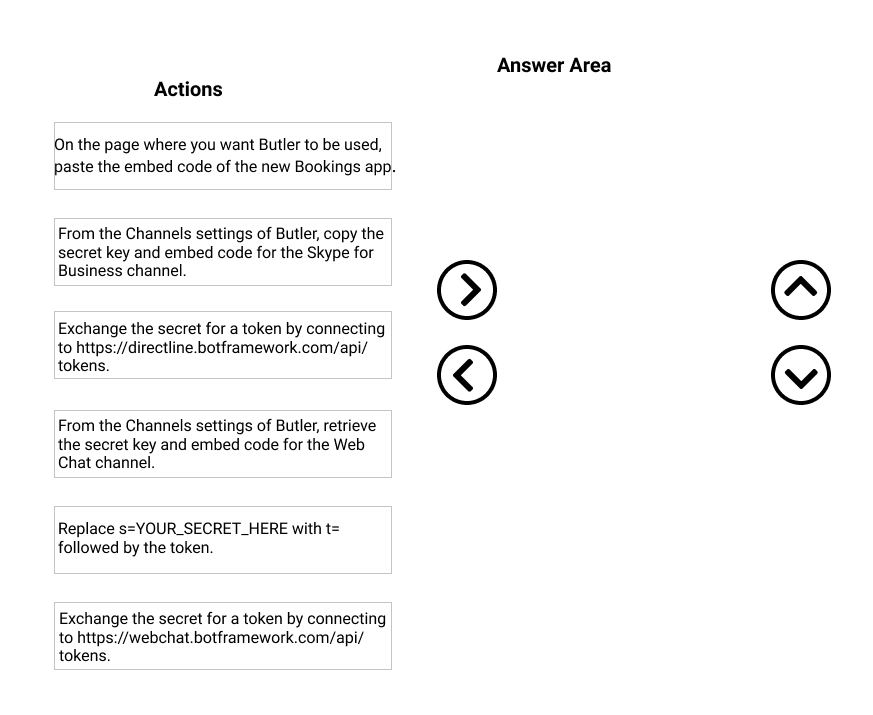

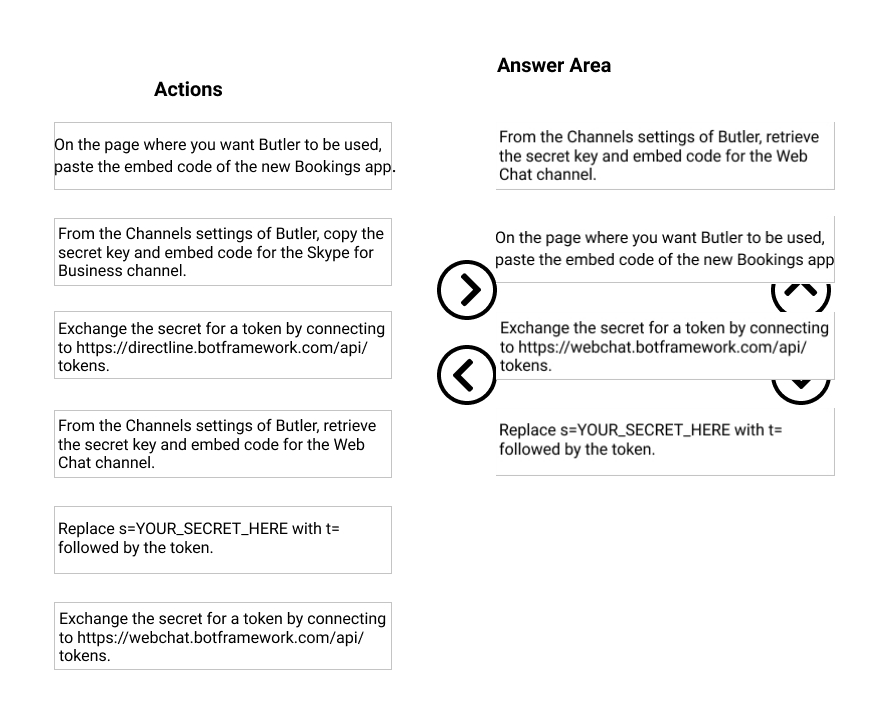

DRAG DROP - You need to integrate the new Bookings app and the Butler chatbot. Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. Select and Place:

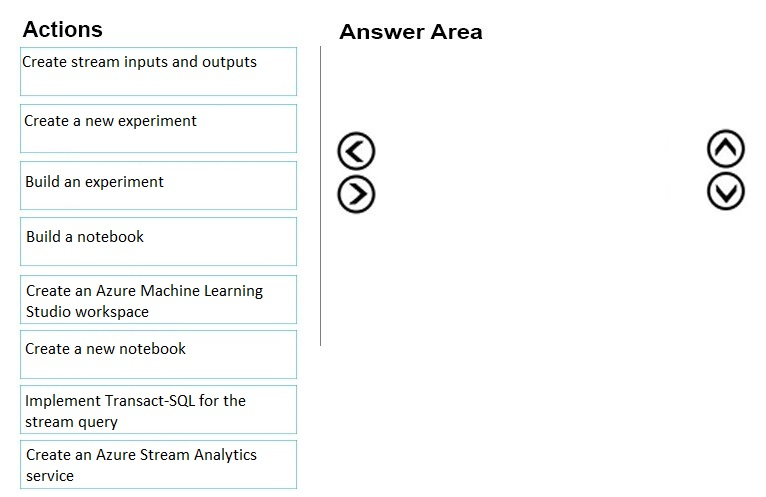

DRAG DROP - You need to build an AI solution that will be shared between several developers and customers. You plan to write code, host code, and document the runtime all within a single user experience. You build the environment to host the solution. Which three actions should you perform in sequence next? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. Select and Place:

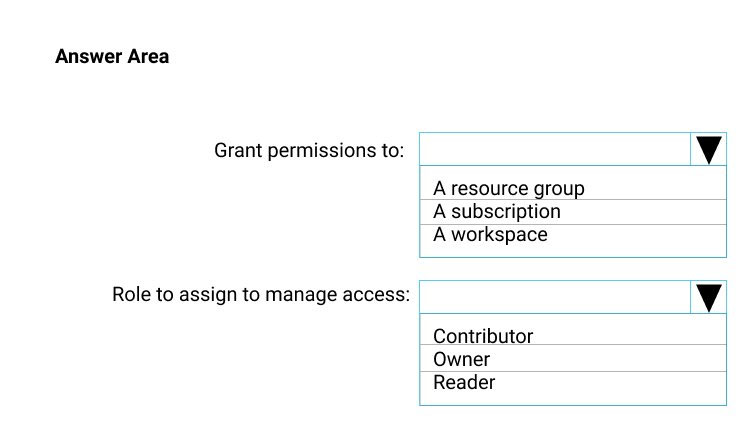

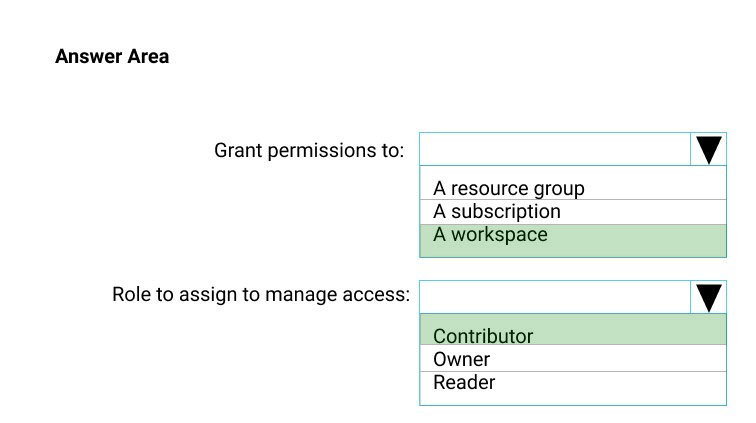

HOTSPOT - You need to configure security for an Azure Machine Learning service used by groups of data scientists. The groups must have access to only their own experiments and must be able to grant permissions to the members of their team. What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You are designing an AI solution that will analyze millions of pictures by using an Azure HDInsight Hadoop cluster. You need to recommend a solution for storing the pictures. The solution must minimize costs. Which storage solution should you recommend?

A. Azure Table storage

B. Azure File Storage

C. Azure Data Lake Storage Gen2

D. Azure Databricks File System

You plan to deploy a bot that will use the following Azure Cognitive Services: ✑ Language Understanding (LUIS) ✑ Text Analytics Your company's compliance policy states that all data used by the bot must be stored in the on-premises network. You need to recommend a compute solution to support the planned bot. What should you include in the recommendation?

A. an Azure Databricks cluster

B. a Docker container

C. Microsoft Machine Learning Server

D. the Azure Machine Learning service

You need to design an application that will analyze real-time data from financial feeds. The data will be ingested into Azure IoT Hub. The data must be processed as quickly as possible in the order in which it is ingested. Which service should you include in the design?

A. Azure Data Factory

B. Azure Queue storage

C. Azure Stream Analytics

D. Azure Notification Hubs

E. Apache Kafka

F. Azure Event Hubs

You plan to deploy Azure IoT Edge devices. Each device will store more than 10,000 images locally. Each image is approximately 5 MB. You need to ensure that the images persist on the devices for 14 days. What should you use?

A. Azure Stream Analytics on the IoT Edge devices

B. Azure Database for PostgreSQL

C. Azure Blob storage on the IoT Edge devices

D. Microsoft SQL Server on the IoT Edge devices

You design an AI workflow that combines data from multiple data sources for analysis. The data sources are composed of: ✑ JSON files uploaded to an Azure Storage account ✑ On-premises Oracle databases ✑ Azure SQL databases Which service should you use to ingest the data?

A. Azure Data Factory

B. Azure SQL Data Warehouse

C. Azure Data Lake Storage

D. Azure Databricks

You have an app named App1 that uses the Face API. App1 contains several PersonGroup objects. You discover that a PersonGroup object for an individual named Ben Smith cannot accept additional entries. The PersonGroup object for Ben Smith contains 10,000 entries. You need to ensure that additional entries can be added to the PersonGroup object for Ben Smith. The solution must ensure that Ben Smith can be identified by all the entries. Solution: You modify the custom time interval for the training phase of App1. Does this meet the goal?

A. Yes

B. No

Your company plans to implement an AI solution that will analyze data from IoT devices. Data from the devices will be analyzed in real time. The results of the analysis will be stored in a SQL database. You need to recommend a data processing solution that uses the Transact-SQL language. Which data processing solution should you recommend?

A. Azure Stream Analytics

B. SQL Server Integration Services (SSIS)

C. Azure Event Hubs

D. Azure Machine Learning

A data scientist deploys a deep learning model on an Fsv2 virtual machine. Data analysis is slow. You need to recommend which virtual machine series the data scientist must use to ensure that data analysis occurs as quickly as possible. Which series should you recommend?

A. ND

B. B

C. DC

D. Ev3

You have an app that records meetings by using speech-to-text capabilities from the Speech Services API. You discover that when action items are listed at the end of each meeting, the app transcribes the text inaccurately when industry terms are used. You need to improve the accuracy of the meeting records. What should you do?

A. Add a phrase list

B. Create a custom wake word

C. Parse the text by using the Language Understanding (LUIS) API

D. Train a custom model by using Custom Translator

Your company plans to develop a mobile app to provide meeting transcripts by using speech-to-text. Audio from the meetings will be streamed to provide real-time transcription. You need to recommend which task each meeting participant must perform to ensure that the transcripts of the meetings can identify all participants. Which task should you recommend?

A. Record the meeting as an MP4.

B. Create a voice signature.

C. Sign up for Azure Speech Services.

D. Sign up as a guest in Azure Active Directory (Azure AD)

Your company's developers have created an Azure Data Factory pipeline that moves data from an on-premises server to Azure Storage. The pipeline consumes Azure Cognitive Services APIs. You need to deploy the pipeline. Your solution must minimize custom code. You use Self-hosted Integration Runtime to move data to the cloud and Azure Logic Apps to consume Cognitive Services APIs. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

You are developing a Microsoft Bot Framework application. The application consumes structured NoSQL data that must be stored in the cloud. You implement Azure Blob storage for the application. You want access to the blob store to be controlled by using a role. You implement Azure Active Directory (Azure AD) integration on the storage account. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

Your company is developing an AI solution that will identify inappropriate text in multiple languages. You need to implement a Cognitive Services API that meets this requirement. You use the Azure Content Moderator API to identify inappropriate text. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

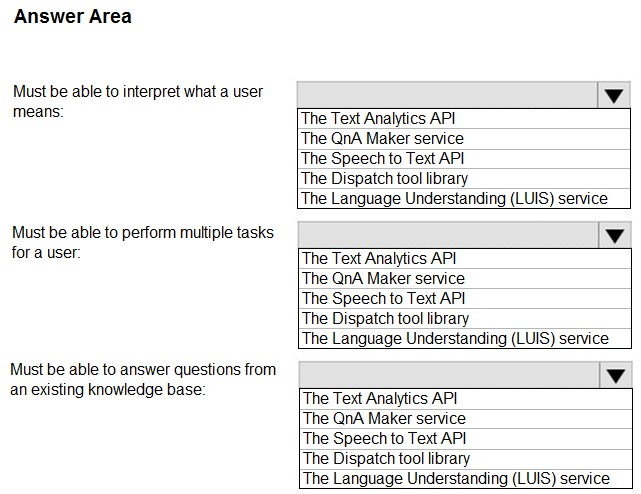

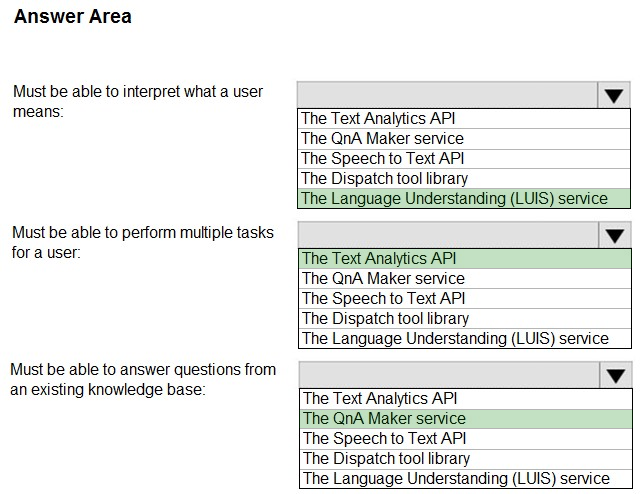

HOTSPOT - You plan to create an intelligent bot to handle internal user chats to the help desk of your company. The bot has the following requirements: ✑ Must be able to interpret what a user means. ✑ Must be able to perform multiple tasks for a user. ✑ Must be able to answer questions from an existing knowledge base. You need to recommend which solutions meet the requirements. Which solution should you recommend for each requirement? To answer, drag the appropriate solutions to the correct requirements. Each solution may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Hot Area:

You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment. You need to monitor the compute performance of the models. Solution: You create environment files. Does this meet the goal?

A. Yes

B. No

You have deployed several Azure IoT Edge devices for an AI solution. The Azure IoT Edge devices generate measurement data from temperature sensors. You need a solution to process the sensor data. Your solution must be able to write configuration changes back to the devices. You make use of Microsoft Azure IoT Hub. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

You are designing a solution that will integrate the Bing Web Search API and will return a JSON response. The development team at your company uses C# as its primary development language. You provide developers with the Bing endpoint. Which additional component do the developers need to prepare and to retrieve data by using an API call?

A. the subscription ID

B. the API key

C. a query

D. the resource group ID
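
For reference, a Bing Web Search call combines the endpoint, a query string, and a key sent in the `Ocp-Apim-Subscription-Key` header. The Python sketch below only builds the request and does not send it; the endpoint URL and key are placeholders to be replaced with the values from your own Bing resource. The team in the question uses C#, but the shape of the request is the same.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Placeholder values: replace with the endpoint and key from your own Bing resource.
ENDPOINT = "https://api.bing.microsoft.com/v7.0/search"
API_KEY = "<your-api-key>"


def build_search_request(query, endpoint=ENDPOINT, key=API_KEY):
    """Return an unsent GET request for the Bing Web Search API."""
    url = f"{endpoint}?{urlencode({'q': query})}"
    return Request(url, headers={"Ocp-Apim-Subscription-Key": key})


req = build_search_request("azure cognitive services")
print(req.full_url)
# urllib.request.urlopen(req) would send it; the response body is JSON.
```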

Your company has 1,000 AI developers who are responsible for provisioning environments in Azure. You need to control the type, size, and location of the resources that the developers can provision. What should you use?

A. Azure Key Vault

B. Azure service principals

C. Azure managed identities

D. Azure Security Center

E. Azure Policy

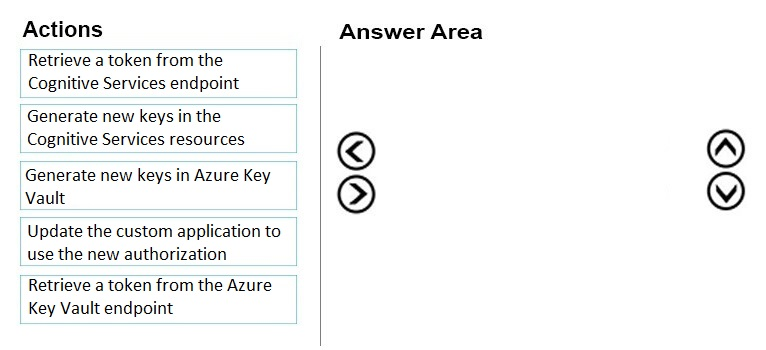

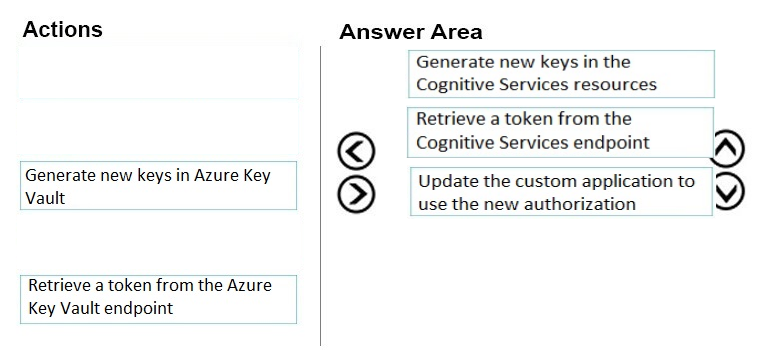

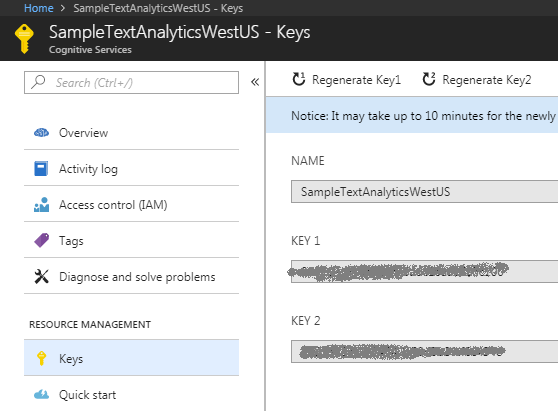

DRAG DROP - You develop a custom application that uses a token to connect to Azure Cognitive Services resources. A new security policy requires that all access keys are changed every 30 days. You need to recommend a solution to implement the security policy. Which three actions should you recommend be performed every 30 days? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. Select and Place:

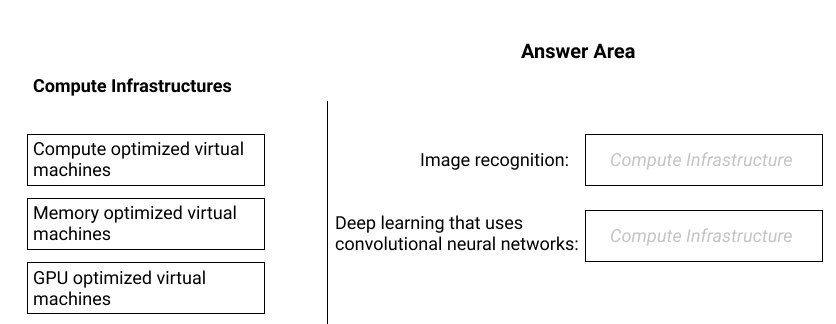

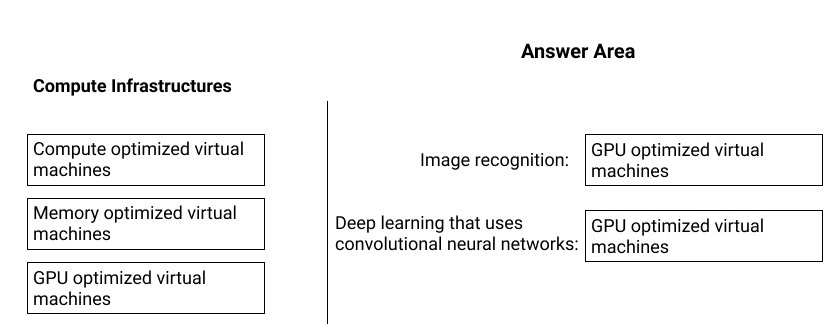

DRAG DROP - You are designing an Azure Batch AI solution that will be used to train many different Azure Machine Learning models. The solution will perform the following: ✑ Image recognition ✑ Deep learning that uses convolutional neural networks. You need to select a compute infrastructure for each model. The solution must minimize the processing time. What should you use for each model? To answer, drag the appropriate compute infrastructures to the correct models. Each compute infrastructure may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Select and Place:

You need to build a solution to monitor Twitter. The solution must meet the following requirements: ✑ Send an email message to the marketing department when negative Twitter messages are detected. ✑ Run sentiment analysis on Twitter messages that mention specific tags. ✑ Use the least amount of custom code possible. Which two services should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. Azure Databricks

B. Azure Stream Analytics

C. Azure Functions

D. Azure Cognitive Services

E. Azure Logic Apps

You are developing an application that uses an Azure Kubernetes Service (AKS) cluster. You are troubleshooting a node issue. You need to connect to an AKS node by using SSH. Solution: You change the permissions of the AKS resource group, and then you create an SSH connection. Does this meet the goal?

A. Yes

B. No

You have deployed 1,000 sensors for an AI application that you are developing. The sensors generate large amounts of data that is ingested on an hourly basis. You want your application to analyze the data generated by the sensors in real time. Which of the following actions should you take?

A. Make use of Azure Kubernetes Service (AKS)

B. Make use of Azure Cosmos DB

C. Make use of an Azure HDInsight Hadoop cluster

D. Make use of Azure Data Factory

You have a Face API solution that updates in real time. A pilot of the solution runs successfully on a small dataset. When you attempt to use the solution on a larger dataset that continually changes, the performance degrades and it takes longer to recognize existing faces. You need to recommend changes to reduce the time it takes to recognize existing faces without increasing costs. What should you recommend?

A. Change the solution to use the Computer Vision API instead of the Face API.

B. Separate training into an independent pipeline and schedule the pipeline to run daily.

C. Change the solution to use the Bing Image Search API instead of the Face API.

D. Distribute the face recognition inference process across many Azure Cognitive Services instances.

You are developing a bot for an ecommerce application. The bot will support five languages. The bot will use Language Understanding (LUIS) to detect the language of the customer, and QnA Maker to answer common customer questions. LUIS supports all the languages. You need to determine the minimum number of Azure resources that you must create for the bot. You create one instance of QnA Maker and five instances of Language Understanding (LUIS). Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

Free Access to the Full AI-100 Practice Question Bank

Want more hands-on practice? Work through the full bank of free AI-100 practice questions to reinforce your understanding of all exam objectives.

We update our question sets regularly, so check back often for new and relevant content.

Good luck with your AI-100 certification journey!