AI-100 Dump Free – 50 Practice Questions to Sharpen Your Exam Readiness.

Looking for a reliable way to prepare for your AI-100 certification? Our AI-100 Dump Free includes 50 exam-style practice questions designed to reflect real test scenarios—helping you study smarter and pass with confidence.

Using an AI-100 dump free set of questions can give you an edge in your exam prep by helping you:

- Understand the format and types of questions you’ll face

- Pinpoint weak areas and focus your study efforts

- Boost your confidence with realistic question practice

Below, you will find 50 free questions from our AI-100 Dump Free collection. These cover key topics and are structured to simulate the difficulty level of the real exam, making them a valuable tool for review or final prep.

You are developing a Microsoft Bot Framework application. The application consumes structured NoSQL data that must be stored in the cloud. You implement Azure Blob storage for the application. You want access to the blob store to be controlled by using a role. You implement Azure Active Directory (Azure AD) integration on the storage account. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

Your company uses an internal blog to share news with employees. You use the Translator Text API to translate the text in the blog from English to several other languages used by the employees. Several employees report that the translations are often inaccurate. You need to improve the accuracy of the translations. What should you add to the translation solution?

A. Text Analytics

B. Language Understanding (LUIS)

C. Azure Media Services

D. Custom Translator

You are developing a Microsoft Bot Framework application. The application consumes structured NoSQL data that must be stored in the cloud. You implement Azure Blob storage for the application. You want access to the blob store to be controlled by using a role. You implement Shared Access Signatures (SAS) on the storage account. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

You have an existing Language Understanding (LUIS) model for an internal bot. You need to recommend a solution to add a meeting reminder functionality to the bot by using a prebuilt model. The solution must minimize the size of the model. Which component of LUIS should you recommend?

A. domain

B. intents

C. entities

After you answer a question, you will NOT be able to return to it. As a result, these questions will not appear in the review screen. You create several AI models in Azure Machine Learning Studio. You deploy the models to a production environment. You need to monitor the compute performance of the models. Solution: You create environment files. Does this meet the goal?

A. Yes

B. No

You have deployed several Azure IoT Edge devices for an AI solution. The Azure IoT Edge devices generate measurement data from temperature sensors. You need a solution to process the sensor data. Your solution must be able to write configuration changes back to the devices. You make use of Microsoft Azure IoT Hub. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

You have an AI application that uses keys in Azure Key Vault. Recently, a key used by the application was deleted accidentally and was unrecoverable. You need to ensure that if a key is deleted, it is retained in the key vault for 90 days. Which two features should you configure? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

A. The expiration date on the keys

B. Soft delete

C. Purge protection

D. Auditors

E. The activation date on the keys

You are designing an AI application that will perform real-time processing by using Microsoft Azure Stream Analytics. You need to identify the valid outputs of a Stream Analytics job. What are three possible outputs? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A. A Hive table in Azure HDInsight

B. Azure SQL Database

C. Azure Cosmos DB

D. Azure Blob storage

E. Azure Redis Cache

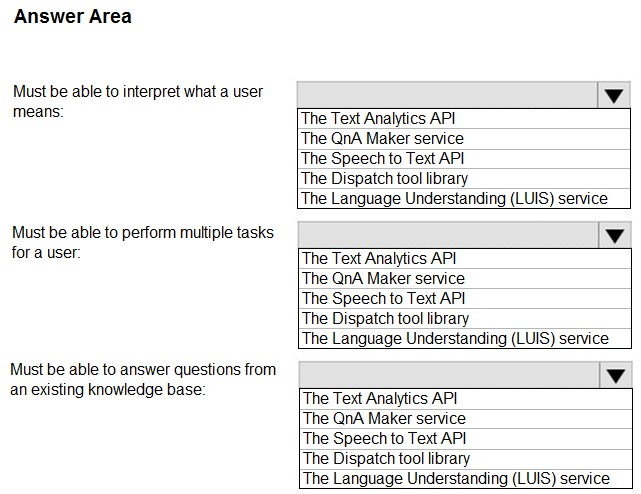

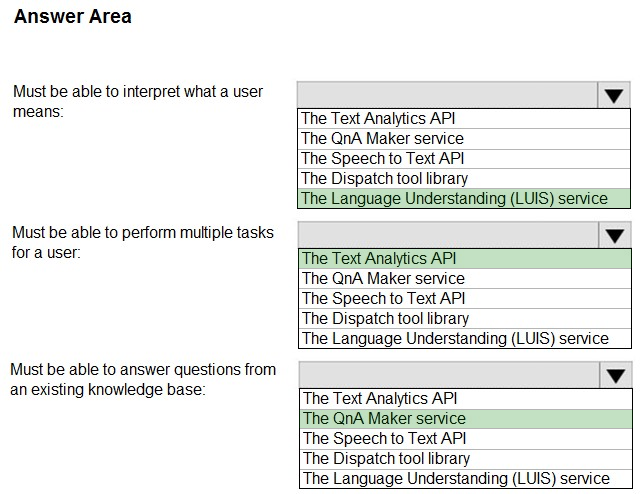

HOTSPOT - You plan to create an intelligent bot to handle internal user chats to the help desk of your company. The bot has the following requirements: ✑ Must be able to interpret what a user means. ✑ Must be able to perform multiple tasks for a user. ✑ Must be able to answer questions from an existing knowledge base. You need to recommend which solutions meet the requirements. Which solution should you recommend for each requirement? To answer, drag the appropriate solutions to the correct requirements. Each solution may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Hot Area:

You are implementing the Language Understanding (LUIS) API and are building a GDPR-compliant bot by using the Bot Framework. You need to recommend a solution to ensure that the implementation of LUIS is GDPR-compliant. What should you include in the recommendation?

A. Enable active learning for the bot.

B. Configure the bot to send the active learning preference of a user.

C. Delete the utterances from Review endpoint utterances.

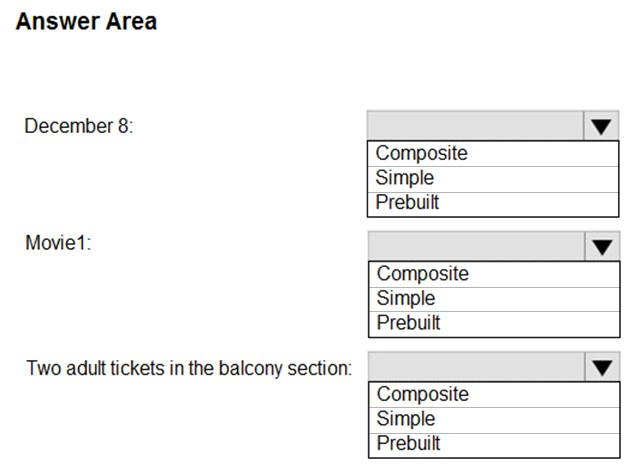

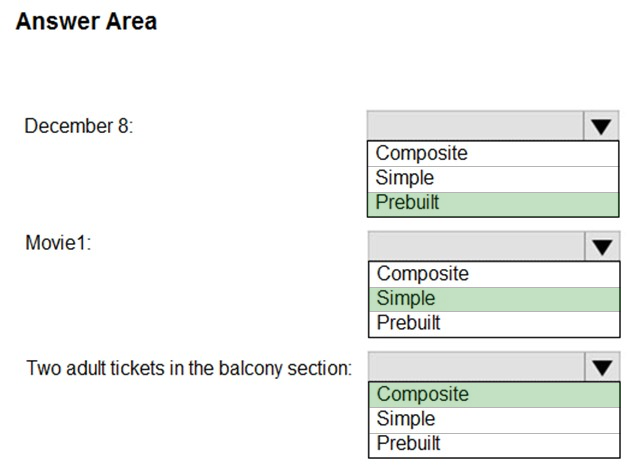

HOTSPOT - Your company is building a cinema chatbot by using the Bot Framework and Language Understanding (LUIS). You are designing the intents and the entities for LUIS. The following are utterances that customers might provide: ✑ Which movies are playing on December 8? ✑ What time is the performance of Movie1? ✑ I would like to purchase two adult tickets in the balcony section for Movie2. You need to identify which entity types to use. The solution must minimize development effort. Which entity type should you use for each entity? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point. Hot Area:

You are developing an app for a conference provider. The app will use speech-to-text to provide transcription at a conference in English. It will also use the Translator Text API to translate the transcripts to the language preferred by the conference attendees. You test the translation features on the app and discover that the translations are fairly poor. You want to improve the quality of the translations. Which of the following actions should you take?

A. Use Text Analytics to perform the translations.

B. Use the Language Understanding (LUIS) API to perform the translations.

C. Perform the translations by training a custom model using Custom Translator.

D. Use the Computer Vision API to perform the translations.

You have an app named App1 that uses the Face API. App1 contains several PersonGroup objects. You discover that a PersonGroup object for an individual named Ben Smith cannot accept additional entries. The PersonGroup object for Ben Smith contains 10,000 entries. You need to ensure that additional entries can be added to the PersonGroup object for Ben Smith. The solution must ensure that Ben Smith can be identified by all the entries. Solution: You modify the custom time interval for the training phase of App1. Does this meet the goal?

A. Yes

B. No

You are using Azure Cognitive Services to create an interactive AI application that will be deployed for a world-wide audience. You want the app to support multiple languages, including English, French, Spanish, Portuguese, and German. Which of the following actions should you take?

A. Make use of Text Analytics.

B. Make use of Content Moderator.

C. Make use of QnA Maker.

D. Make use of Language API.

You are designing a solution that will integrate the Bing Web Search API and will return a JSON response. The development team at your company uses C# as its primary development language. You provide developers with the Bing endpoint. Which additional component do the developers need to prepare and to retrieve data by using an API call?

A. the subscription ID

B. the API key

C. a query

D. the resource group ID

You deploy an application that performs sentiment analysis on the data stored in Azure Cosmos DB. Recently, you loaded a large amount of data to the database. The data was for a customer named Contoso, Ltd. You discover that queries for the Contoso data are slow to complete, and the queries slow the entire application. You need to reduce the amount of time it takes for the queries to complete. The solution must minimize costs. What should you do? More than one answer choice may achieve the goal. (Choose two.)

A. Change the request units.

B. Change the partitioning strategy.

C. Change the transaction isolation level.

D. Migrate the data to the Cosmos DB database.

You have an Azure Machine Learning experiment. You need to validate that the experiment meets GDPR regulation requirements and stores documentation about the experiment. What should you use?

A. Compliance Manager

B. an Azure Log Analytics workspace

C. Azure Table storage

D. Azure Security Center

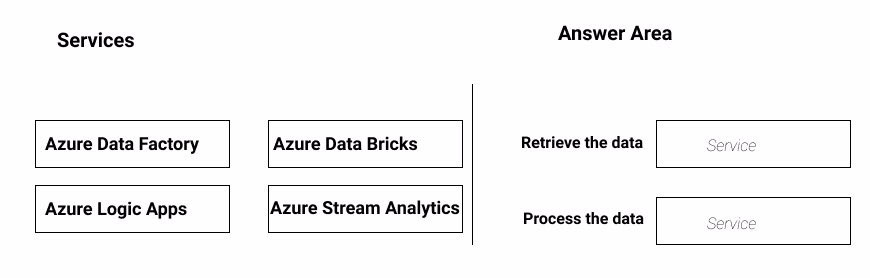

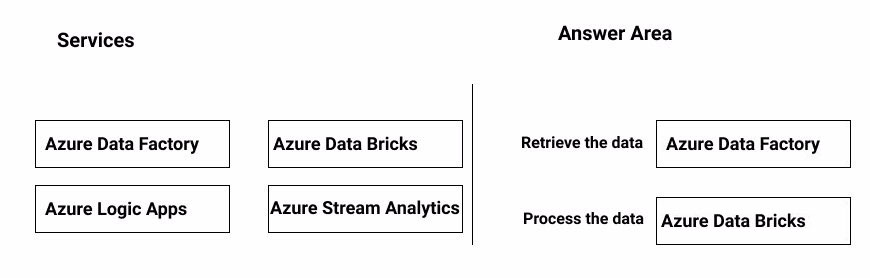

DRAG DROP - You need to design the workflow for an Azure Machine Learning solution. The solution must meet the following requirements: ✑ Retrieve data from file shares, Microsoft SQL Server databases, and Oracle databases that are in an on-premises network. ✑ Use an Apache Spark job to process data stored in an Azure SQL Data Warehouse database. Which service should you use to meet each requirement? To answer, drag the appropriate services to the correct requirements. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Select and Place:

You are designing an AI solution that will provide feedback to teachers who train students over the Internet. The students will be in classrooms located in remote areas. The solution will capture video and audio data of the students in the classrooms. You need to recommend Azure Cognitive Services for the AI solution to meet the following requirements: ✑ Alert teachers if a student's facial expression indicates the student is angry or scared. ✑ Identify each student in the classrooms for attendance purposes. ✑ Allow the teachers to log voice conversations as text. Which Cognitive Services should you recommend?

A. Face API and Text Analytics

B. Computer Vision and Text Analytics

C. QnA Maker and Computer Vision

D. Speech to Text and Face API

You are designing an AI system for your company. Your system will consume several Apache Kafka data streams. You want your system to be able to process the data streams at scale and in real-time. Which of the following actions should you take?

A. Make use of Azure HDInsight with Apache HBase

B. Make use of Azure HDInsight with Apache Spark

C. Make use of Azure HDInsight with Apache Storm

D. Make use of Azure HDInsight with Microsoft Machine Learning Server

You are deploying an Azure Machine Learning model to an Azure Kubernetes Service (AKS) container. You need to monitor the scoring accuracy of each run of the model. Solution: You configure Azure Monitor for containers. Does this meet the goal?

A. Yes

B. No

You have Azure IoT Edge devices that generate streaming data. On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream. Solution: You deploy Azure Stream Analytics as an IoT Edge module. Does this meet the goal?

A. Yes

B. No

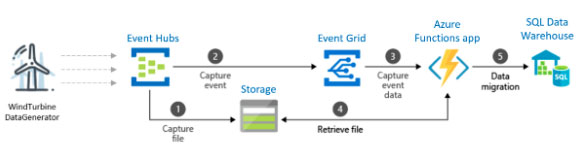

Your company has a data team of Transact-SQL experts. You plan to ingest data from multiple sources into Azure Event Hubs. You need to recommend which technology the data team should use to move and query data from Event Hubs to Azure Storage. The solution must leverage the data team's existing skills. What is the best recommendation to achieve the goal? More than one answer choice may achieve the goal.

A. Azure Notification Hubs

B. Azure Event Grid

C. Apache Kafka streams

D. Azure Stream Analytics

You have a Bing Search service that is used to query a product catalog. You need to identify the following information: ✑ The locale of the query ✑ The top 50 query strings ✑ The number of calls to the service ✑ The top geographical regions of the service What should you implement?

A. Bing Statistics

B. Azure API Management (APIM)

C. Azure Monitor

D. Azure Application Insights

You are developing an app that will perform the following tasks: Evaluate trends in blue chip stock prices over the past decade. Identify unusual fluctuations in stock prices. Produce visual representations of the data. You need to determine which Azure Cognitive Services APIs would be suitable for the app. Which of the following actions should you take?

A. Make use of the Anomaly Detector API

B. Make use of the Computer Vision API

C. Make use of the QnA Maker API

D. Make use of the Custom Vision API

Your company plans to create a mobile app that will be used by employees to query the employee handbook. You need to ensure that the employees can query the handbook by typing or by using speech. Which core component should you use for the app?

A. Language Understanding (LUIS)

B. QnA Maker

C. Text Analytics

D. Azure Search

You have Azure IoT Edge devices that generate measurement data from temperature sensors. The data changes very slowly. You need to analyze the data in a temporal two-minute window. If the temperature rises five degrees above a limit, an alert must be raised. The solution must minimize the development of custom code. What should you use?

A. A Machine Learning model as a web service

B. an Azure Machine Learning model as an IoT Edge module

C. Azure Stream Analytics as an IoT Edge module

D. Azure Functions as an IoT Edge module
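
The two-minute window in the scenario above can be pictured with a minimal sketch in plain Python. It only illustrates tumbling-window logic and is not Stream Analytics or any Azure SDK; the function name `tumbling_window_alerts`, the `(timestamp_seconds, temperature)` reading format, and the sample limit are all assumptions made for the example.

```python
from collections import defaultdict

WINDOW_SECONDS = 120  # two-minute tumbling window, as in the scenario


def tumbling_window_alerts(readings, limit, margin=5.0, window=WINDOW_SECONDS):
    """Return the start times of windows whose peak exceeds limit + margin.

    `readings` is an iterable of (timestamp_seconds, temperature) pairs;
    this input format is hypothetical and chosen only for illustration.
    """
    peaks = defaultdict(lambda: float("-inf"))
    for ts, temp in readings:
        # Bucket each reading into the non-overlapping window that contains it.
        start = int(ts // window) * window
        peaks[start] = max(peaks[start], temp)
    # A window raises an alert when its peak is more than `margin` above the limit.
    return sorted(start for start, peak in peaks.items() if peak > limit + margin)


# Hypothetical sample data: only the window starting at t=120 crosses 21.0 + 5.0.
sample = [(0, 20.0), (60, 21.0), (130, 26.5), (200, 24.0), (250, 20.5)]
alerts = tumbling_window_alerts(sample, limit=21.0)
```

Because the windows do not overlap, each reading contributes to exactly one alert decision, which is the property that distinguishes a tumbling window from a sliding or hopping one.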

You are developing an AI application for your company. The application uses batch processing to analyze data in JSON and PDF documents. You want to store the JSON and PDF documents in Azure. You want to ensure data persistence while keeping costs at a minimum. Which of the following actions should you take?

A. Make use of Azure Blob storage

B. Make use of Azure Cosmos DB

C. Make use of Azure Databricks

D. Make use of Azure Table storage

Your company plans to monitor Twitter hashtags, and then to build a graph of connected people and places that contains the associated sentiment. The monitored hashtags use several languages, but the graph will be displayed in English. You need to recommend the required Azure Cognitive Services endpoints for the planned graph. Which Cognitive Services endpoints should you recommend?

A. Language Detection, Content Moderator, and Key Phrase Extraction

B. Translator Text, Content Moderator, and Key Phrase Extraction

C. Language Detection, Sentiment Analysis, and Key Phrase Extraction

D. Translator Text, Sentiment Analysis, and Named Entity Recognition

Your company has an on-premises datacenter. You plan to publish an app that will recognize a set of individuals by using the Face API. The model is trained. You need to ensure that all images are processed in the on-premises datacenter. What should you deploy to host the Face API?

A. a Docker container

B. Azure File Sync

C. Azure Application Gateway

D. Azure Data Box Edge

You have Azure IoT Edge devices that generate streaming data. On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream. Solution: You deploy Azure Functions as an IoT Edge module. Does this meet the goal?

A. Yes

B. No

You are designing an AI solution that will analyze millions of pictures by using an Azure HDInsight Hadoop cluster. You need to recommend a solution for storing the pictures. The solution must minimize costs. Which storage solution should you recommend?

A. Azure Table storage

B. Azure File Storage

C. Azure Data Lake Storage Gen2

D. Azure Data Lake Storage Gen1

You are designing an AI solution that will analyze millions of pictures. You need to recommend a solution for storing the pictures. The solution must minimize costs. Which storage solution should you recommend?

A. an Azure Data Lake store

B. Azure File Storage

C. Azure Blob storage

D. Azure Table storage

You design an AI workflow that combines data from multiple data sources for analysis. The data sources are composed of: ✑ JSON files uploaded to an Azure Storage account ✑ On-premises Oracle databases ✑ Azure SQL databases Which service should you use to ingest the data?

A. Azure Data Factory

B. Azure SQL Data Warehouse

C. Azure Data Lake Storage

D. Azure Databricks

You need to create an IoT solution that performs the following tasks: ✑ Identifies hazards ✑ Provides a real-time online dashboard ✑ Takes images of an area every minute ✑ Counts the number of people in an area every minute Solution: You configure the IoT devices to send the images to an Azure IoT hub, and then you configure an Azure Automation call to Azure Cognitive Services that sends the results to an Azure event hub. You configure Microsoft Power BI to connect to the event hub by using Azure Stream Analytics. Does this meet the goal?

A. Yes

B. No

You have a database that contains sales data. You plan to process the sales data by using two data streams named Stream1 and Stream2. Stream1 will be used for purchase order data. Stream2 will be used for reference data. The reference data is stored in CSV files. You need to recommend an ingestion solution for each data stream. What two solutions should you recommend? Each correct answer is a complete solution. NOTE: Each correct selection is worth one point.

A. an Azure event hub for Stream1 and Azure Blob storage for Stream2

B. Azure Blob storage for Stream1 and Stream2

C. an Azure event hub for Stream1 and Stream2

D. Azure Blob storage for Stream1 and Azure Cosmos DB for Stream2

E. Azure Cosmos DB for Stream1 and an Azure event hub for Stream2
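
To make the difference between the two streams concrete, here is a minimal sketch in plain Python of enriching a fast-moving stream of purchase orders with slowly changing reference data loaded from CSV. It does not use any Azure service or SDK; the column names (`product_id`, `category`), the order dictionaries, and the `load_reference` and `enrich_orders` helpers are hypothetical.

```python
import csv
import io

# Hypothetical reference data; in the scenario this would live in CSV files.
REFERENCE_CSV = """product_id,category
P1,Hardware
P2,Software
"""


def load_reference(csv_text):
    """Parse the CSV text into a lookup table keyed by product_id."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["product_id"]: row["category"] for row in reader}


def enrich_orders(orders, reference):
    """Yield each order with a category looked up from the reference data."""
    for order in orders:
        # Orders for products missing from the reference data are kept, not dropped.
        category = reference.get(order["product_id"], "unknown")
        yield {**order, "category": category}


reference = load_reference(REFERENCE_CSV)
orders = [
    {"order_id": 1, "product_id": "P1", "quantity": 3},
    {"order_id": 2, "product_id": "P9", "quantity": 1},
]
enriched = list(enrich_orders(orders, reference))
```

The reference table is loaded once and reused for every order, while the orders are processed one at a time as they arrive; that asymmetry is what separates reference input from stream input.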

You are designing an AI workflow that performs data analysis from multiple data sources. The data sources consist of JSON files that have been uploaded to an Azure Storage account, on-premises Oracle databases, and Azure SQL databases. Which service should you recommend to ingest the data?

A. Azure Data Factory

B. Azure Kubernetes Service (AKS)

C. Azure Bot Service

D. Azure Databricks

You are configuring data persistence for a Microsoft Bot Framework application. The application requires a structured NoSQL cloud data store. You need to identify a storage solution for the application. The solution must minimize costs. What should you identify?

A. Azure Blob storage

B. Azure Cosmos DB

C. Azure HDInsight

D. Azure Table storage

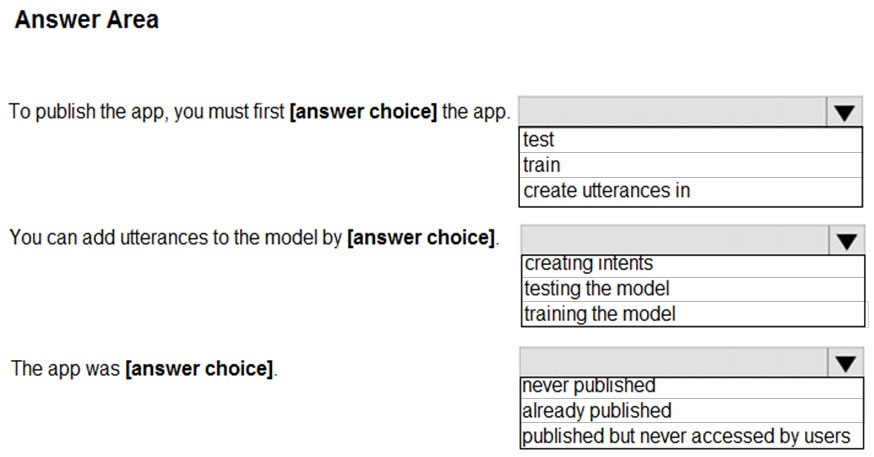

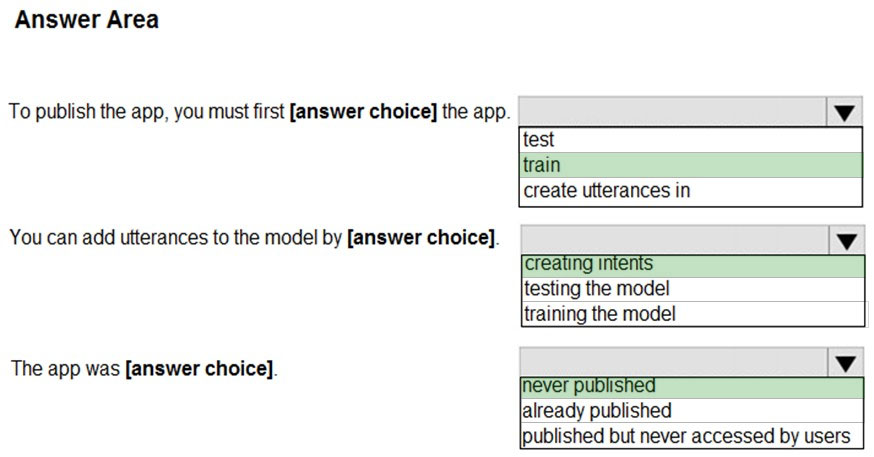

HOTSPOT - You have an app that uses the Language Understanding (LUIS) API as shown in the following exhibit. Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic. NOTE: Each correct selection is worth one point. Hot Area:

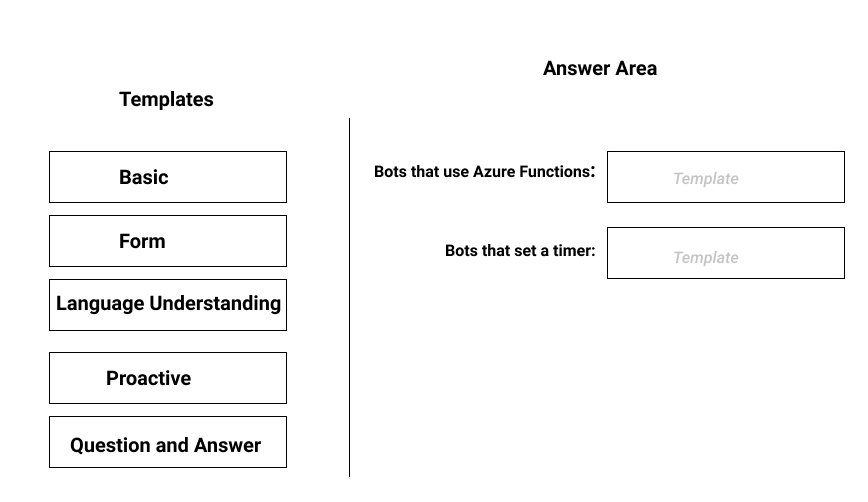

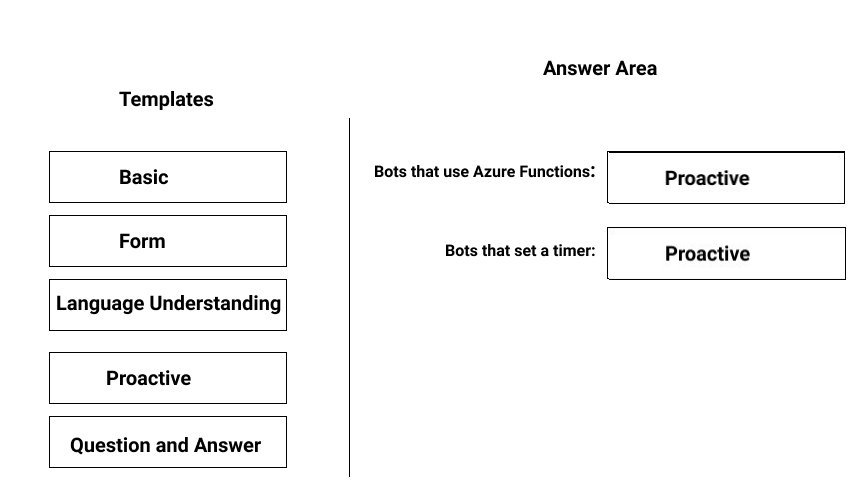

DRAG DROP - You plan to use the Microsoft Bot Framework to develop bots that will be deployed by using the Azure Bot Service. You need to configure the Azure Bot Service to support the following types of bots: ✑ Bots that use Azure Functions ✑ Bots that set a timer-based Which template should you use for each bot type? To answer, drag the appropriate templates to the correct bot type. Each template may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point. Select and Place:

You are developing an app that will analyze sensitive data from global users. Your app must adhere to the following compliance policies: The app must not store data in the cloud. The app must not use services in the cloud to process the data. Which of the following actions should you take?

A. Make use of Azure Machine Learning Studio

B. Make use of Docker containers for the Text Analytics

C. Make use of a Text Analytics container deployed to Azure Kubernetes Service

D. Make use of Microsoft Machine Learning (MML) for Apache Spark

Your company is developing an AI solution that will identify inappropriate text in multiple languages. You need to implement a Cognitive Services API that meets this requirement. You use Language Understanding (LUIS) to identify inappropriate text. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

Your company's developers have created an Azure Data Factory pipeline that moves data from an on-premises server to Azure Storage. The pipeline consumes Azure Cognitive Services APIs. You need to deploy the pipeline. Your solution must minimize custom code. You use Integration Runtime to move data to the cloud and Azure API Management to consume Cognitive Services APIs. Does this action accomplish your objective?

A. Yes, it does

B. No, it does not

You have Azure IoT Edge devices that generate streaming data. On the devices, you need to detect anomalies in the data by using Azure Machine Learning models. Once an anomaly is detected, the devices must add information about the anomaly to the Azure IoT Hub stream. Solution: You deploy an Azure Machine Learning model as an IoT Edge module. Does this meet the goal?

A. Yes

B. No

You have deployed 1,000 sensors for an AI application that you are developing. The sensors generate large amounts of data that is ingested on an hourly basis. You want your application to analyze the data generated by the sensors in real-time. Which of the following actions should you take?

A. Make use of Azure Kubernetes Service (AKS)

B. Make use of Azure Cosmos DB

C. Make use of an Azure HDInsight Hadoop cluster

D. Make use of Azure Data Factory

You design an AI solution that uses an Azure Stream Analytics job to process data from an Azure IoT hub. The IoT hub receives time series data from thousands of IoT devices at a factory. The job outputs millions of messages per second. Different applications consume the messages as they are available. The messages must be purged. You need to choose an output type for the job. What is the best output type to achieve the goal? More than one answer choice may achieve the goal.

A. Azure Event Hubs

B. Azure SQL Database

C. Azure Blob storage

D. Azure Cosmos DB

You plan to build an application that will perform predictive analytics. Users will be able to consume the application data by using Microsoft Power BI or a custom website. You need to ensure that you can audit application usage. Which auditing solution should you use?

A. Azure Storage Analytics

B. Azure Application Insights

C. Azure diagnostics logs

D. Azure Active Directory (Azure AD) reporting

Your company's marketing department is creating a social media campaign that will allow users to submit video messages for the company's social media sites. You are developing an AI app for the campaign. Your app must meet the following requirements: Add captions to the video messages before they are posted to the social media sites. Ensure that no negative video messages are posted to the social media sites. Which of the following actions should you take?

A. Implement Form Recognizer in your app.

B. Implement the Face API in your app.

C. Implement Custom Vision in your app.

D. Implement Video Indexer in your app.

You plan to deploy an AI solution that tracks the behavior of 10 custom mobile apps. Each mobile app has several thousand users. You need to recommend a solution for real-time data ingestion for the data originating from the mobile app users. Which Microsoft Azure service should you include in the recommendation?

A. Azure Event Hubs

B. Azure Service Bus queues

C. Azure Service Bus topics and subscriptions

D. Apache Storm on Azure HDInsight

You are developing an application that uses an Azure Kubernetes Service (AKS) cluster. You are troubleshooting a node issue. You need to connect to an AKS node by using SSH. Solution: You add an SSH key to the node, and then you create an SSH connection. Does this meet the goal?

A. Yes

B. No
